Welcome

1. Introduction

1.1. Introduction

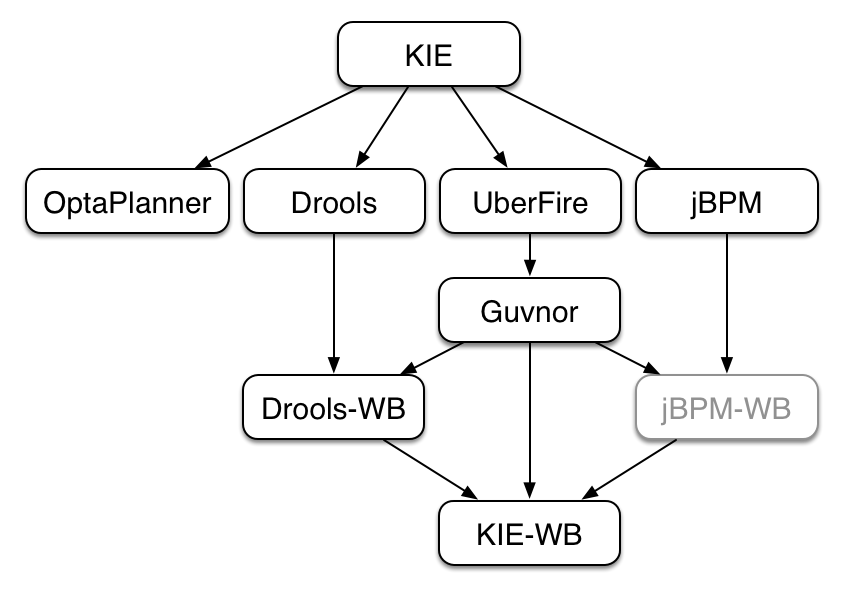

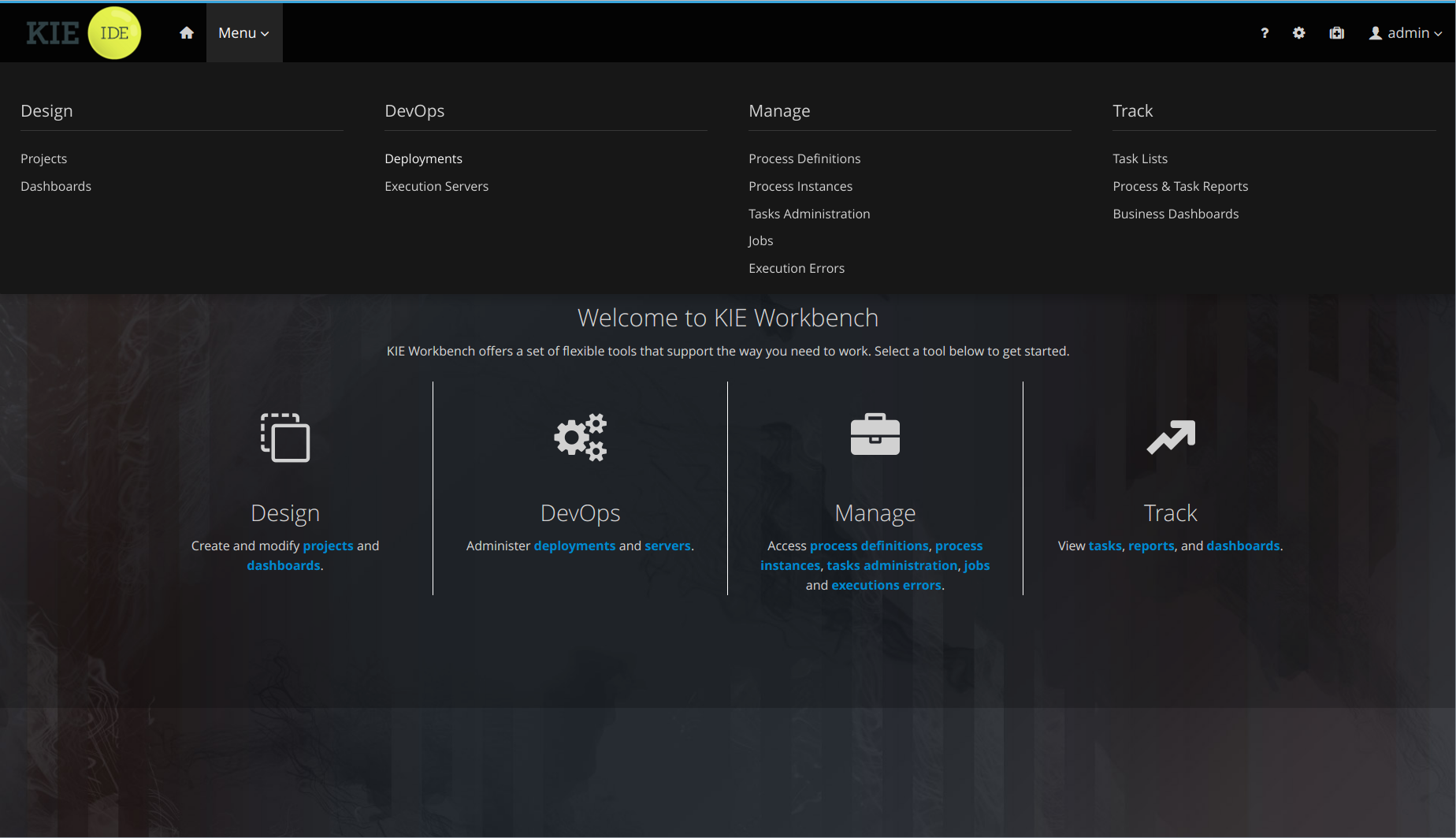

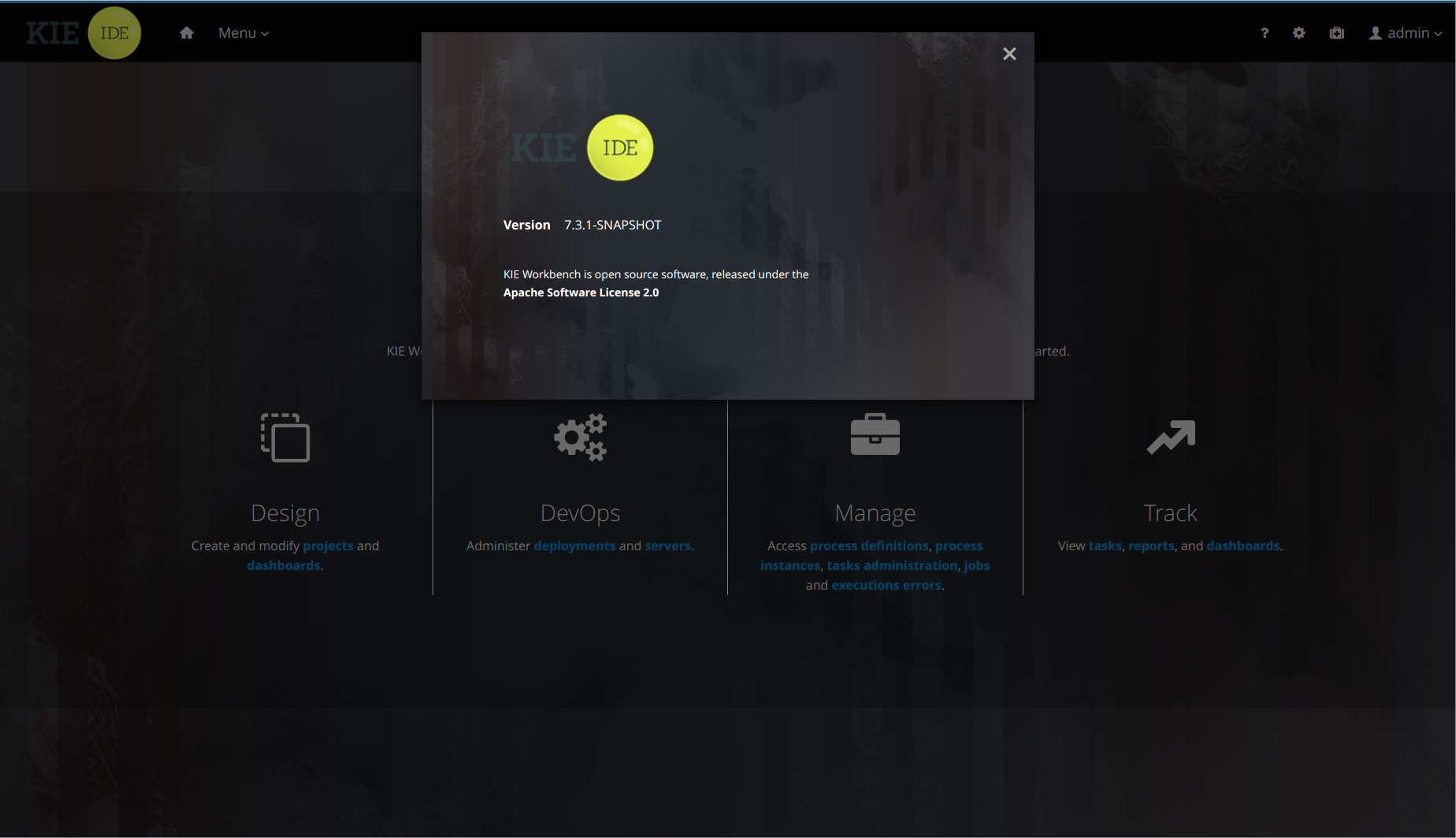

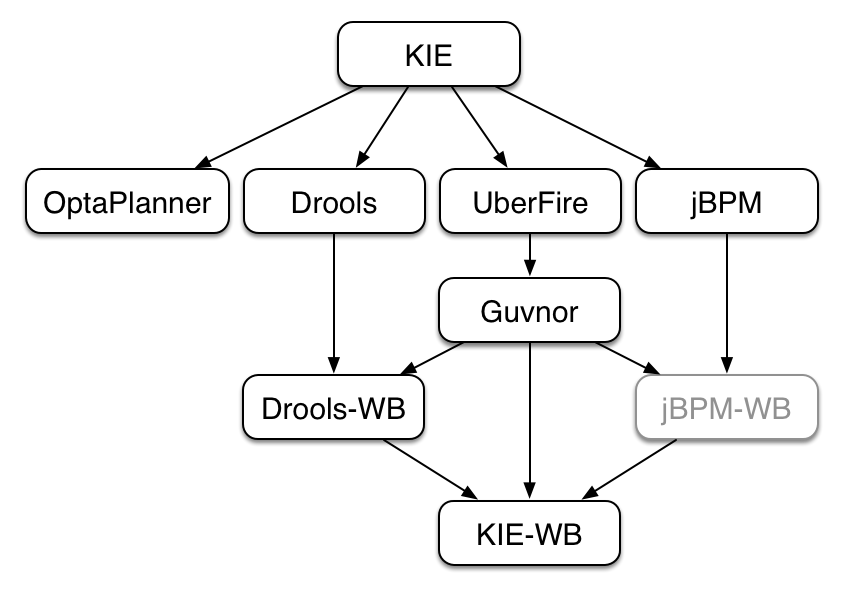

KIE (Knowledge Is Everything) is an umbrella project introduced to bring our related technologies together under one roof. It also acts as the core shared between our projects.

KIE contains the following different but related projects offering a complete portfolio of solutions for business automation and management:

-

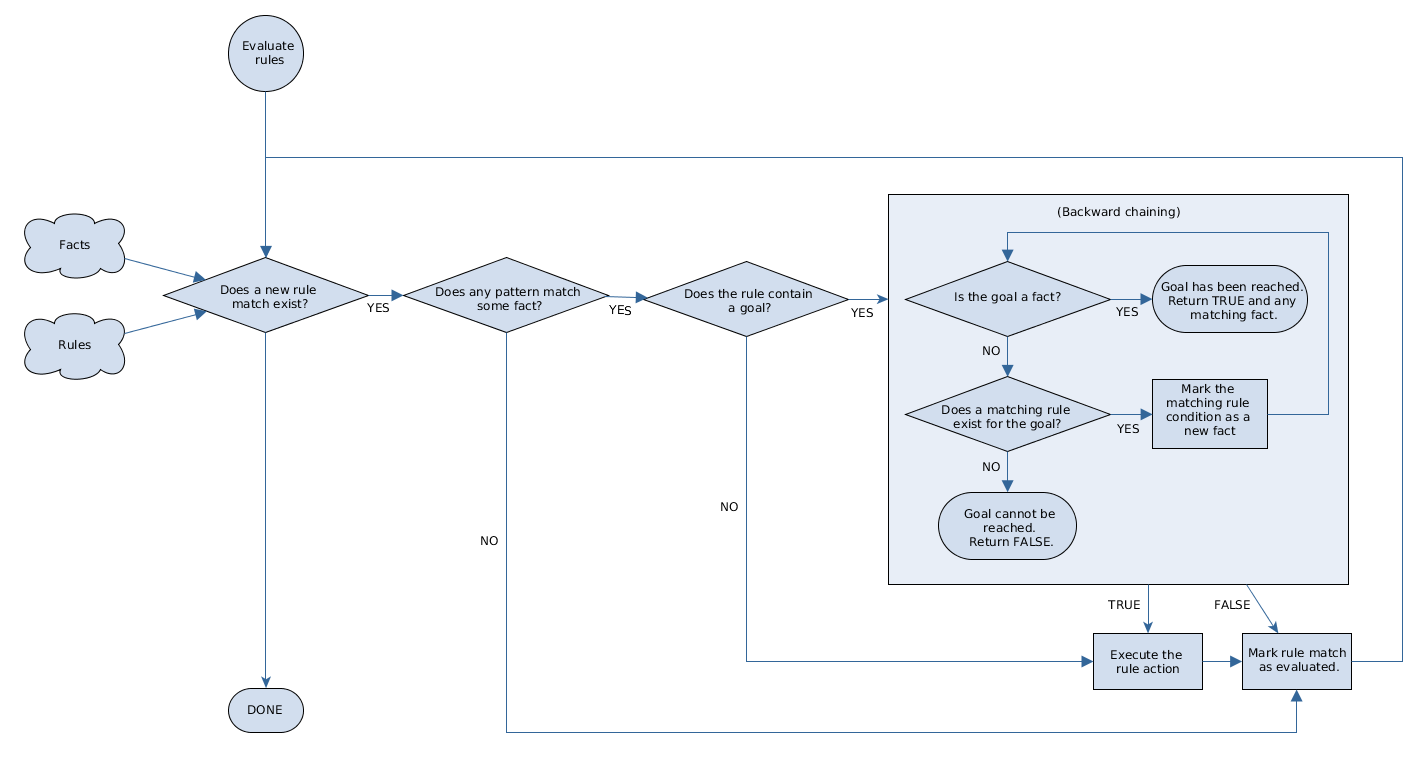

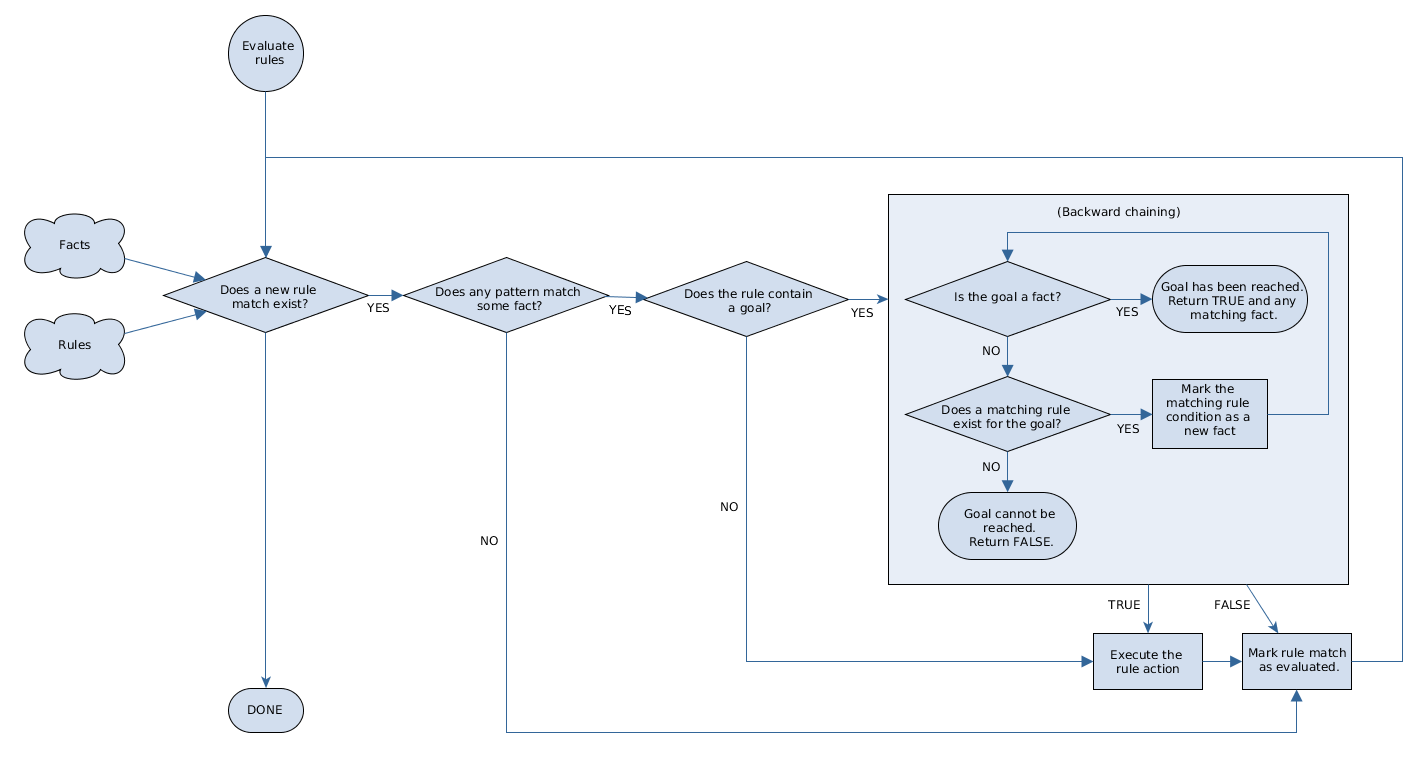

Drools is a business-rule management system with a forward-chaining and backward-chaining inference-based rules engine, allowing fast and reliable evaluation of business rules and complex event processing. A rules engine is also a fundamental building block to create an expert system which, in artificial intelligence, is a computer system that emulates the decision-making ability of a human expert.

-

jBPM is a flexible Business Process Management suite allowing you to model your business goals by describing the steps that need to be executed to achieve those goals.

-

OptaPlanner is a constraint solver that optimizes use cases such as employee rostering, vehicle routing, task assignment and cloud optimization.

-

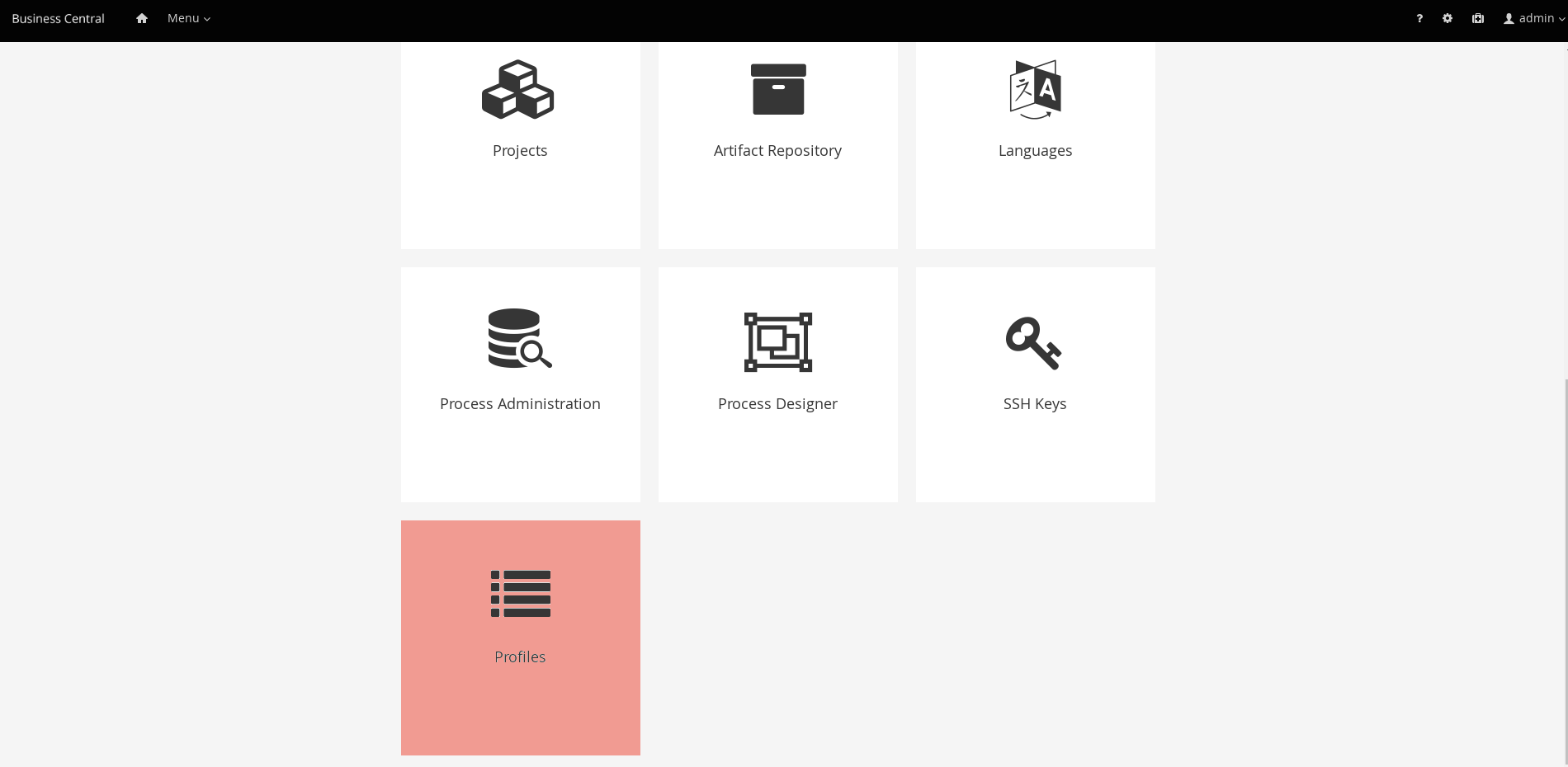

Business Central is a full featured web application for the visual composition of custom business rules and processes.

-

UberFire is a web-based workbench framework inspired by Eclipse Rich Client Platform.

The 7.x series will follow a more agile approach with more regular and iterative releases. We plan to do some bigger changes than normal for a series of minor releases, and users need to be aware those are coming before adopting.

-

UI sections and links will become object oriented, rather than task oriented. https://en.wikipedia.org/wiki/Object-oriented_user_interface

-

Authoring/Library will become project oriented, rather than repository oriented. You’ll create, browse and open projects rather than repositories. The repository concept will be pushed lower, for instance it’ll be created automatically when you create the project.

-

The old form modeller will be removed and only the new one made available. Although old forms will continue to render.

-

The new designer will continue to mature with more nodes and improved UXD. Eventually it’ll become the default editor, but we will not remove the old one until there is feature parity in BPMN2 support.

-

Continued UXD improvements in lots of places.

-

We will introduce the AppFormer project, this will be a re-org and consolidation of existing projects and result in some artifact renames. UberFire will become AppFormer-Core, forms, data modeller and dashbuilder will come under AppFormer. Dashbuilder will most likely be called Appformer-Insight.

The 8.x series will come towards the end of this year. We have ongoing parallel work to introduce concepts of workspaces with improved git support, that will have a built in workflow for forking and pull requests. This will be combined with horizontal scaling and improved high availability. These changes are important for usability and cloud scalability, but too much of a change for a minor release, hence the bump to 8.x

1.2. Getting Involved

We are often asked "How do I get involved". Luckily the answer is simple, just write some code and submit it :) There are no hoops you have to jump through or secret handshakes. We have a very minimal "overhead" that we do request to allow for scalable project development. Below we provide a general overview of the tools and "workflow" we request, along with some general advice.

If you contribute some good work, don’t forget to blog about it :)

1.2.1. Sign up to jboss.org

Signing to jboss.org will give you access to the JBoss wiki, forums and JIRA. Go to https://www.jboss.org/ and click "Register".

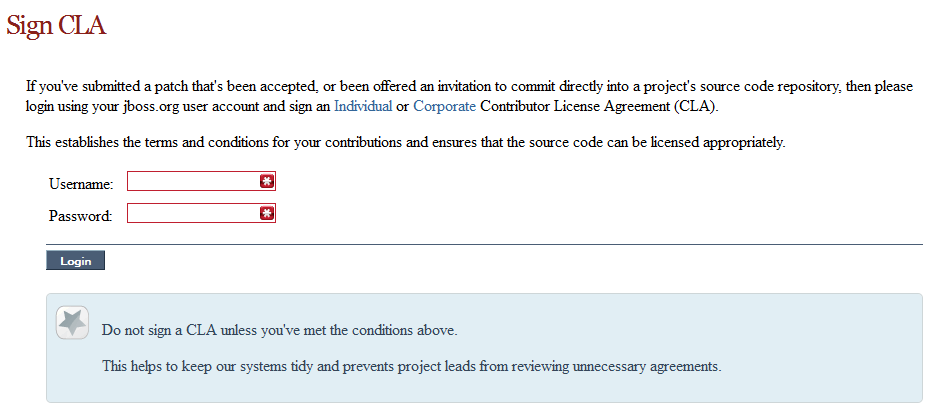

1.2.2. Sign the Contributor Agreement

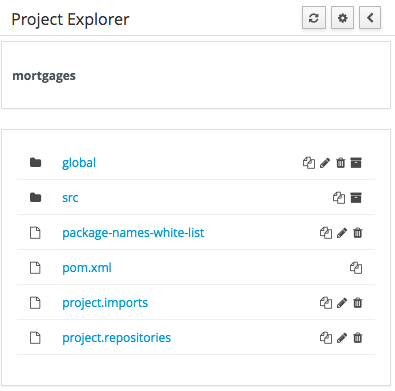

The only form you need to sign is the contributor agreement, which is fully automated via the web. As the image below says "This establishes the terms and conditions for your contributions and ensures that source code can be licensed appropriately"

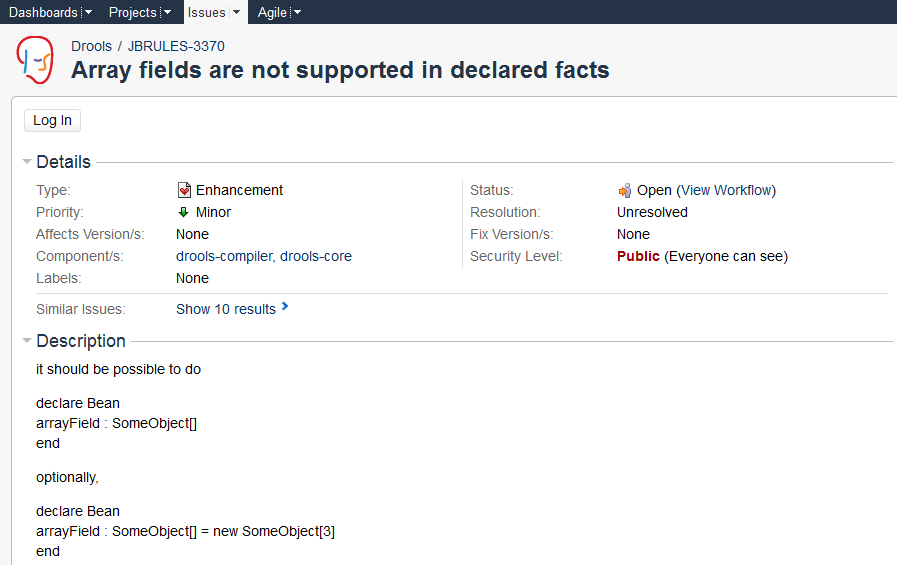

1.2.3. Submitting issues via JIRA

To be able to interact with the core development team you will need to use JIRA, the issue tracker. This ensures that all requests are logged and allocated to a release schedule and all discussions captured in one place. Bug reports, bug fixes, feature requests and feature submissions should all go here. General questions should be undertaken at the mailing lists.

Minor code submissions, like format or documentation fixes do not need an associated JIRA issue created.

1.2.4. Fork GitHub

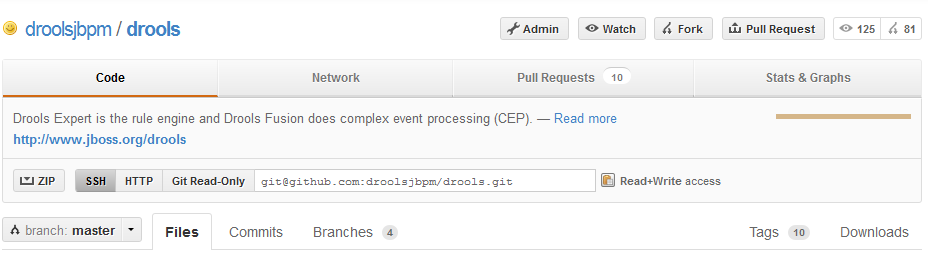

With the contributor agreement signed and your requests submitted to JIRA you should now be ready to code :) Create a GitHub account and fork any of the Drools, jBPM or Guvnor repositories. The fork will create a copy in your own GitHub space which you can work on at your own pace. If you make a mistake, don’t worry blow it away and fork again. Note each GitHub repository provides you the clone (checkout) URL, GitHub will provide you URLs specific to your fork.

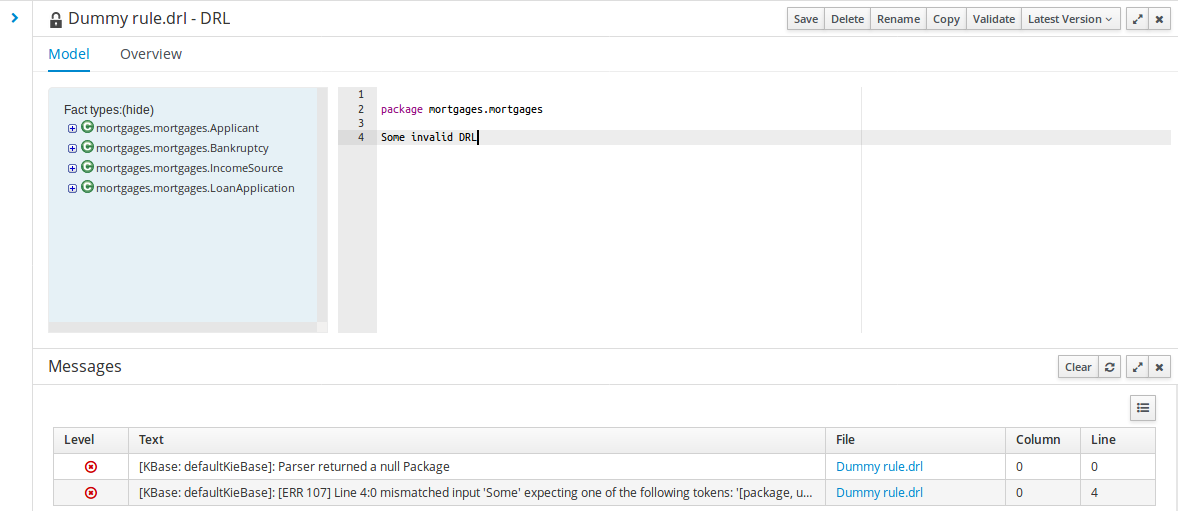

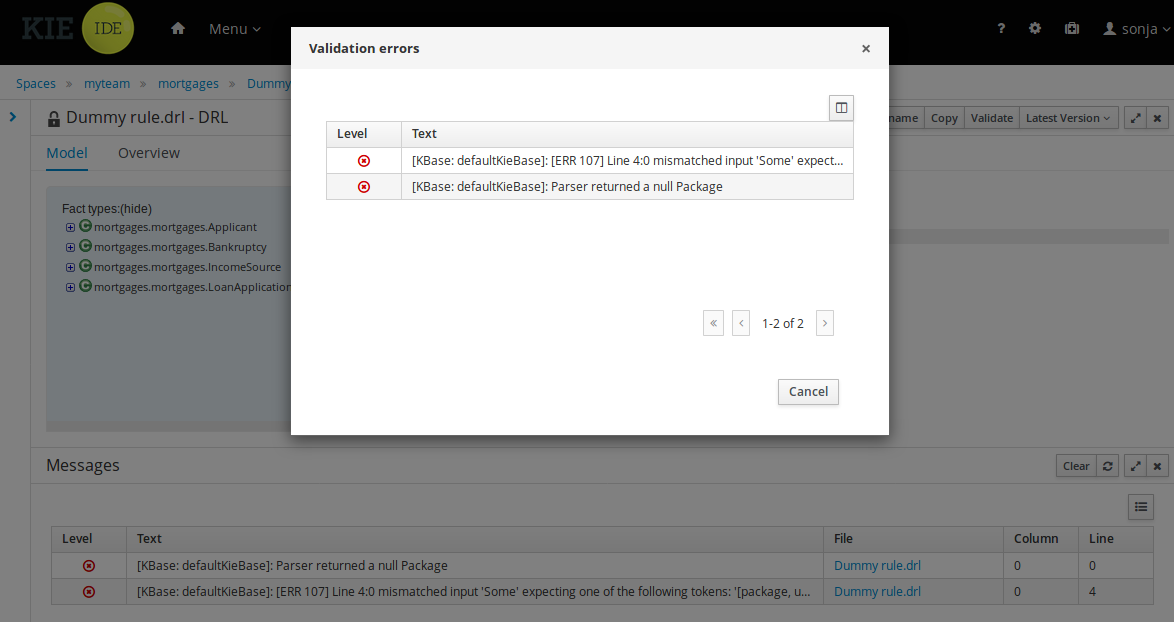

1.2.5. Writing Tests

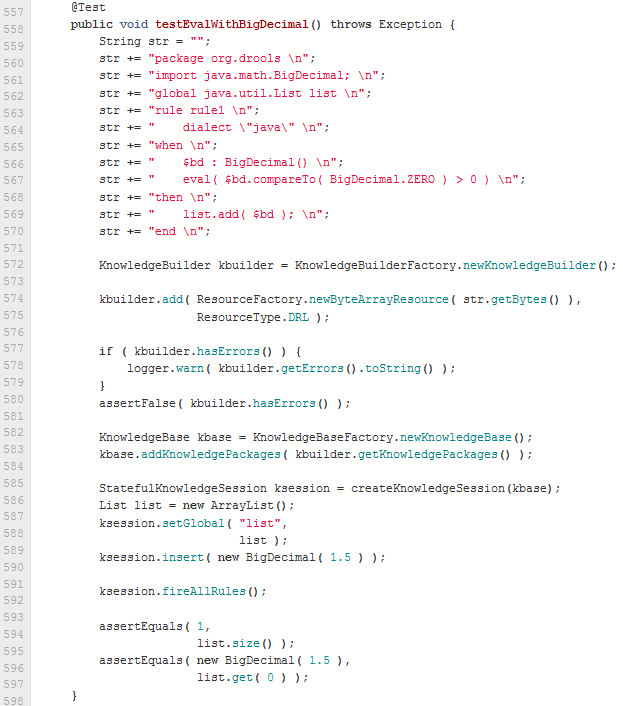

When writing tests, try and keep them minimal and self contained. We prefer to keep the DRL fragments within the test, as it makes for quicker reviewing. If there are a large number of rules then using a String is not practical so then by all means place them in separate DRL files instead to be loaded from the classpath. If your tests need to use a model, please try to use those that already exist for other unit tests; such as Person, Cheese or Order. If no classes exist that have the fields you need, try and update fields of existing classes before adding a new class.

There are a vast number of tests to look over to get an idea, MiscTest is a good place to start.

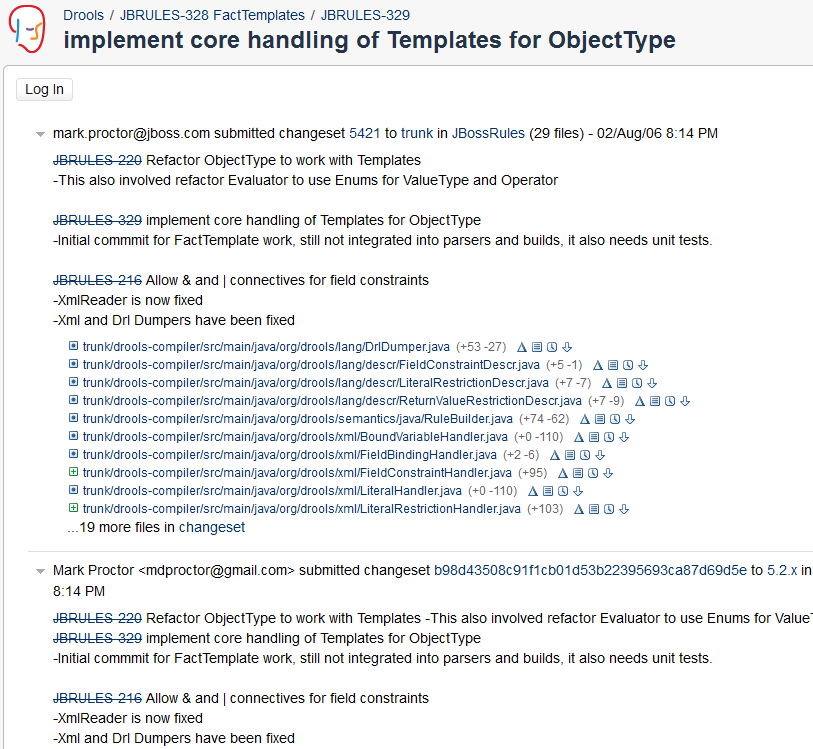

1.2.6. Commit with Correct Conventions

When you commit, make sure you use the correct conventions. The commit must start with the JIRA issue id, such as DROOLS-1946. This ensures the commits are cross referenced via JIRA, so we can see all commits for a given issue in the same place. After the id the title of the issue should come next. Then use a newline, indented with a dash, to provide additional information related to this commit. Use an additional new line and dash for each separate point you wish to make. You may add additional JIRA cross references to the same commit, if it’s appropriate. In general try to avoid combining unrelated issues in the same commit.

Don’t forget to rebase your local fork from the primary branch and then push your commits back to your fork.

1.2.7. Submit Pull Requests

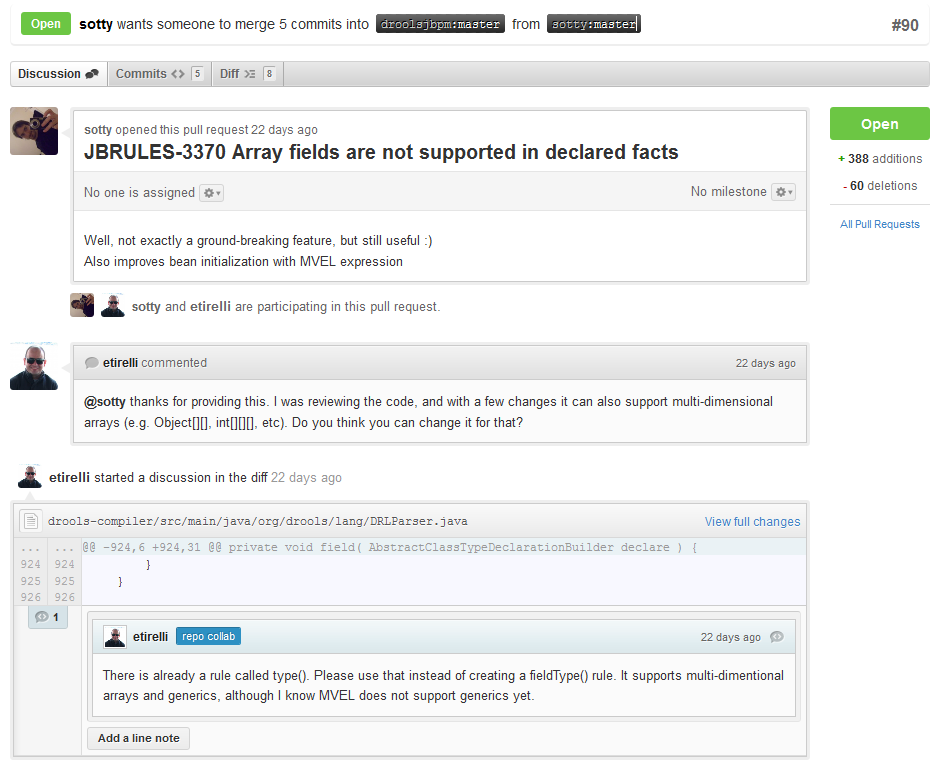

With your code rebased from primary branch and pushed to your personal GitHub area, you can now submit your work as a pull request. If you look at the top of the page in GitHub for your work area there will be a "Pull Request" button. Selecting this will then provide a gui to automate the submission of your pull request.

The pull request then goes into a queue for everyone to see and comment on. Below you can see a typical pull request. The pull requests allow for discussions and it shows all associated commits and the diffs for each commit. The discussions typically involve code reviews which provide helpful suggestions for improvements, and allows for us to leave inline comments on specific parts of the code. Don’t be disheartened if we don’t merge straight away, it can often take several revisions before we accept a pull request. Luckily GitHub makes it very trivial to go back to your code, do some more commits and then update your pull request to your latest and greatest.

It can take time for us to get round to responding to pull requests, so please be patient. Submitted tests that come with a fix will generally be applied quite quickly, where as just tests will often way until we get time to also submit that with a fix. Don’t forget to rebase and resubmit your request from time to time, otherwise over time it will have merge conflicts and core developers will general ignore those.

1.3. Installation and Setup (Core and IDE)

1.3.1. Installing and using

Drools engine provides an Eclipse-based IDE, which is optional. However, the Eclipse-based IDE will be deprecated in a future release.

The latest working version of the Eclipse-based IDE is 7.46.0.Final and can be used with the recent versions of Drools.

A simple way to get started is to download and install the Eclipse plug-in - this will also require the Eclipse GEF framework to be installed (see below, if you don’t have it installed already). This will provide you with all the dependencies you need to get going: you can simply create a new rule project and everything will be done for you. Refer to the chapter on Business Central and IDE for detailed instructions on this. Installing the Eclipse plug-in is generally as simple as unzipping a file into your Eclipse plug-in directory.

Use of the Eclipse plug-in is not required. Rule files are just textual input (or spreadsheets as the case may be) and the IDE (also known as Business Central) is just a convenience. People have integrated the Drools engine in many ways, there is no "one size fits all".

Alternatively, you can download the binary distribution, and include the relevant JARs in your projects classpath.

1.3.1.1. Dependencies and JARs

Drools is broken down into a few modules, some are required during rule development/compiling, and some are required at runtime. In many cases, people will simply want to include all the dependencies at runtime, and this is fine. It allows you to have the most flexibility. However, some may prefer to have their "runtime" stripped down to the bare minimum, as they will be deploying rules in binary form - this is also possible. The core Drools engine can be quite compact, and only requires a few 100 kilobytes across 3 JAR files.

The following is a description of the important libraries that make up JBoss Drools

-

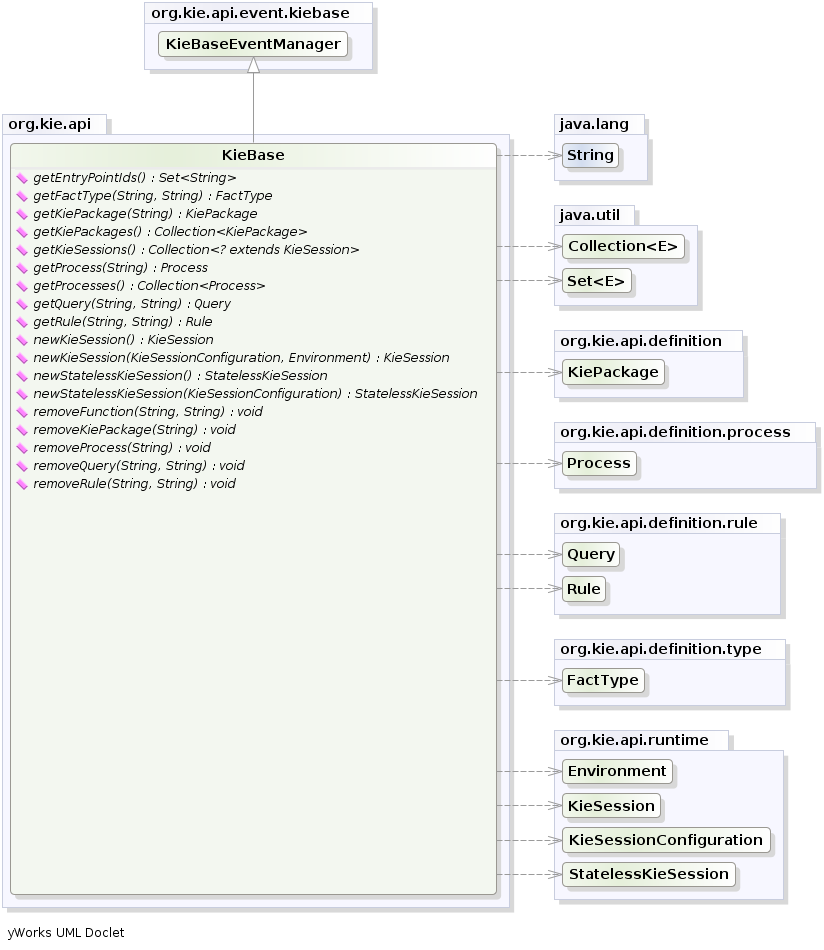

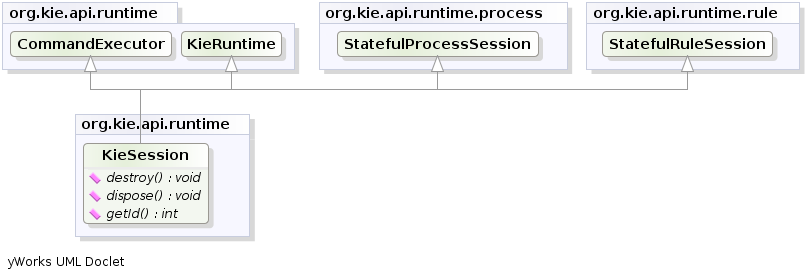

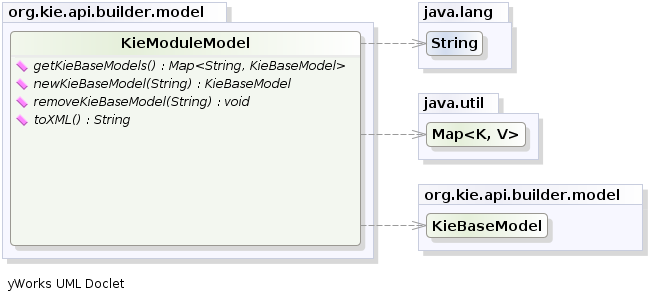

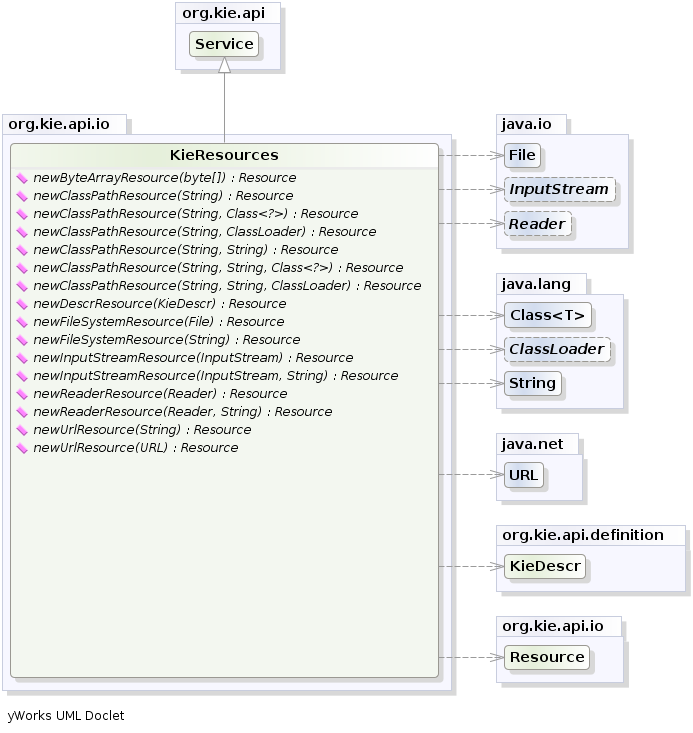

knowledge-api.jar - this provides the interfaces and factories. It also helps clearly show what is intended as a user API and what is just an engine API.

-

knowledge-internal-api.jar - this provides internal interfaces and factories.

-

drools-core.jar - this is the core Drools engine, runtime component. Contains both the RETE engine and the LEAPS engine. This is the only runtime dependency if you are pre-compiling rules (and deploying via Package or RuleBase objects).

-

drools-compiler.jar - this contains the compiler/builder components to take rule source, and build executable rule bases. This is often a runtime dependency of your application, but it need not be if you are pre-compiling your rules. This depends on drools-core.

-

drools-jsr94.jar - this is the JSR-94 compliant implementation, this is essentially a layer over the drools-compiler component. Note that due to the nature of the JSR-94 specification, not all features are easily exposed via this interface. In some cases, it will be easier to go direct to the Drools API, but in some environments the JSR-94 is mandated.

-

drools-decisiontables.jar - this is the decision tables 'compiler' component, which uses the drools-compiler component. This supports both excel and CSV input formats.

There are quite a few other dependencies which the above components require, most of which are for the drools-compiler, drools-jsr94 or drools-decisiontables module. Some key ones to note are "POI" which provides the spreadsheet parsing ability, and "antlr" which provides the parsing for the rule language itself.

| if you are using Drools in J2EE or servlet containers and you come across classpath issues with "JDT", then you can switch to the janino compiler. Set the system property "drools.compiler": For example: -Ddrools.compiler=JANINO. |

For up to date info on dependencies in a release, consult the released POMs, which can be found on the Maven repository.

1.3.1.2. Use with Maven, Gradle, Ivy, Buildr or Ant

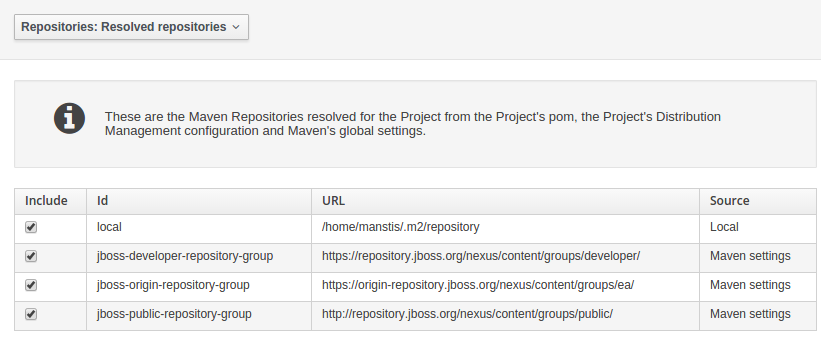

The JARs are also available in the central Maven repository (and also in https://repository.jboss.org/nexus/index.html#nexus-search;gavorg.drools~[the JBoss Maven repository]).

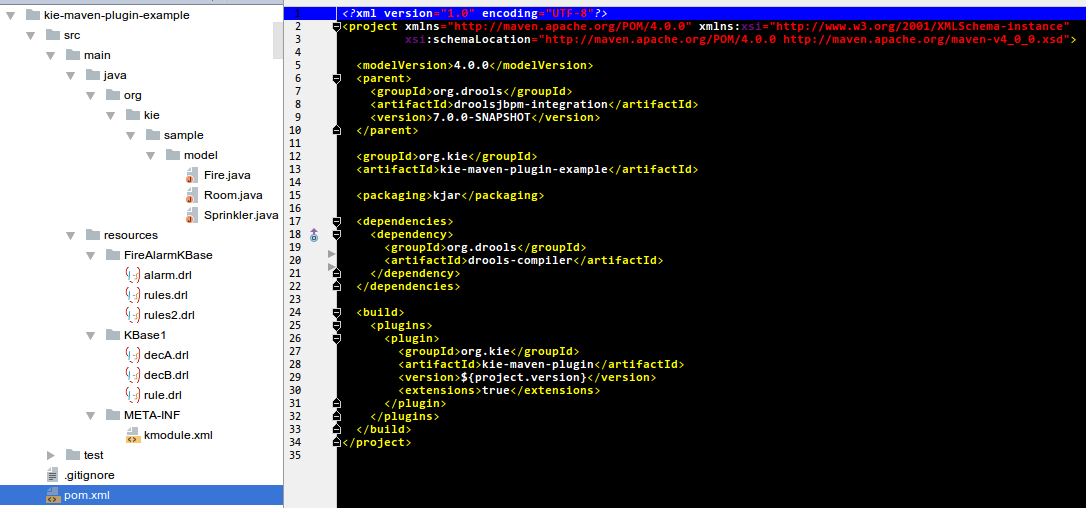

If you use Maven, add KIE and Drools dependencies in your project’s pom.xml like this:

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.drools</groupId>

<artifactId>drools-bom</artifactId>

<type>pom</type>

<version>...</version>

<scope>import</scope>

</dependency>

...

</dependencies>

</dependencyManagement>

<dependencies>

<dependency>

<groupId>org.kie</groupId>

<artifactId>kie-api</artifactId>

</dependency>

<dependency>

<groupId>org.drools</groupId>

<artifactId>drools-compiler</artifactId>

<scope>runtime</scope>

</dependency>

...

<dependencies>This is similar for Gradle, Ivy and Buildr. To identify the latest version, check the Maven repository.

If you’re still using Ant (without Ivy), copy all the JARs from the download zip’s binaries directory and manually verify that your classpath doesn’t contain duplicate JARs.

1.3.1.3. Runtime

The "runtime" requirements mentioned here are if you are deploying rules as their binary form (either as KnowledgePackage objects, or KnowledgeBase objects etc). This is an optional feature that allows you to keep your runtime very light. You may use drools-compiler to produce rule packages "out of process", and then deploy them to a runtime system. This runtime system only requires drools-core.jar and knowledge-api for execution. This is an optional deployment pattern, and many people do not need to "trim" their application this much, but it is an ideal option for certain environments.

1.3.1.4. Installing IDE (Rule Workbench)

The rule workbench (for Eclipse) requires that you have Eclipse 3.4 or greater, as well as Eclipse GEF 3.4 or greater. You can install it either by downloading the plug-in or using the update site.

Another option is to use the JBoss IDE, which comes with all the plug-in requirements pre packaged, as well as a choice of other tools separate to rules. You can choose just to install rules from the "bundle" that JBoss IDE ships with.

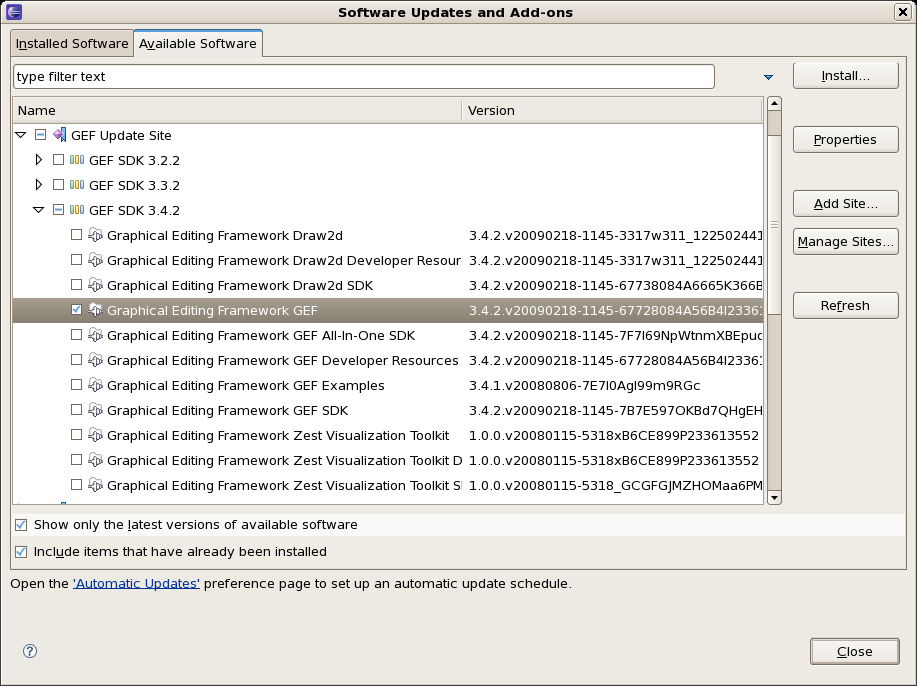

Installing GEF (a required dependency)

GEF is the Eclipse Graphical Editing Framework, which is used for graph viewing components in the plug-in.

If you don’t have GEF installed, you can install it using the built in update mechanism (or downloading GEF from the Eclipse.org website not recommended). JBoss IDE has GEF already, as do many other "distributions" of Eclipse, so this step may be redundant for some people.

Open the Help→Software updates…→Available Software→Add Site… from the help menu. Location is:

http://download.eclipse.org/tools/gef/updates/releases/Next you choose the GEF plug-in:

Press next, and agree to install the plug-in (an Eclipse restart may be required). Once this is completed, then you can continue on installing the rules plug-in.

Installing GEF from zip file

To install from the zip file, download and unzip the file. Inside the zip you will see a plug-in directory, and the plug-in JAR itself. You place the plug-in JAR into your Eclipse applications plug-in directory, and restart Eclipse.

Installing Drools plug-in from zip file

Download the Drools Eclipse IDE plugin from the link below. Unzip the downloaded file in your main eclipse folder (do not just copy the file there, extract it so that the feature and plugin JARs end up in the features and plugin directory of eclipse) and (re)start Eclipse.

To check that the installation was successful, try opening the Drools perspective: Click the 'Open Perspective' button in the top right corner of your Eclipse window, select 'Other…' and pick the Drools perspective. If you cannot find the Drools perspective as one of the possible perspectives, the installation probably was unsuccessful. Check whether you executed each of the required steps correctly: Do you have the right version of Eclipse (3.4.x)? Do you have Eclipse GEF installed (check whether the org.eclipse.gef_3.4..jar exists in the plugins directory in your eclipse root folder)? Did you extract the Drools Eclipse plugin correctly (check whether the org.drools.eclipse_.jar exists in the plugins directory in your eclipse root folder)? If you cannot find the problem, try contacting us (e.g. on irc or on the user mailing list), more info can be found no our homepage here:

Drools Runtimes

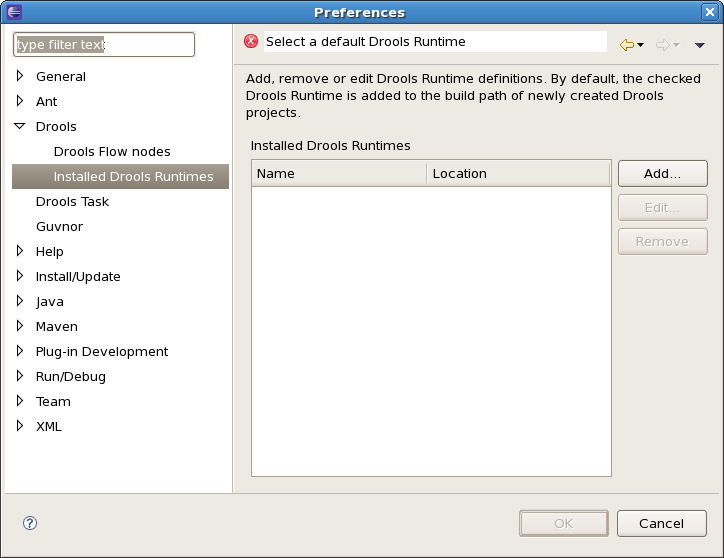

A Drools runtime is a collection of JARs on your file system that represent one specific release of the Drools project JARs. To create a runtime, you must point the IDE to the release of your choice. If you want to create a new runtime based on the latest Drools project JARs included in the plugin itself, you can also easily do that. You are required to specify a default Drools runtime for your Eclipse workspace, but each individual project can override the default and select the appropriate runtime for that project specifically.

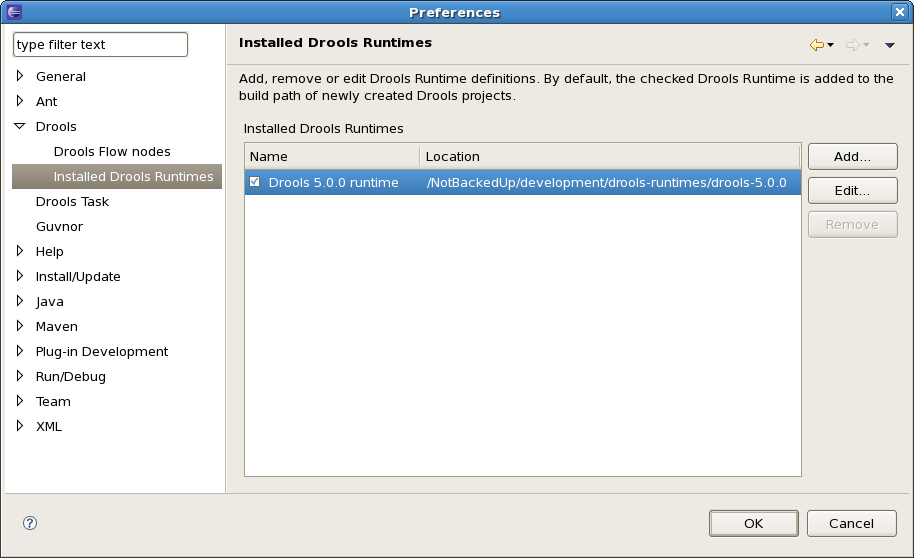

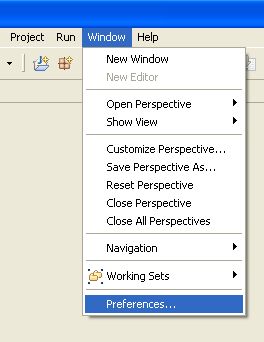

You are required to define one or more Drools runtimes using the Eclipse preferences view. To open up your preferences, in the menu Window select the Preferences menu item. A new preferences dialog should show all your preferences. On the left side of this dialog, under the Drools category, select "Installed Drools runtimes". The panel on the right should then show the currently defined Drools runtimes. If you have not yet defined any runtimes, it should like something like the figure below.

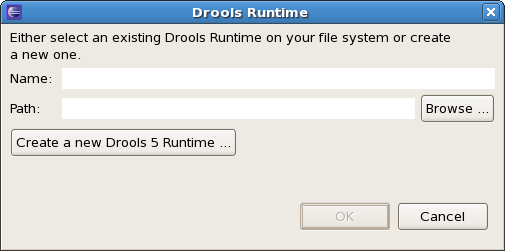

To define a new Drools runtime, click the add button. A dialog as shown below should pop up, requiring the name for your runtime and the location on your file system where it can be found.

In general, you have two options:

-

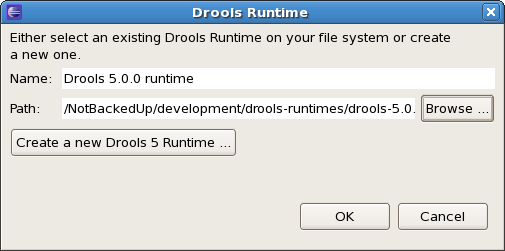

If you simply want to use the default JARs as included in the Drools Eclipse plugin, you can create a new Drools runtime automatically by clicking the "Create a new Drools 5 runtime …" button. A file browser will show up, asking you to select the folder on your file system where you want this runtime to be created. The plugin will then automatically copy all required dependencies to the specified folder. After selecting this folder, the dialog should look like the figure shown below.

-

If you want to use one specific release of the Drools project, you should create a folder on your file system that contains all the necessary Drools libraries and dependencies. Instead of creating a new Drools runtime as explained above, give your runtime a name and select the location of this folder containing all the required JARs.

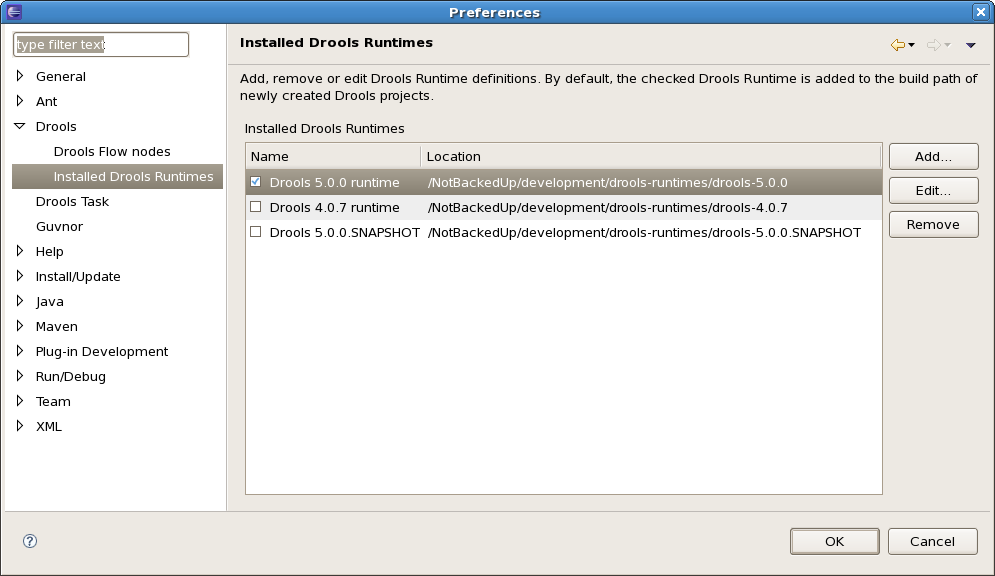

After clicking the OK button, the runtime should show up in your table of installed Drools runtimes, as shown below. Click checkbox in front of the newly created runtime to make it the default Drools runtime. The default Drools runtime will be used as the runtime of all your Drools project that have not selected a project-specific runtime.

You can add as many Drools runtimes as you need. For example, the screenshot below shows a configuration where three runtimes have been defined: a Drools 4.0.7 runtime, a Drools 5.0.0 runtime and a Drools 5.0.0.SNAPSHOT runtime. The Drools 5.0.0 runtime is selected as the default one.

Note that you will need to restart Eclipse if you changed the default runtime and you want to make sure that all the projects that are using the default runtime update their classpath accordingly.

Whenever you create a Drools project (using the New Drools Project wizard or by converting an existing Java project to a Drools project using the "Convert to Drools Project" action that is shown when you are in the Drools perspective and you right-click an existing Java project), the plugin will automatically add all the required JARs to the classpath of your project.

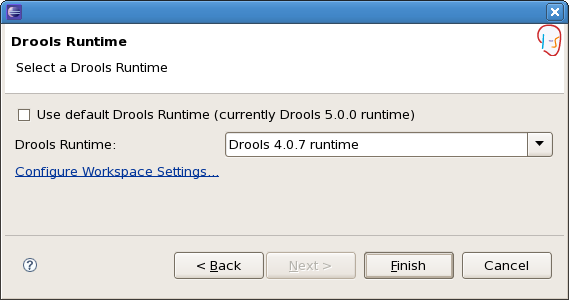

When creating a new Drools project, the plugin will automatically use the default Drools runtime for that project, unless you specify a project-specific one. You can do this in the final step of the New Drools Project wizard, as shown below, by deselecting the "Use default Drools runtime" checkbox and selecting the appropriate runtime in the drop-down box. If you click the "Configure workspace settings …" link, the workspace preferences showing the currently installed Drools runtimes will be opened, so you can add new runtimes there.

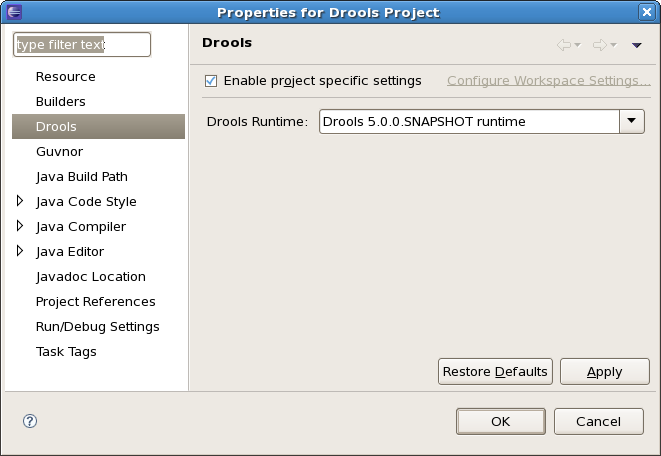

You can change the runtime of a Drools project at any time by opening the project properties (right-click the project and select Properties) and selecting the Drools category, as shown below. Check the "Enable project specific settings" checkbox and select the appropriate runtime from the drop-down box. If you click the "Configure workspace settings …" link, the workspace preferences showing the currently installed Drools runtimes will be opened, so you can add new runtimes there. If you deselect the "Enable project specific settings" checkbox, it will use the default runtime as defined in your global preferences.

1.3.2. Building from source

1.3.2.1. Getting the sources

The source code of each Maven artifact is available in the JBoss Maven repository as a source JAR. The same source JARs are also included in the download zips. However, if you want to build from source, it’s highly recommended to get our sources from our source control.

Git allows you to fork our code, independently make personal changes on it, yet still merge in our latest changes regularly and optionally share your changes with us. To learn more about git, read the free book Git Pro.

1.3.2.2. Building the sources

In essence, building from source is very easy, for example if you want to build the guvnor project:

$ git clone git@github.com:kiegroup/guvnor.git

...

$ cd guvnor

$ mvn clean install -DskipTests -Dfull

...However, there are a lot potential pitfalls, so if you’re serious about building from source and possibly contributing to the project, follow the instructions in the README file in droolsjbpm-build-bootstrap.

1.3.3. Eclipse

1.3.3.1. Importing Eclipse Projects

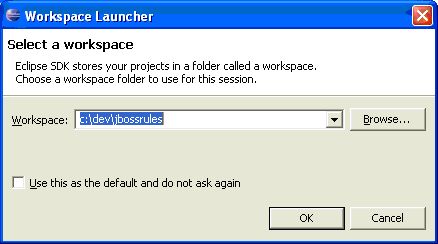

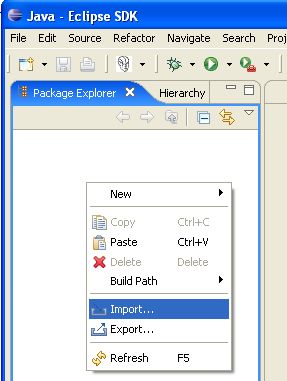

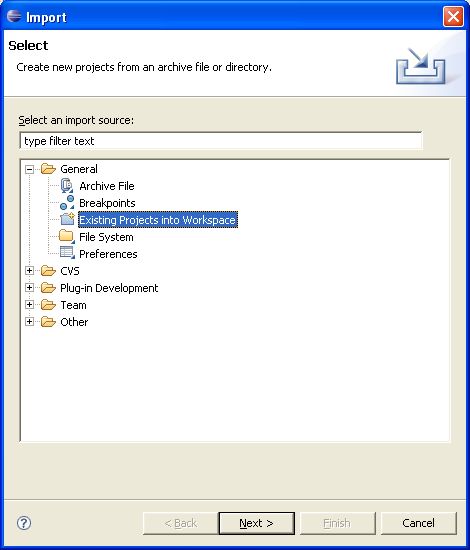

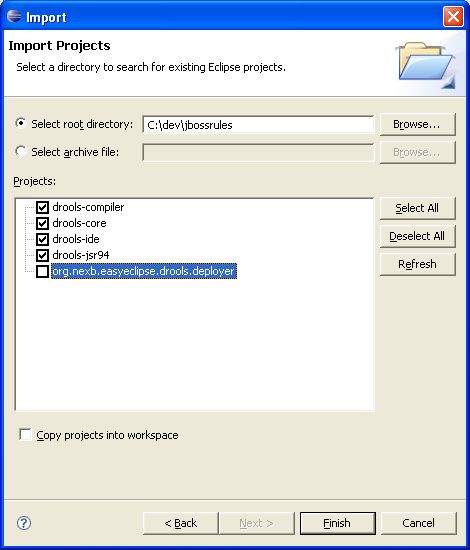

With the Eclipse project files generated they can now be imported into Eclipse. When starting Eclipse open the workspace in the root of your subversion checkout.

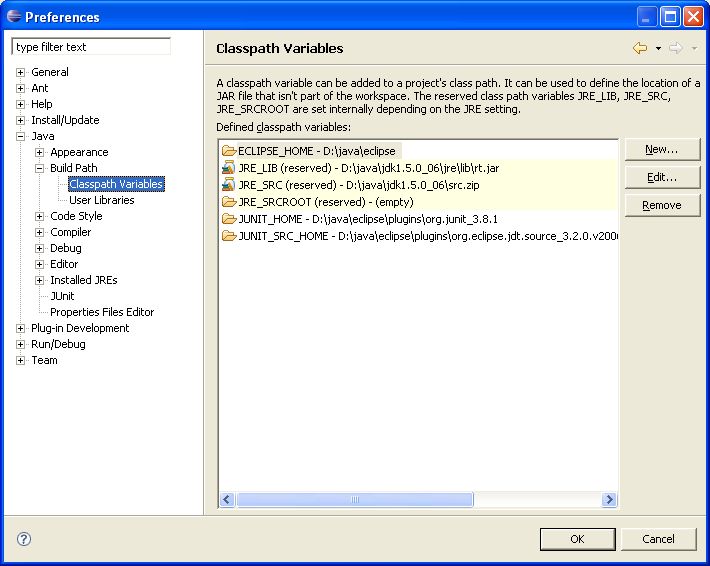

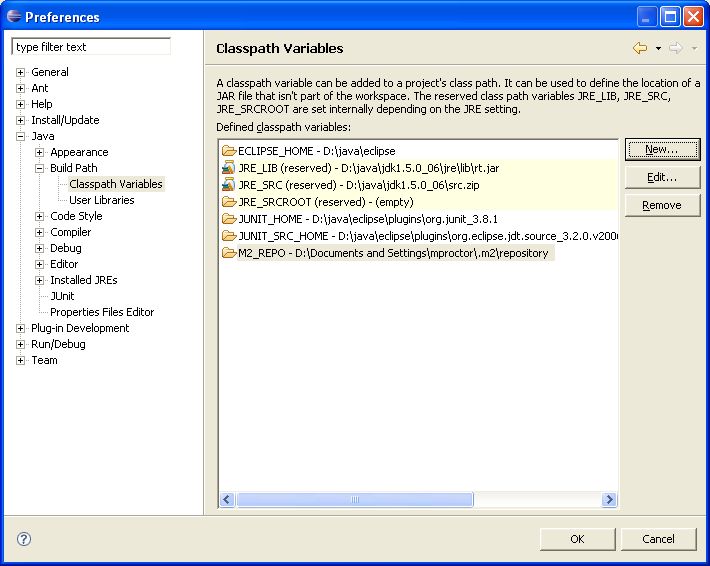

When calling mvn install all the project dependencies were downloaded and added to the local Maven repository.

Eclipse cannot find those dependencies unless you tell it where that repository is.

To do this setup an M2_REPO classpath variable.

Getting started

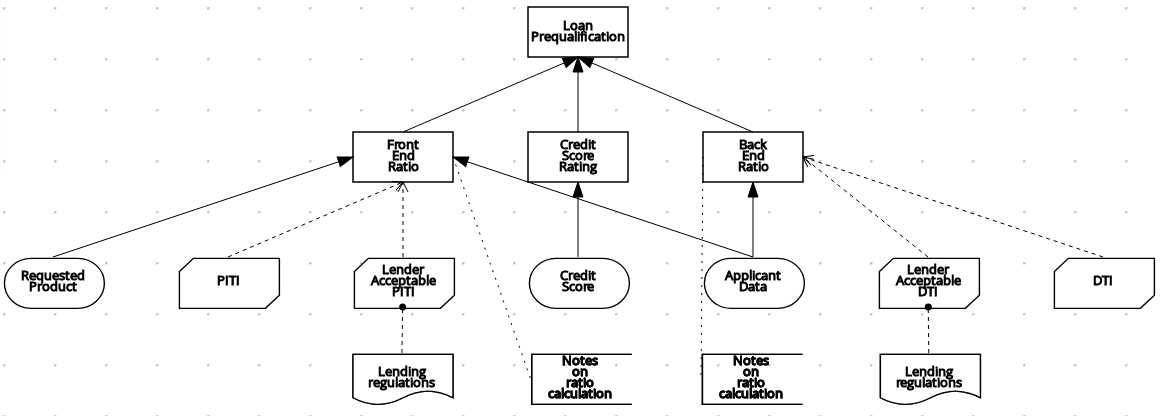

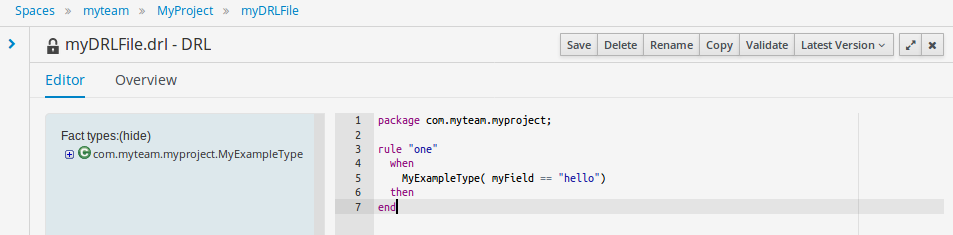

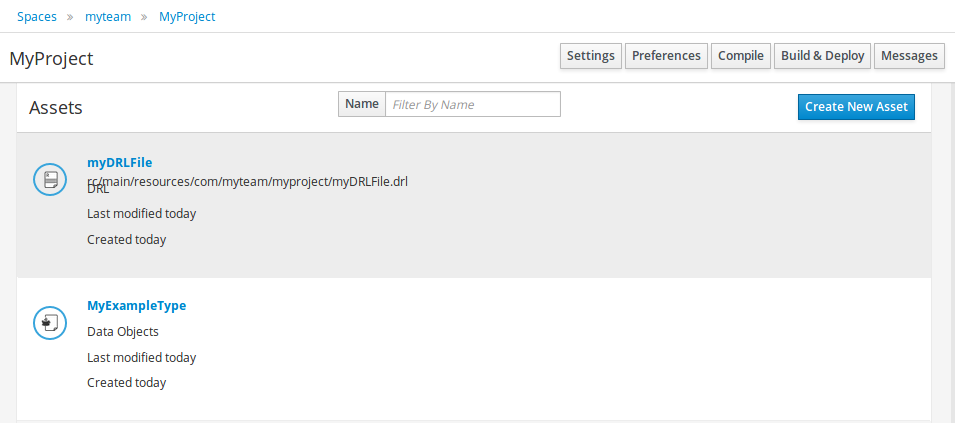

2. Getting started with decision services in Drools

As a business rules developer, you can use Business Central in Drools to design a variety of decision services. Drools provides example projects with example business assets directly in Business Central as a reference. This document describes how to create and test an example traffic violation project based on the Traffic_Violation sample project included in Business Central. This sample project uses a Decision Model and Notation (DMN) model to define driver penalty and suspension rules in a traffic violation decision service. You can follow the steps in this document to create the project and the assets it contains, or open and review the existing Traffic_Violation sample project.

For more information about the DMN components and implementation in Drools, see Designing a decision service using DMN models.

-

Red Hat JBoss Enterprise Application Platform 7.4 is installed. For installation information, see Red Hat JBoss Enterprise Application Platform 7.4 Installation Guide.

-

Drools is installed and configured with KIE Server. For more information see Installing and configuring Drools on Red Hat JBoss EAP 7.4.

-

Drools is running and you can log in to Business Central with the

developerrole. For more information, see Planning a Drools installation.

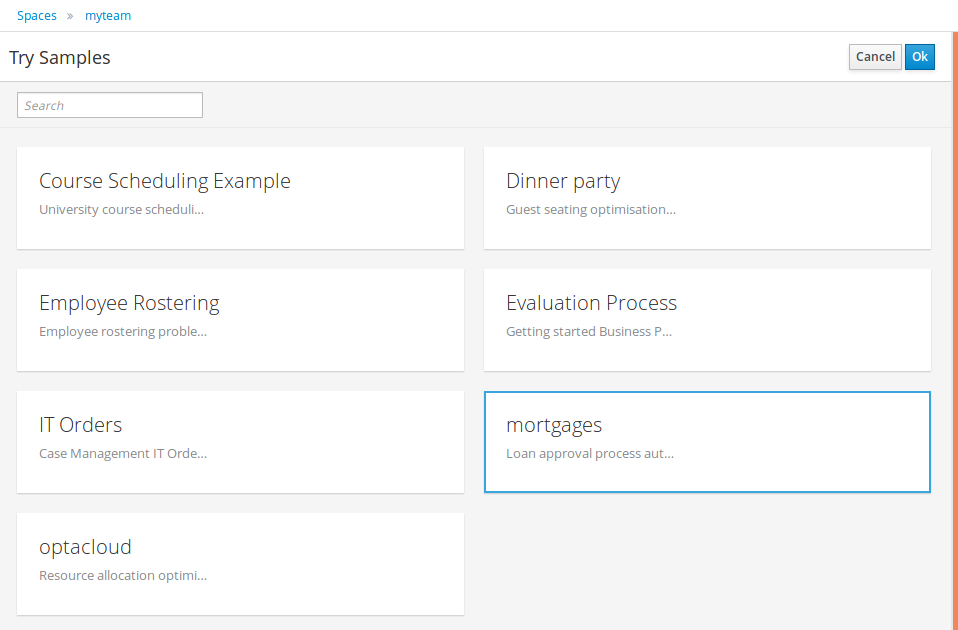

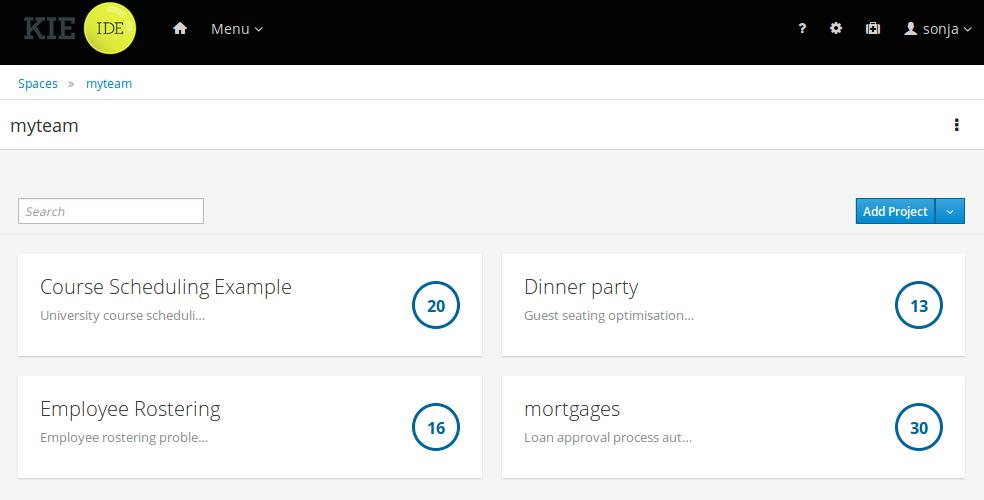

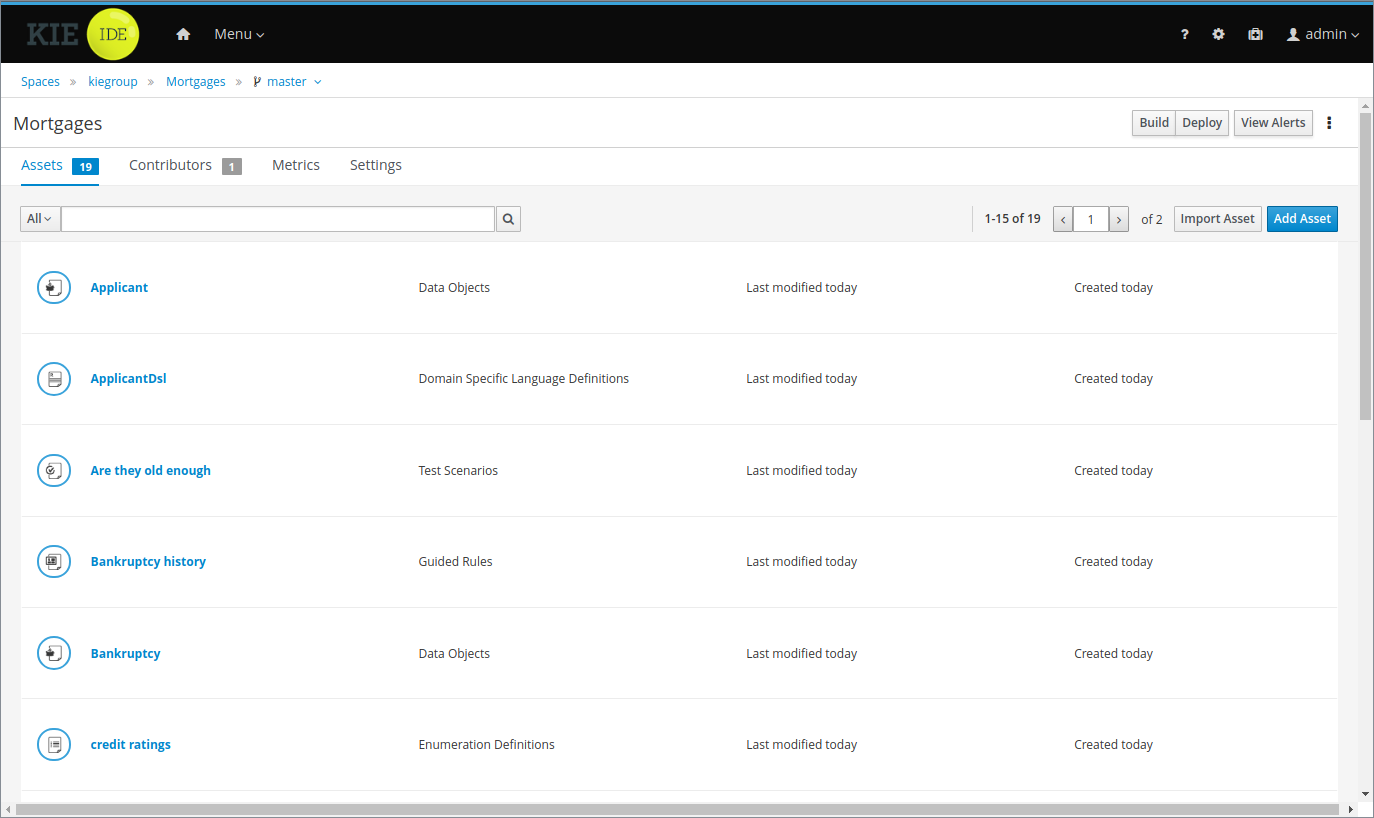

2.1. Sample projects and business assets in Business Central

Business Central contains sample projects with business assets that you can use as a reference for the rules or other assets that you create in your own Drools projects. Each sample project is designed differently to demonstrate decision management or business optimization assets and logic in Drools.

| Red Hat does not provide support for the sample code included in the Drools distribution. |

The following sample projects are available in Business Central:

-

Course_Scheduling: (Business optimization) Course scheduling and curriculum decision process. Assigns lectures to rooms and determines a student’s curriculum based on factors such as course conflicts and class room capacity.

-

Dinner_Party: (Business optimization) Guest seating optimization using guided decision tables. Assigns guest seating based on each guest’s job type, political beliefs, and known relationships.

-

Employee_Rostering: (Business optimization) Employee rostering optimization using decision and solver assets. Assigns employees to shifts based on skills.

-

Evaluation_Process: (Process automation) Evaluation process using business process assets. Evaluates employees based on performance.

-

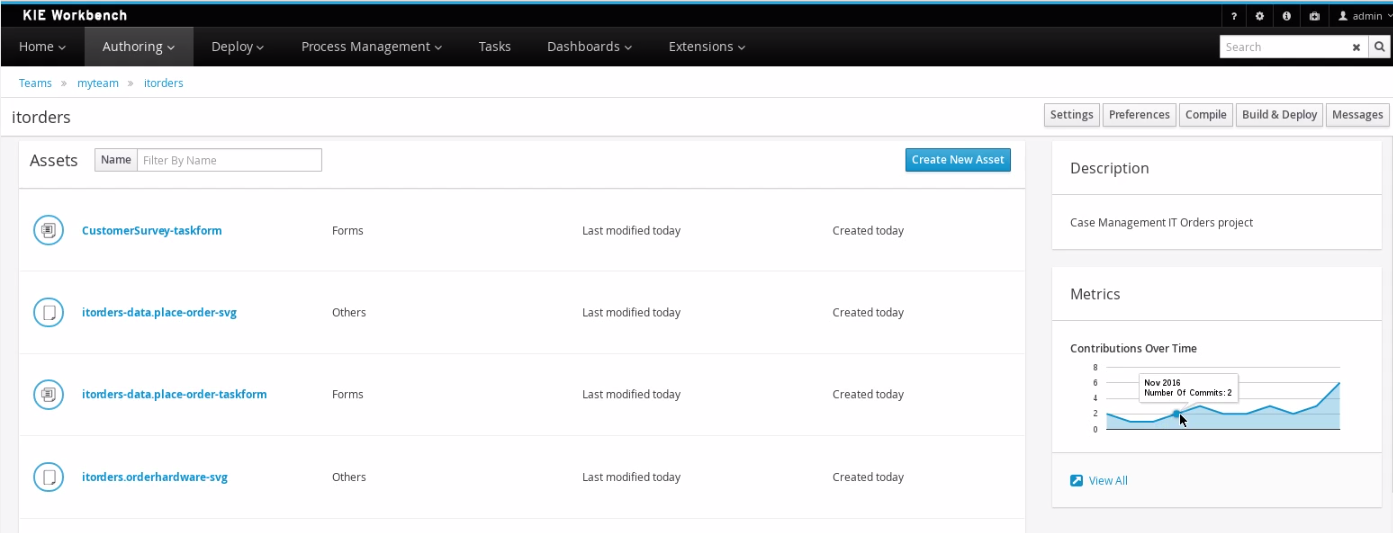

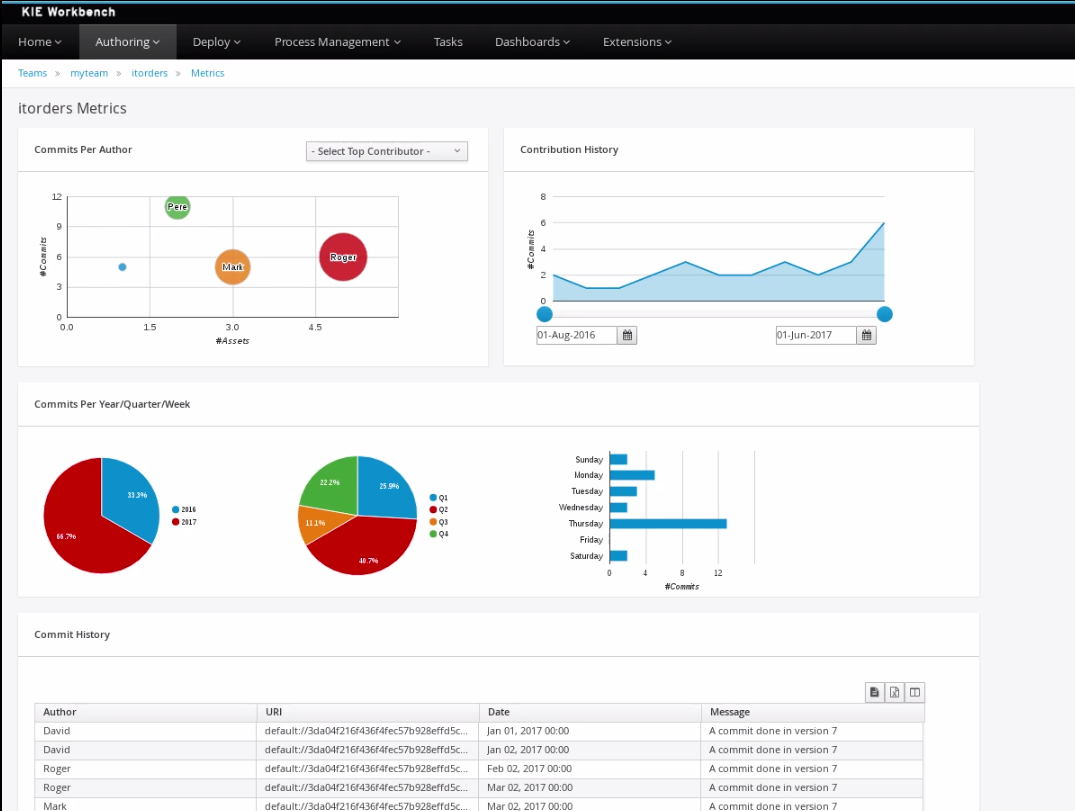

IT_Orders: (Process automation and case management) Ordering case using business process and case management assets. Places an IT hardware order based on needs and approvals.

-

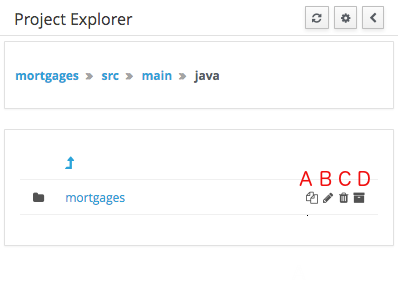

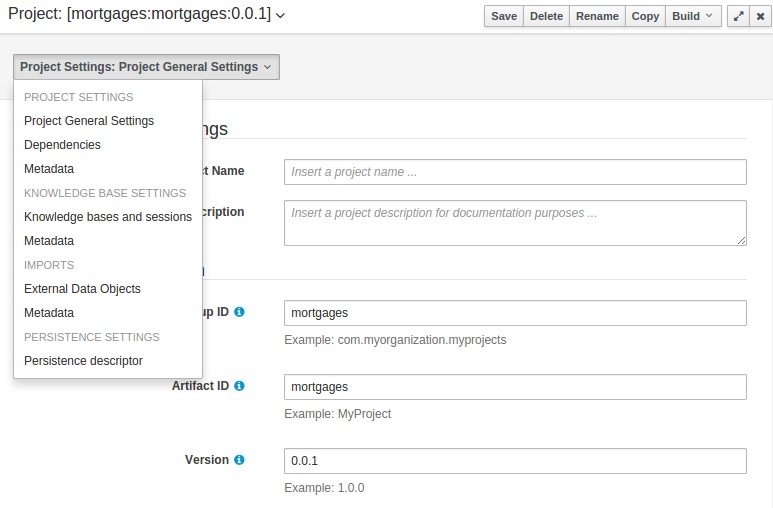

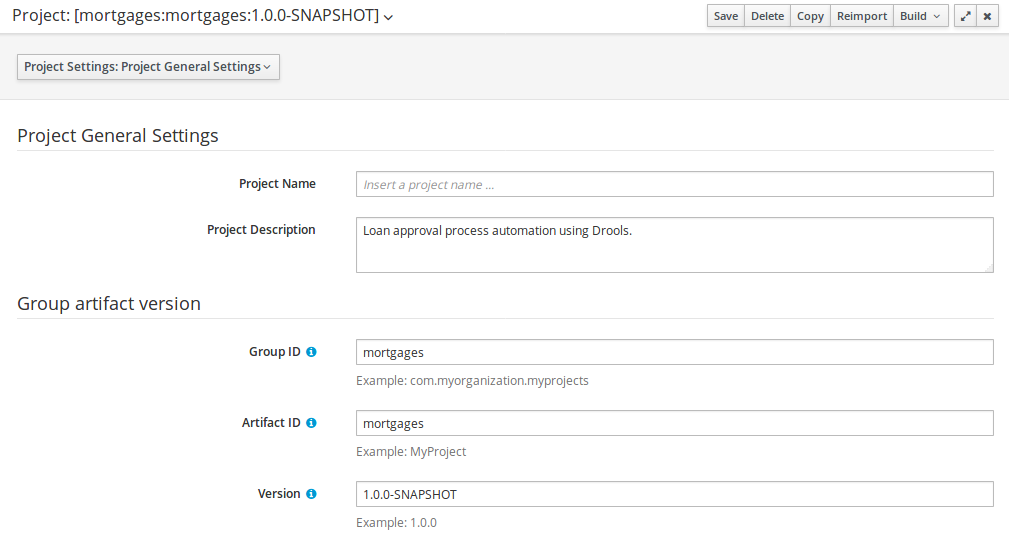

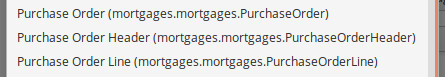

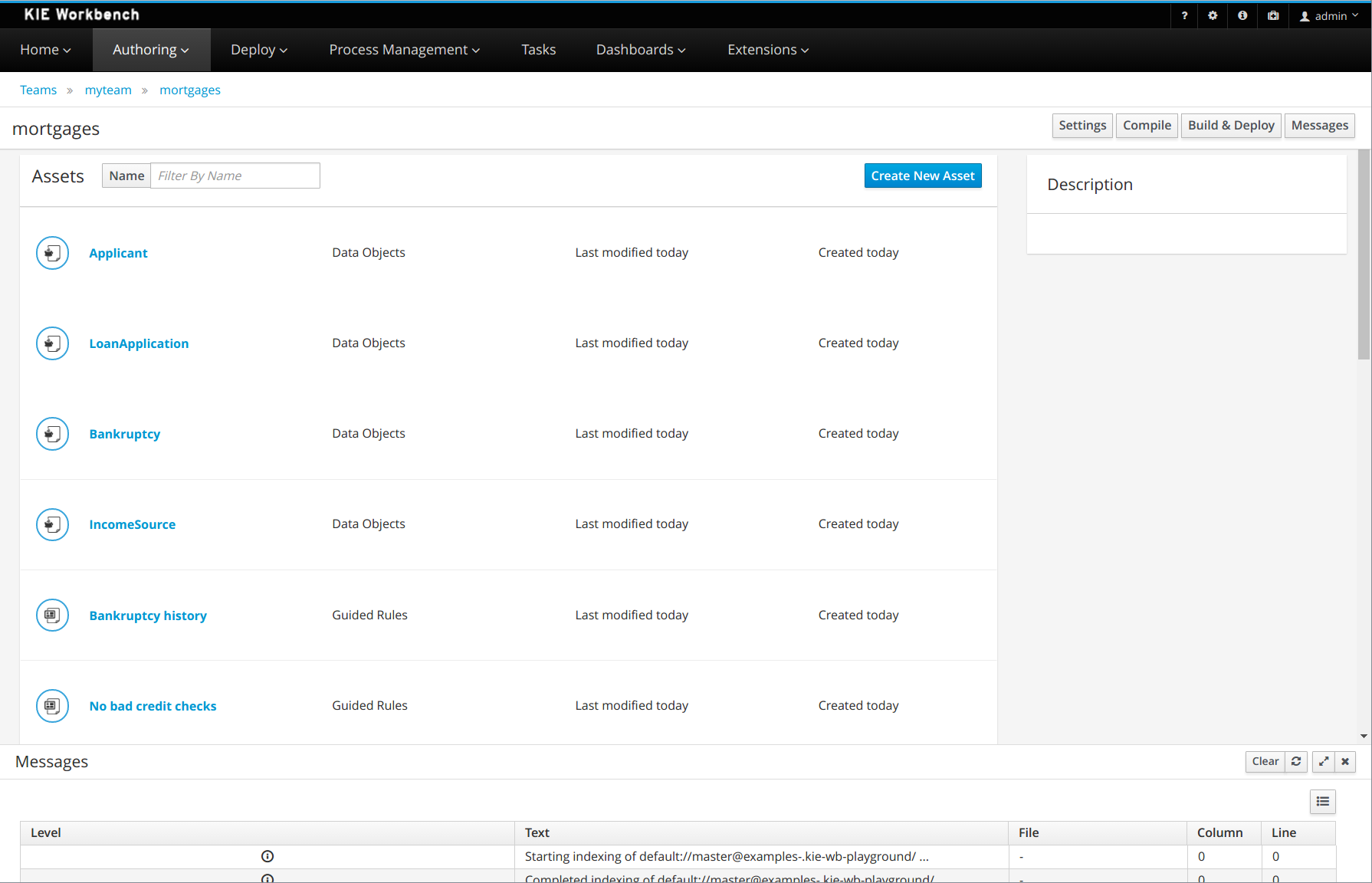

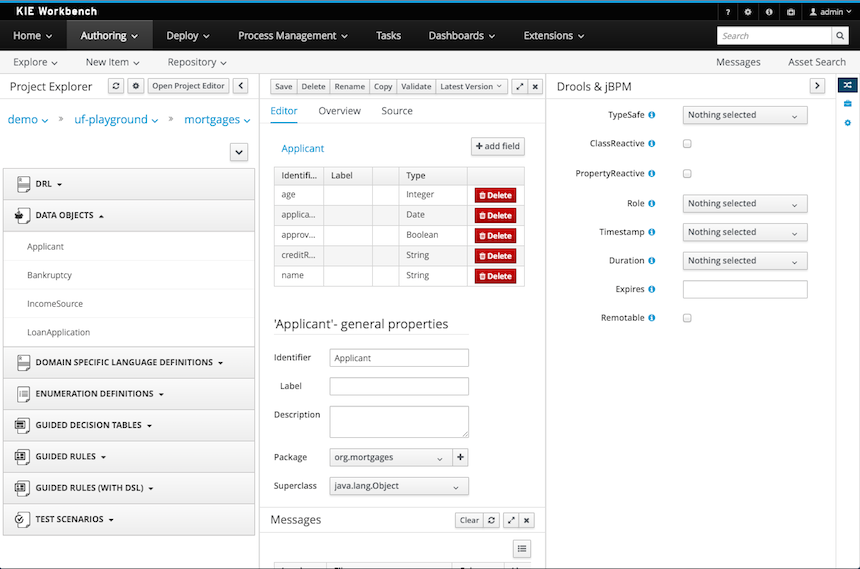

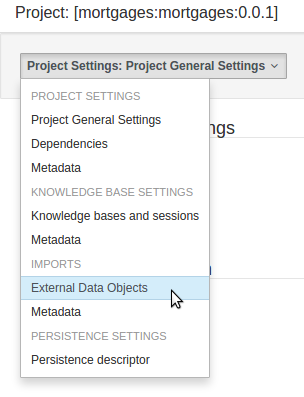

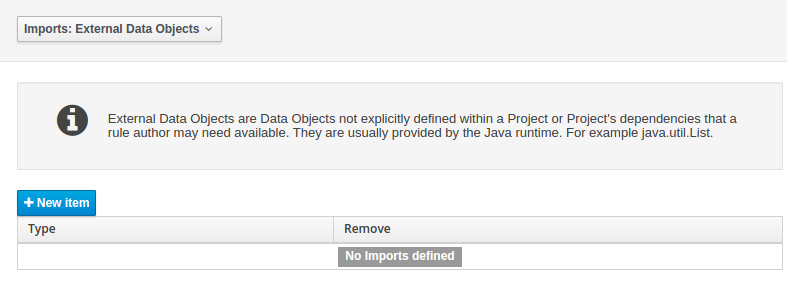

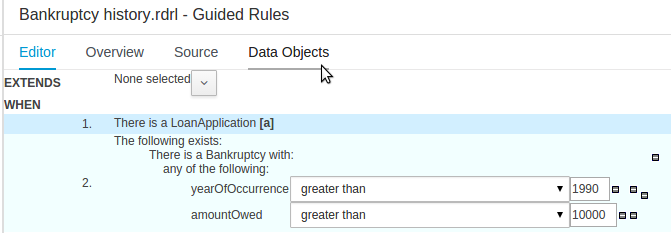

Mortgages: (Decision management with rules) Loan approval process using rule-based decision assets. Determines loan eligibility based on applicant data and qualifications.

-

Mortgage_Process: (Process automation) Loan approval process using business process and decision assets. Determines loan eligibility based on applicant data and qualifications.

-

OptaCloud: (Business optimization) Resource allocation optimization using decision and solver assets. Assigns processes to computers with limited resources.

-

Traffic_Violation: (Decision management with DMN) Traffic violation decision service using a Decision Model and Notation (DMN) model. Determines driver penalty and suspension based on traffic violations.

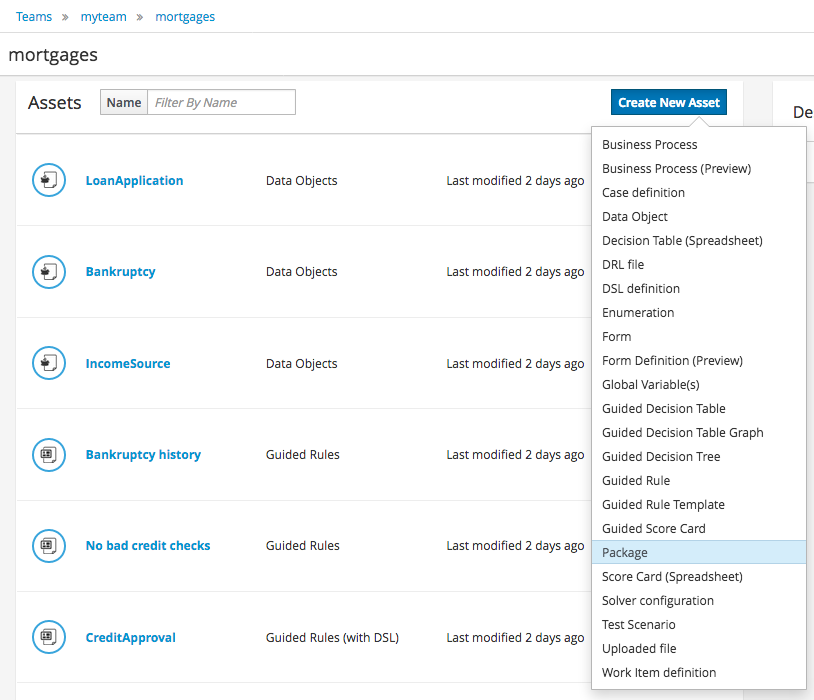

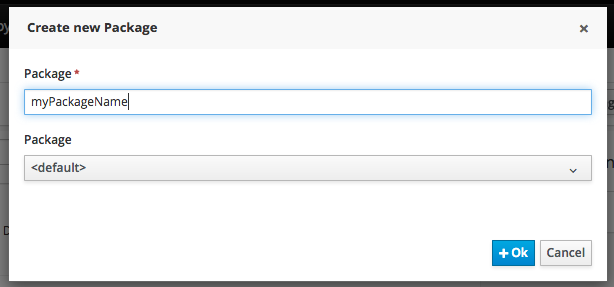

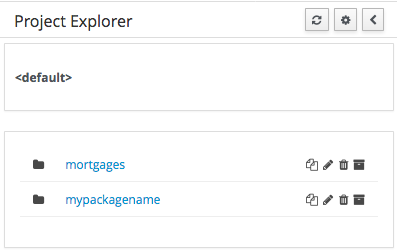

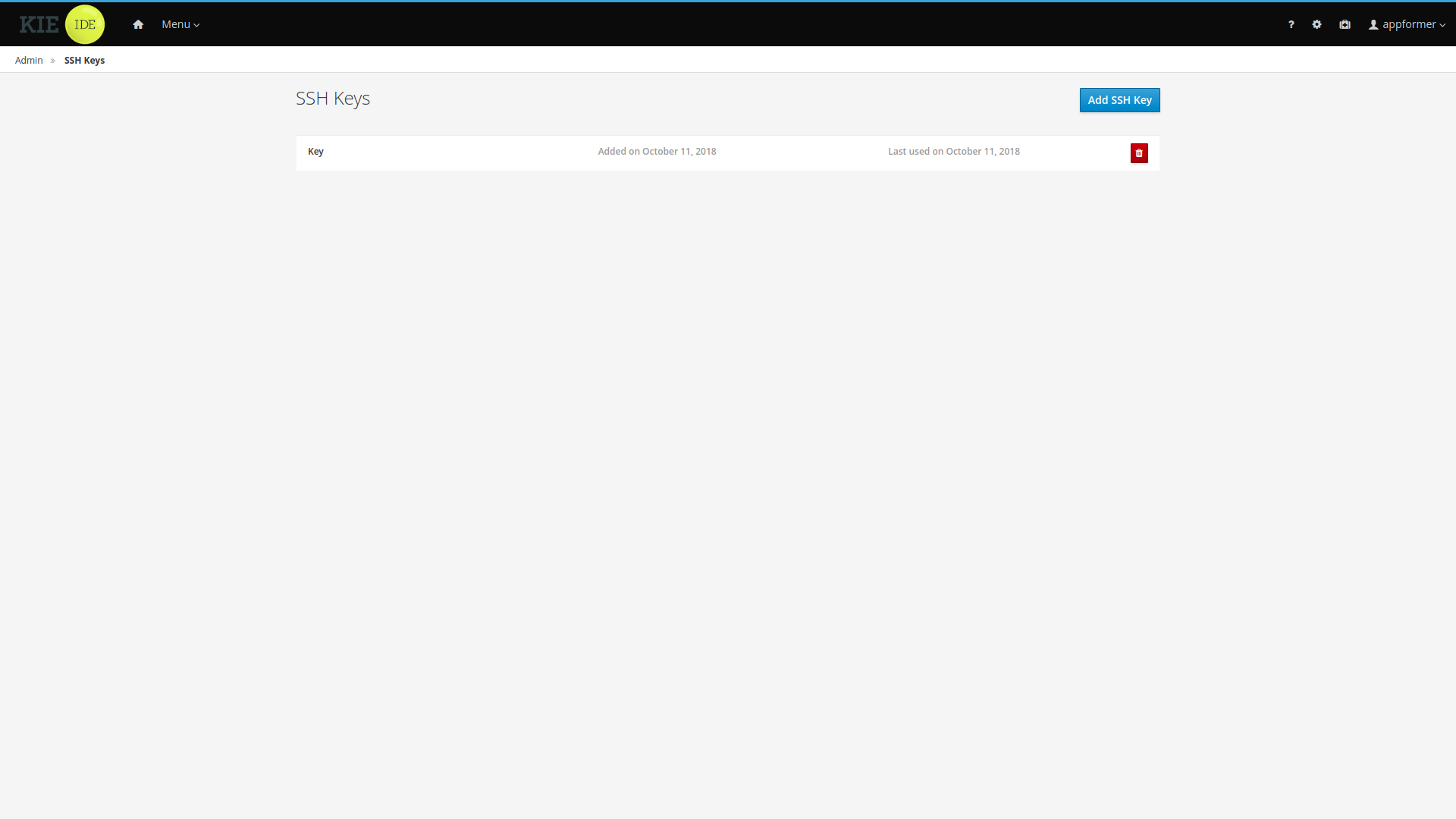

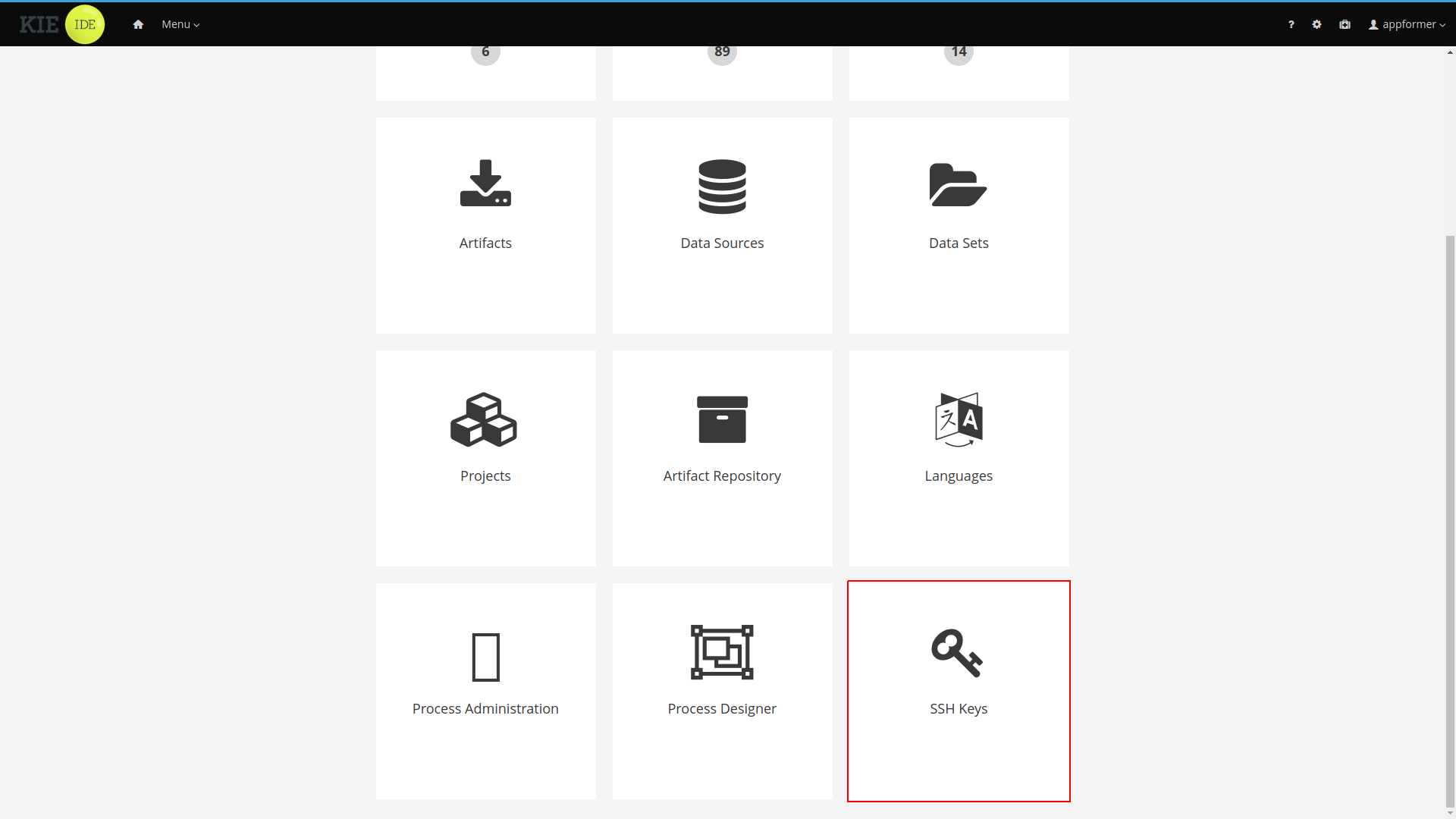

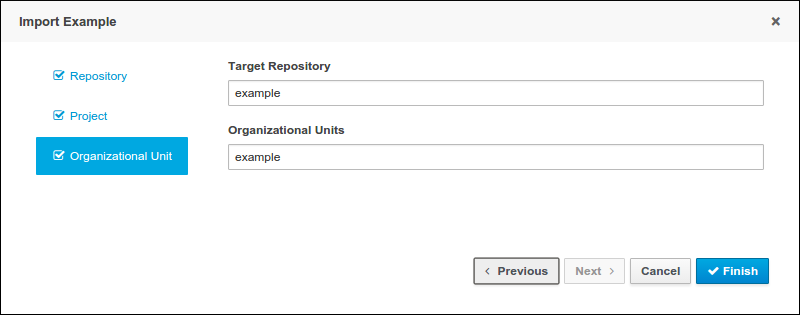

2.1.1. Accessing sample projects and business assets in Business Central

You can use the sample projects in Business Central to explore business assets as a reference for the rules or other assets that you create in your own Drools projects.

-

Business Central is installed and running. For installation options, see Planning a Drools installation.

-

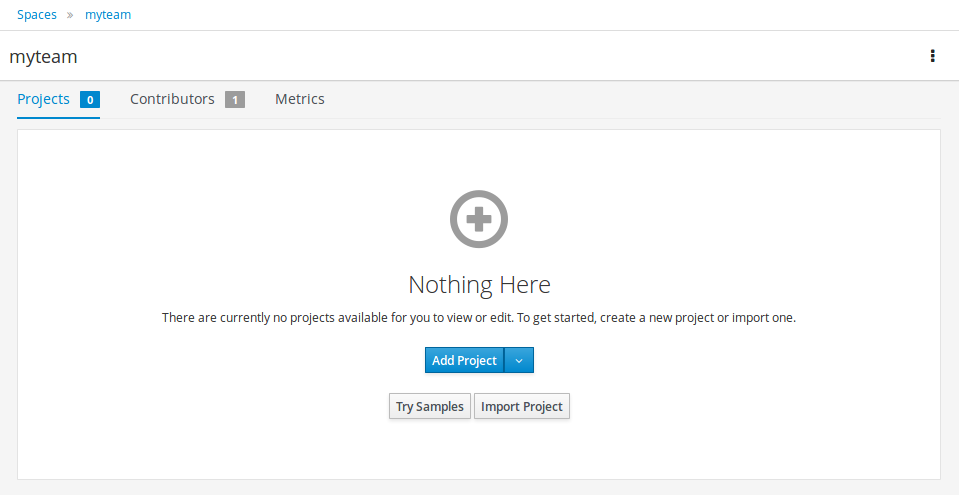

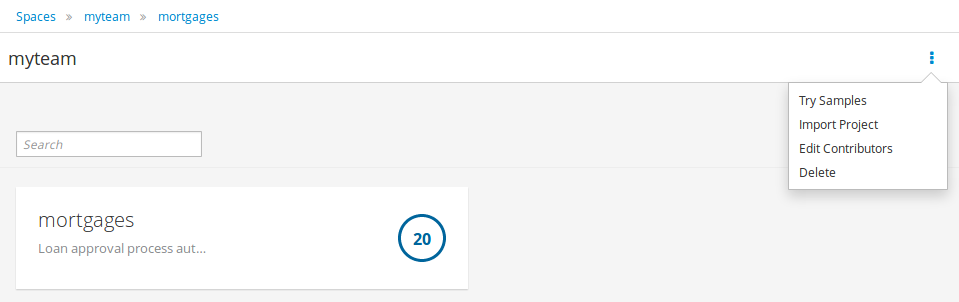

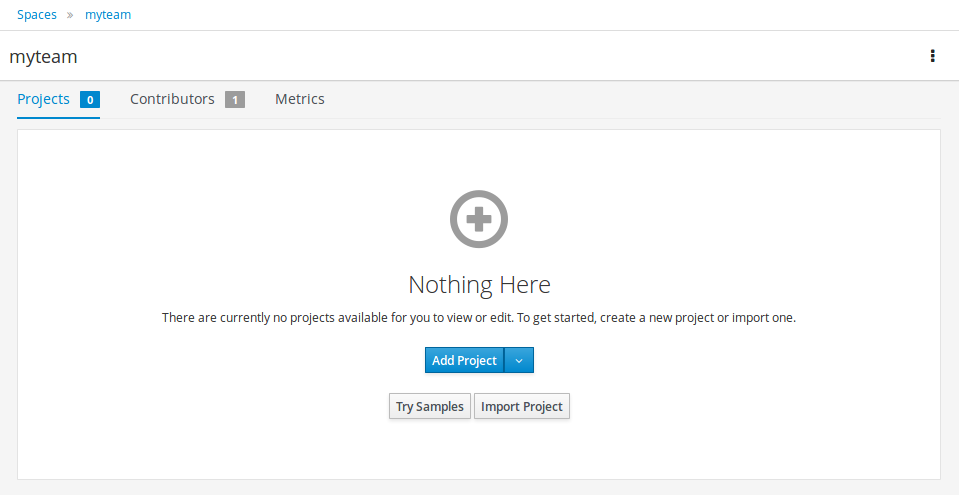

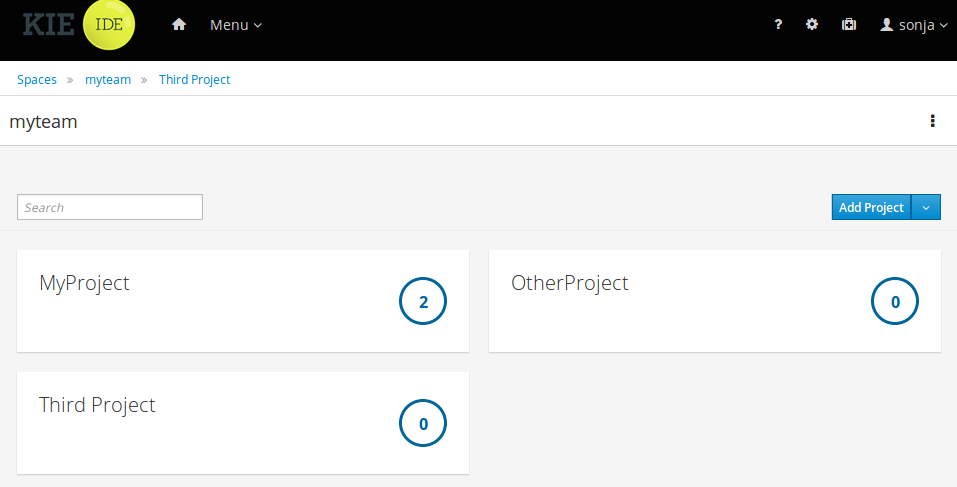

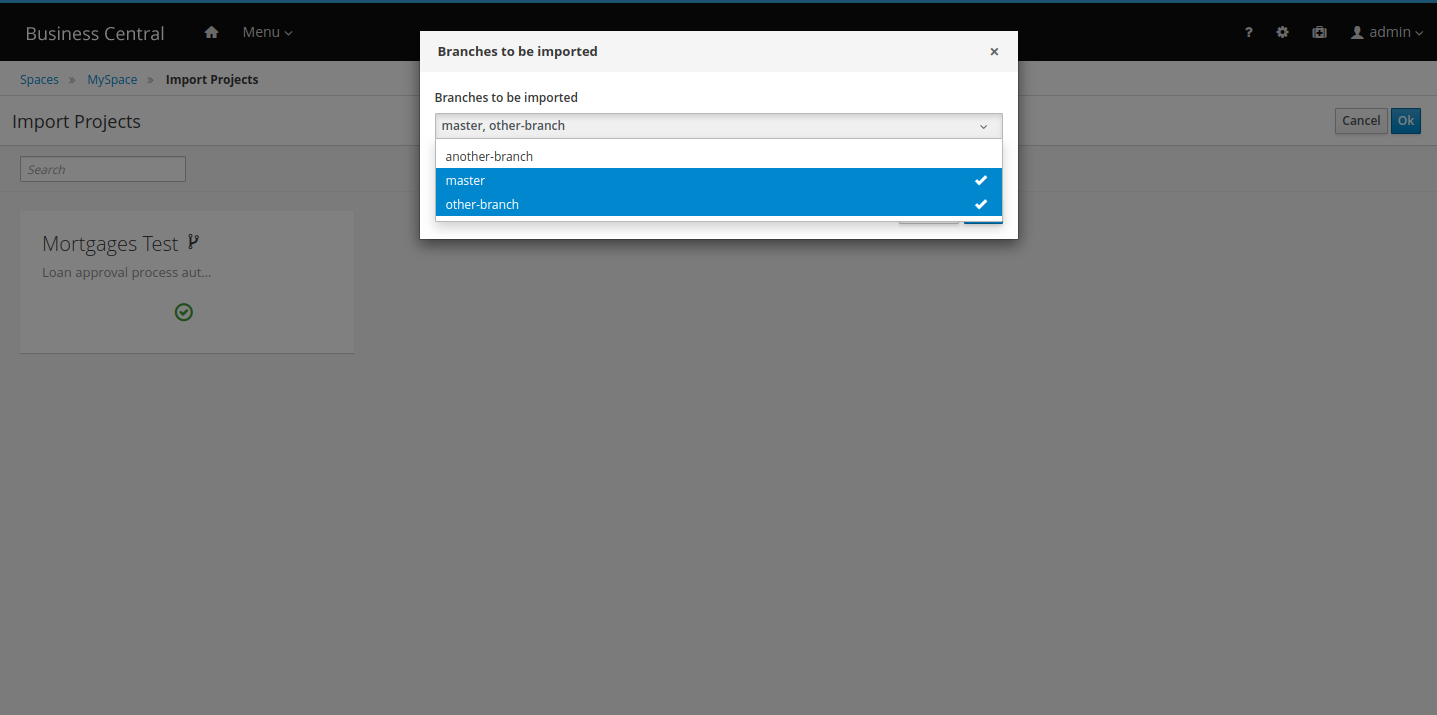

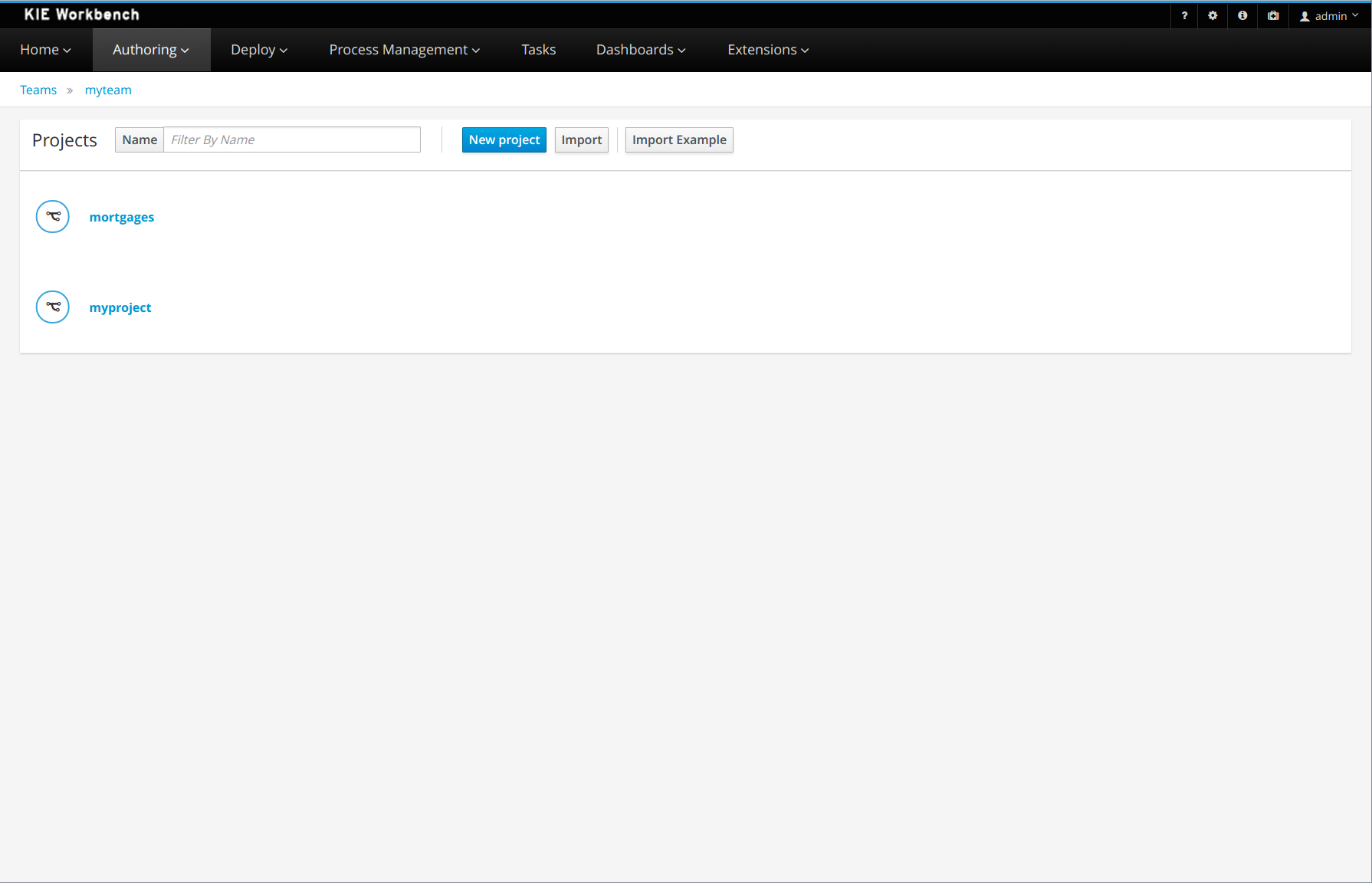

In Business Central, go to Menu → Design → Projects. If there are existing projects, you can access the samples by clicking the MySpace default space and selecting Try Samples from the Add Project drop-down menu. If there are no existing projects, click Try samples.

-

Review the descriptions for each sample project to determine which project you want to explore. Each sample project is designed differently to demonstrate decision management or business optimization assets and logic in Drools.

-

Select one or more sample projects and click Ok to add the projects to your space.

-

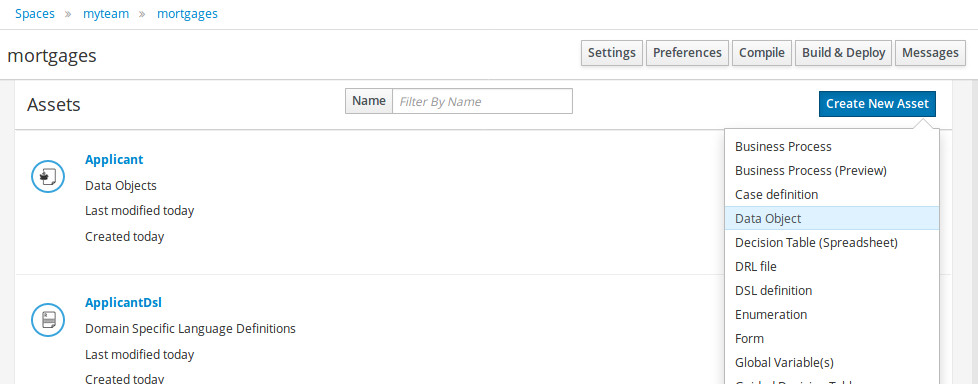

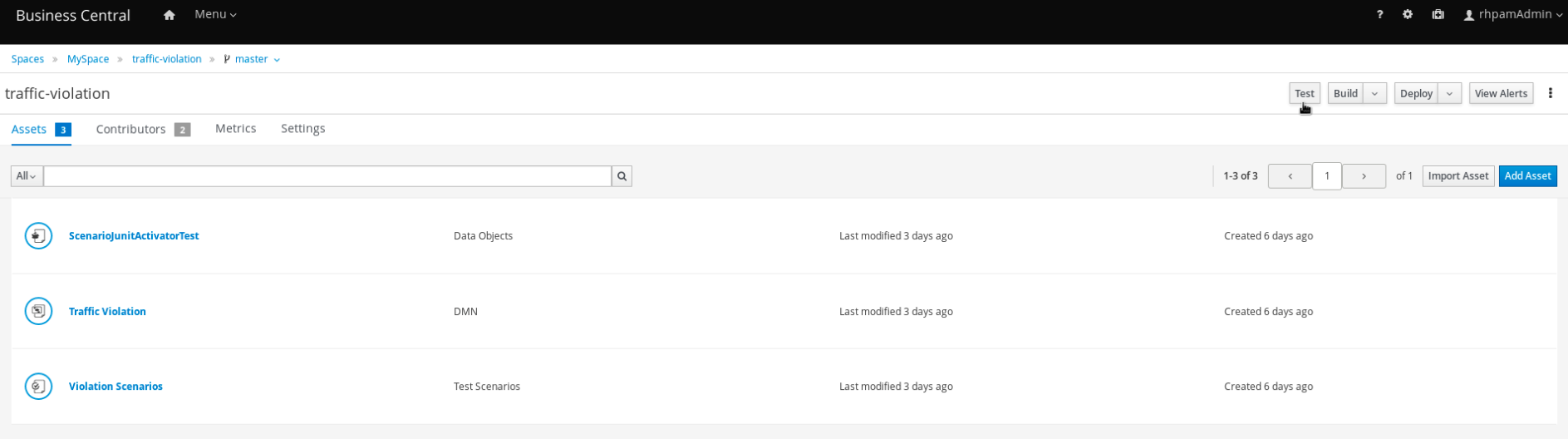

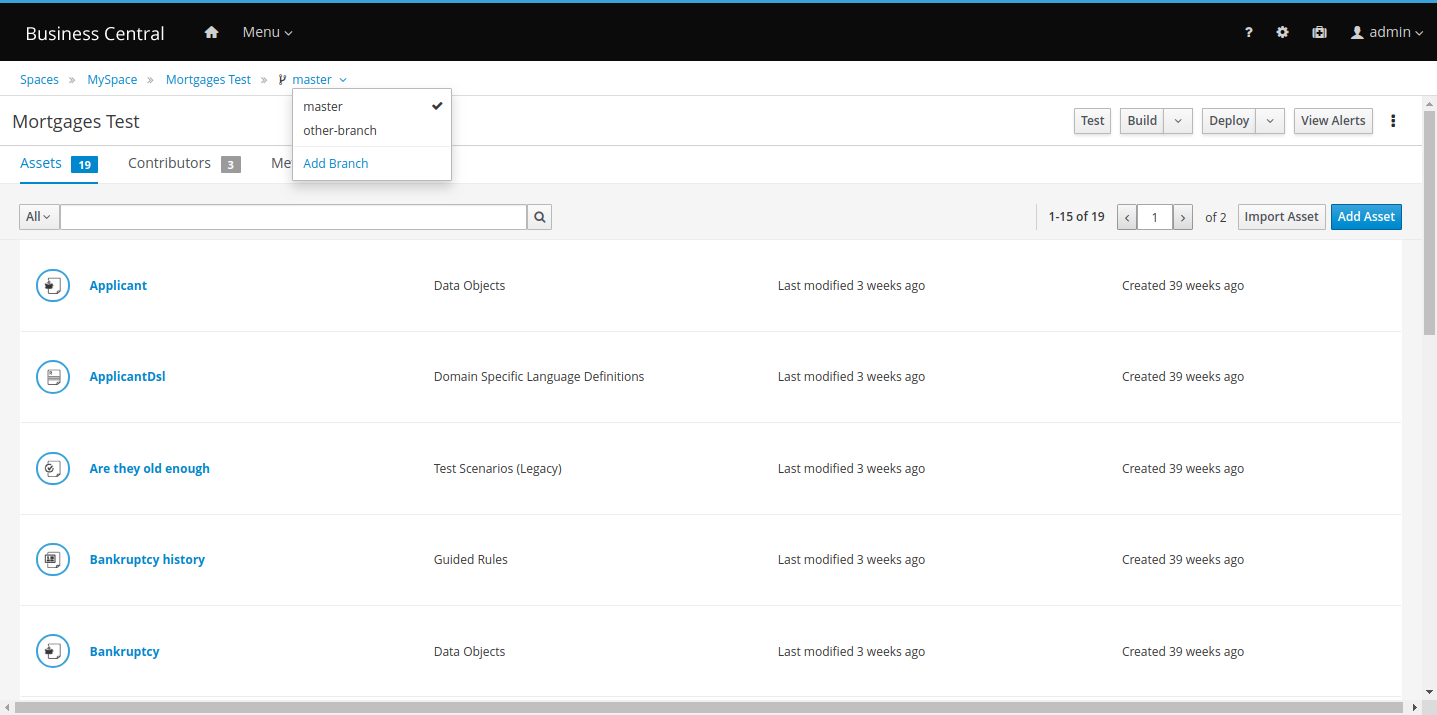

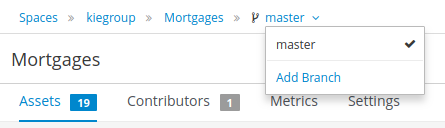

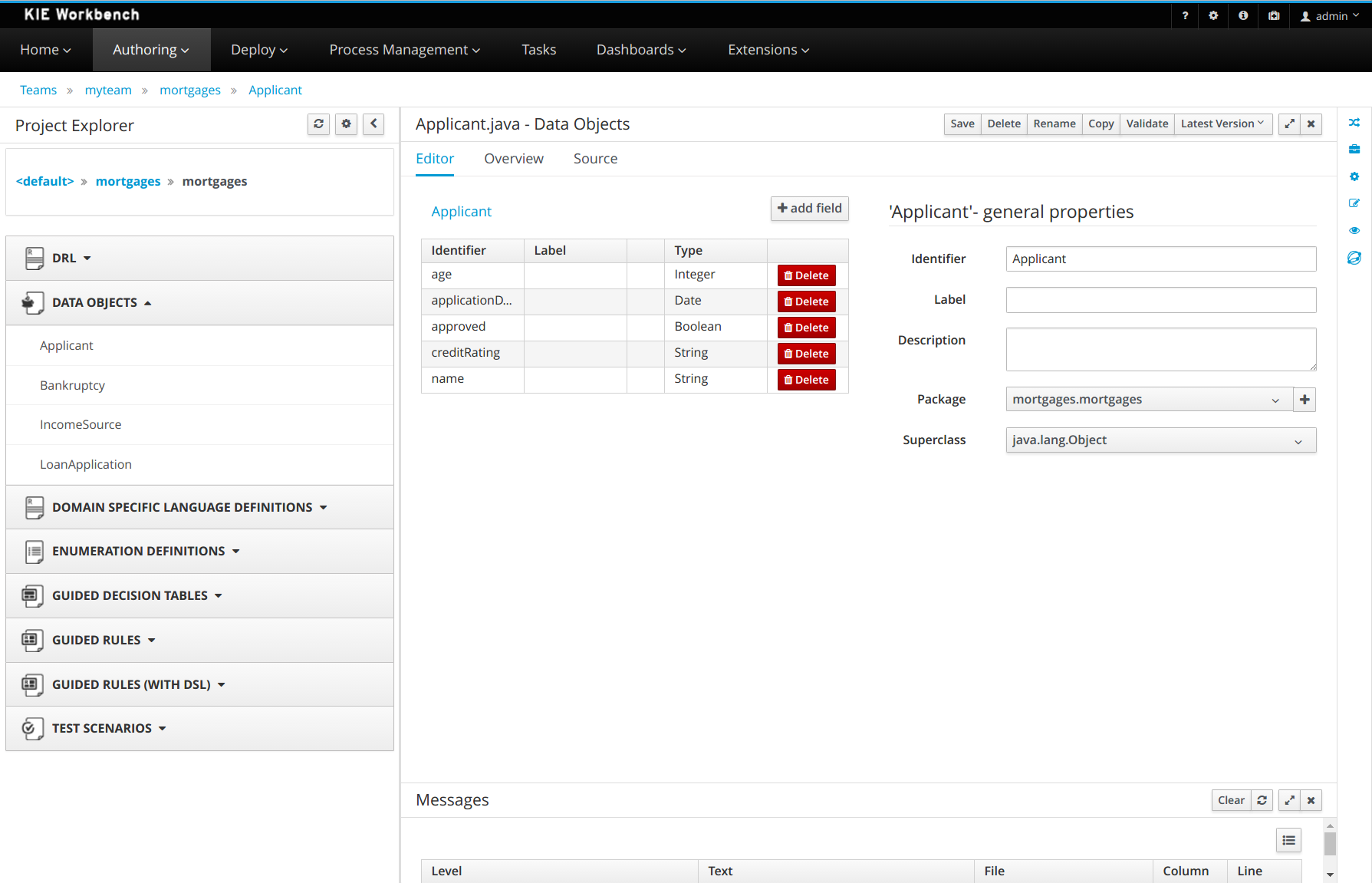

In the Projects page of your space, select one of the sample projects to view the assets for that project.

-

Select each asset to explore how the project is designed to achieve the specified goal or workflow. Some of the sample projects contain more than one page of assets. Click the left or right arrows in the upper-right corner to view the full asset list.

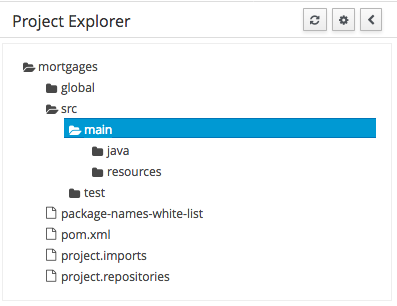

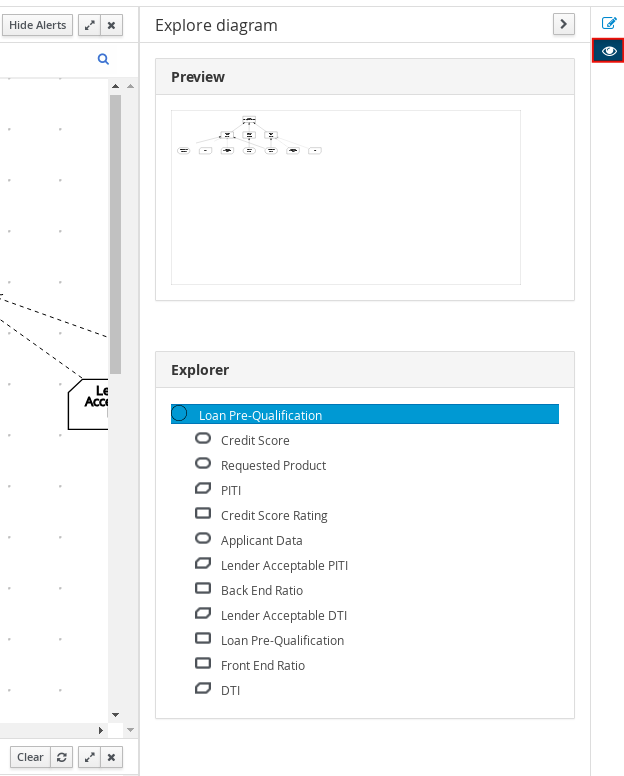

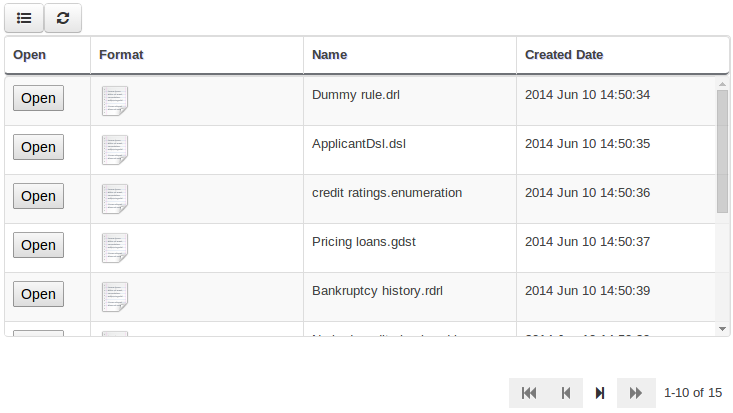

Figure 1. Asset page selection

Figure 1. Asset page selection -

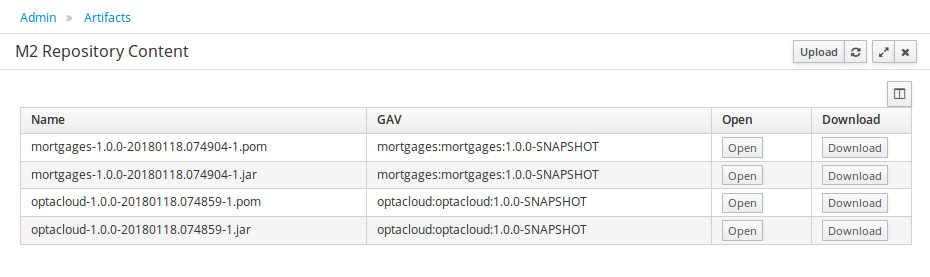

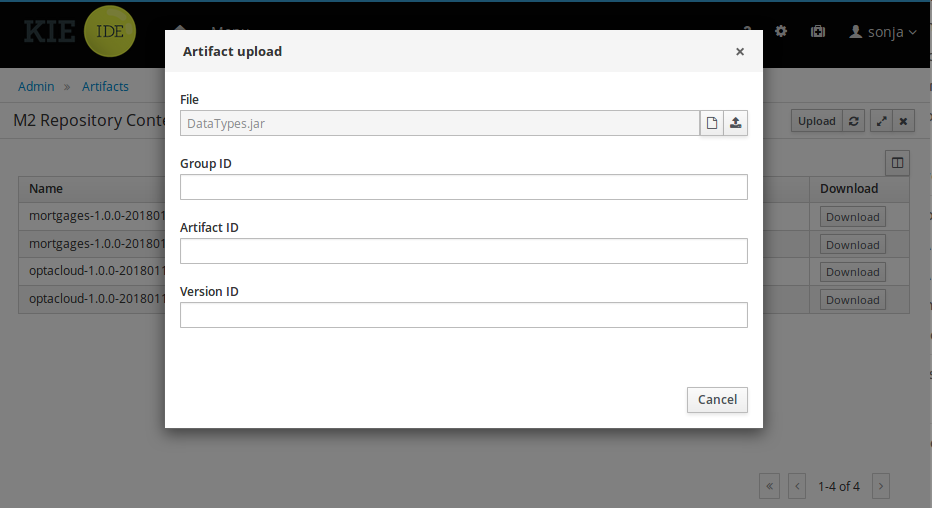

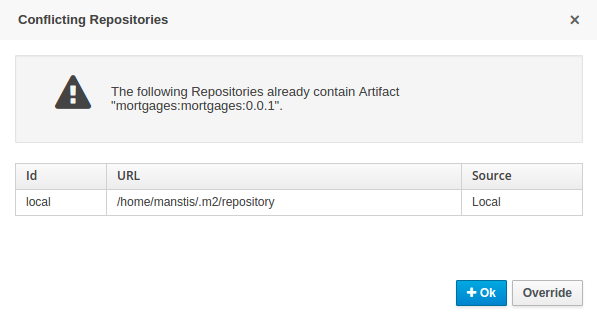

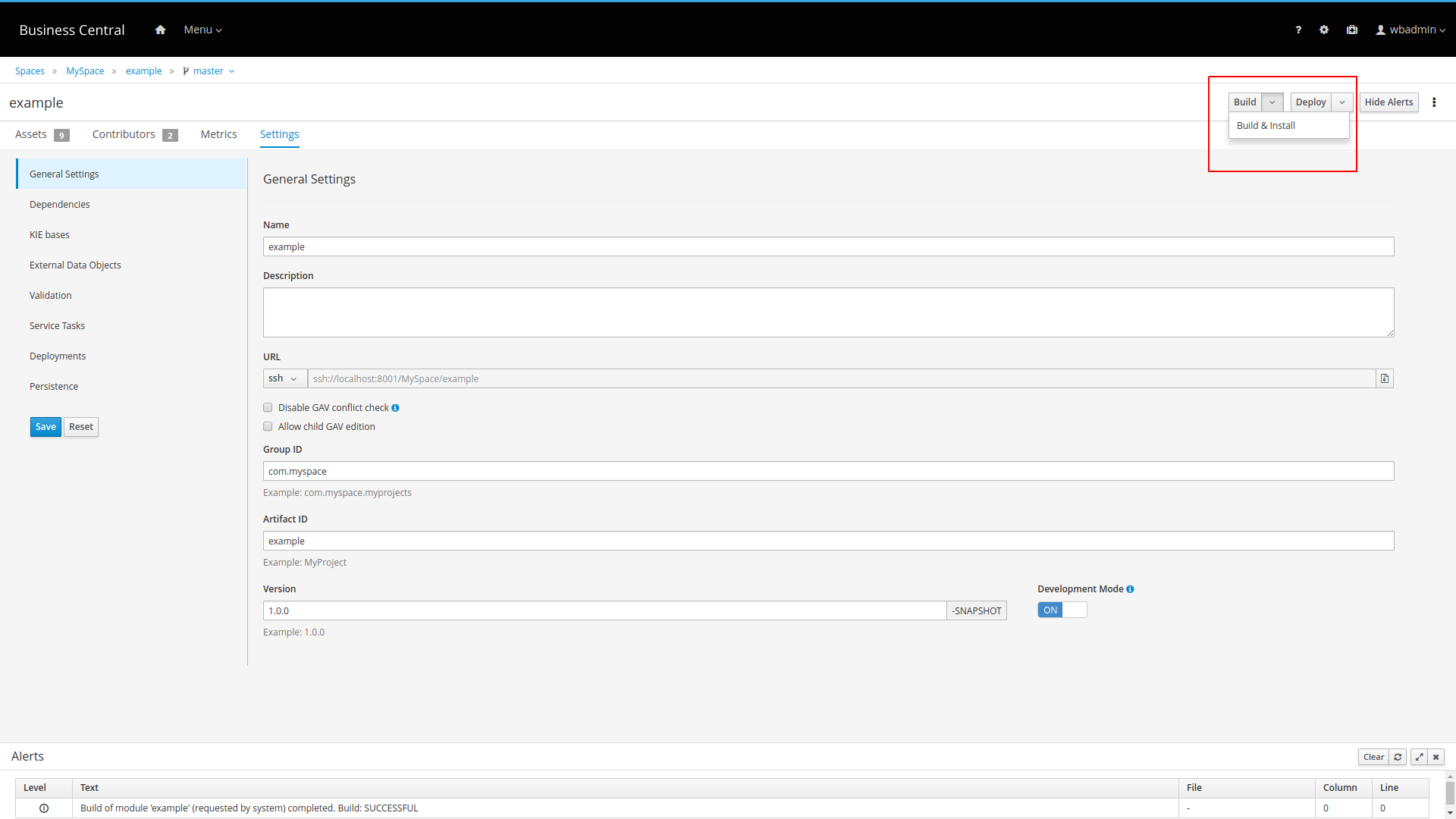

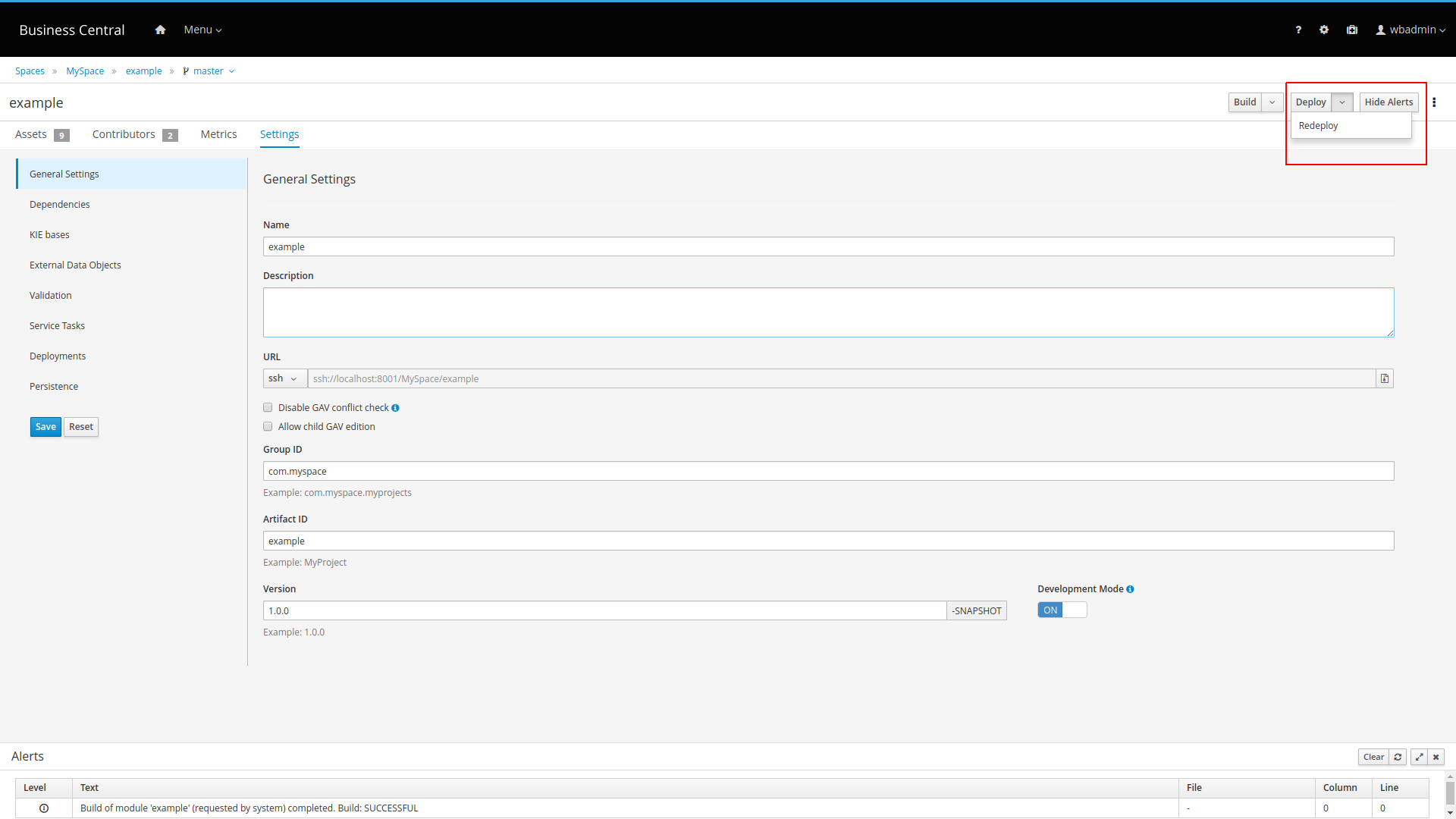

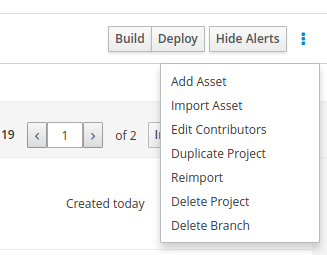

In the upper-right corner of the project Assets page, click Build to build the sample project or Deploy to build the project and then deploy it to KIE Server.

You can also select the Build & Install option to build the project and publish the KJAR file to the configured Maven repository without deploying to a KIE Server. In a development environment, you can click Deploy to deploy the built KJAR file to a KIE Server without stopping any running instances (if applicable), or click Redeploy to deploy the built KJAR file and replace all instances. The next time you deploy or redeploy the built KJAR, the previous deployment unit (KIE container) is automatically updated in the same target KIE Server. In a production environment, the Redeploy option is disabled and you can click Deploy only to deploy the built KJAR file to a new deployment unit (KIE container) on a KIE Server.

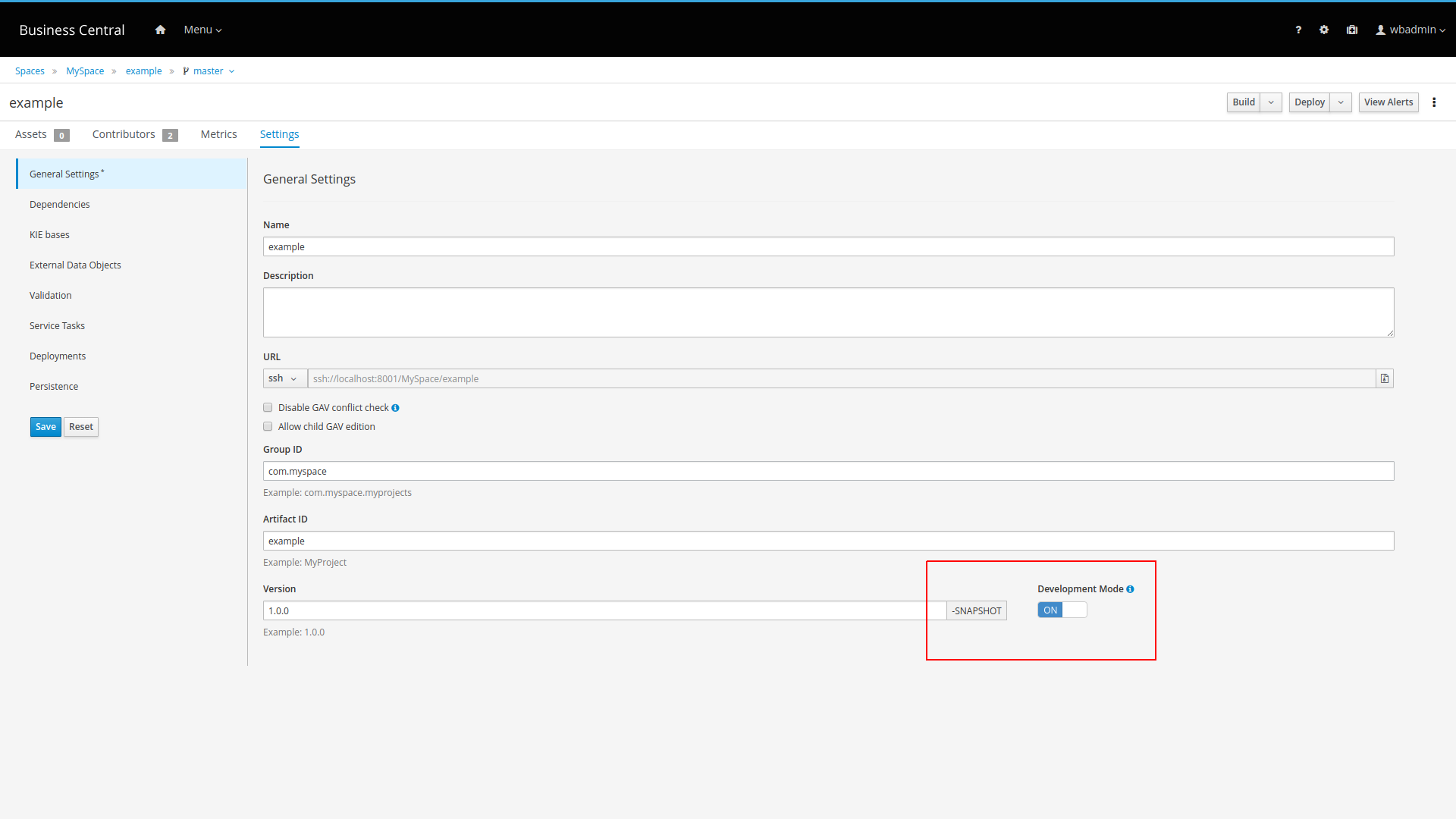

To configure the KIE Server environment mode, set the

org.kie.server.modesystem property toorg.kie.server.mode=developmentororg.kie.server.mode=production. To configure the deployment behavior for a corresponding project in Business Central, go to project Settings → General Settings → Version, toggle the Development Mode option, and click Save. By default, KIE Server and all new projects in Business Central are in development mode. You cannot deploy a project with Development Mode turned on or with a manually addedSNAPSHOTversion suffix to a KIE Server that is in production mode.To review project deployment details, click View deployment details in the deployment banner at the top of the screen or in the Deploy drop-down menu. This option directs you to the Menu → Deploy → Execution Servers page.

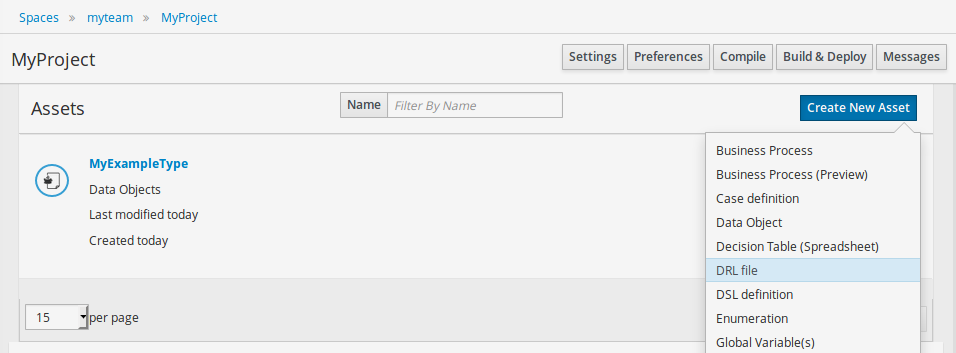

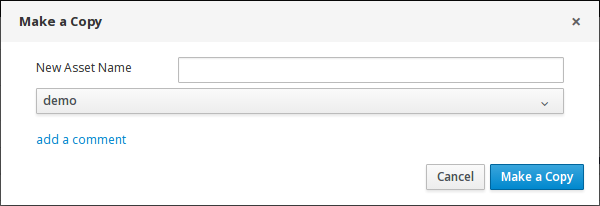

2.2. Creating the traffic violations project in Business Central

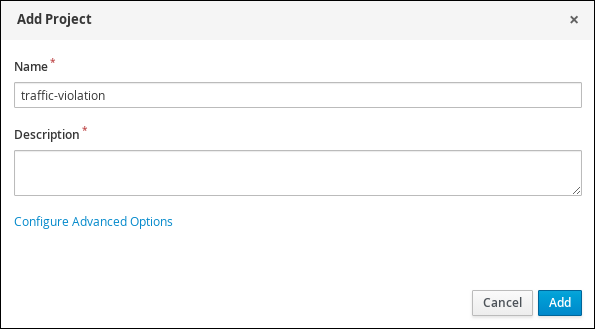

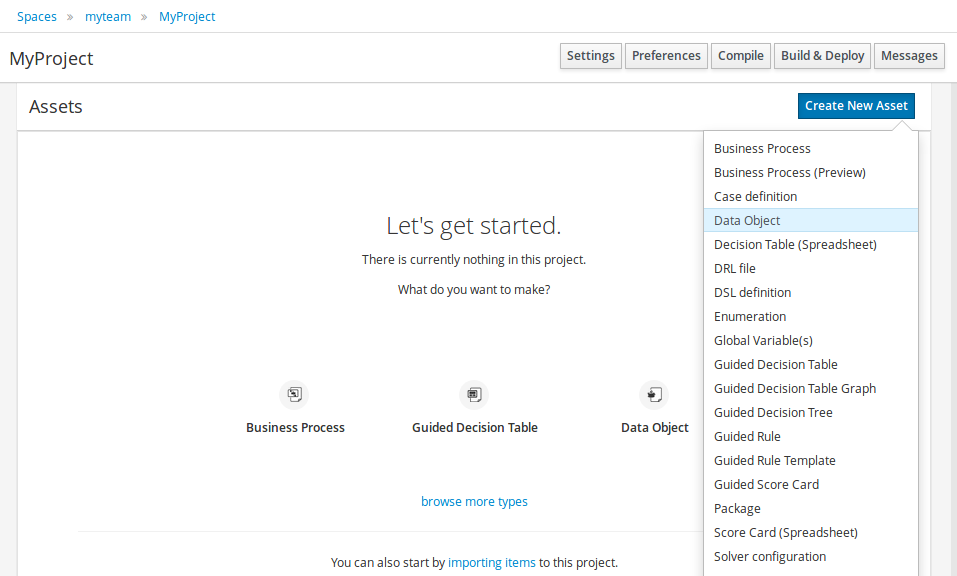

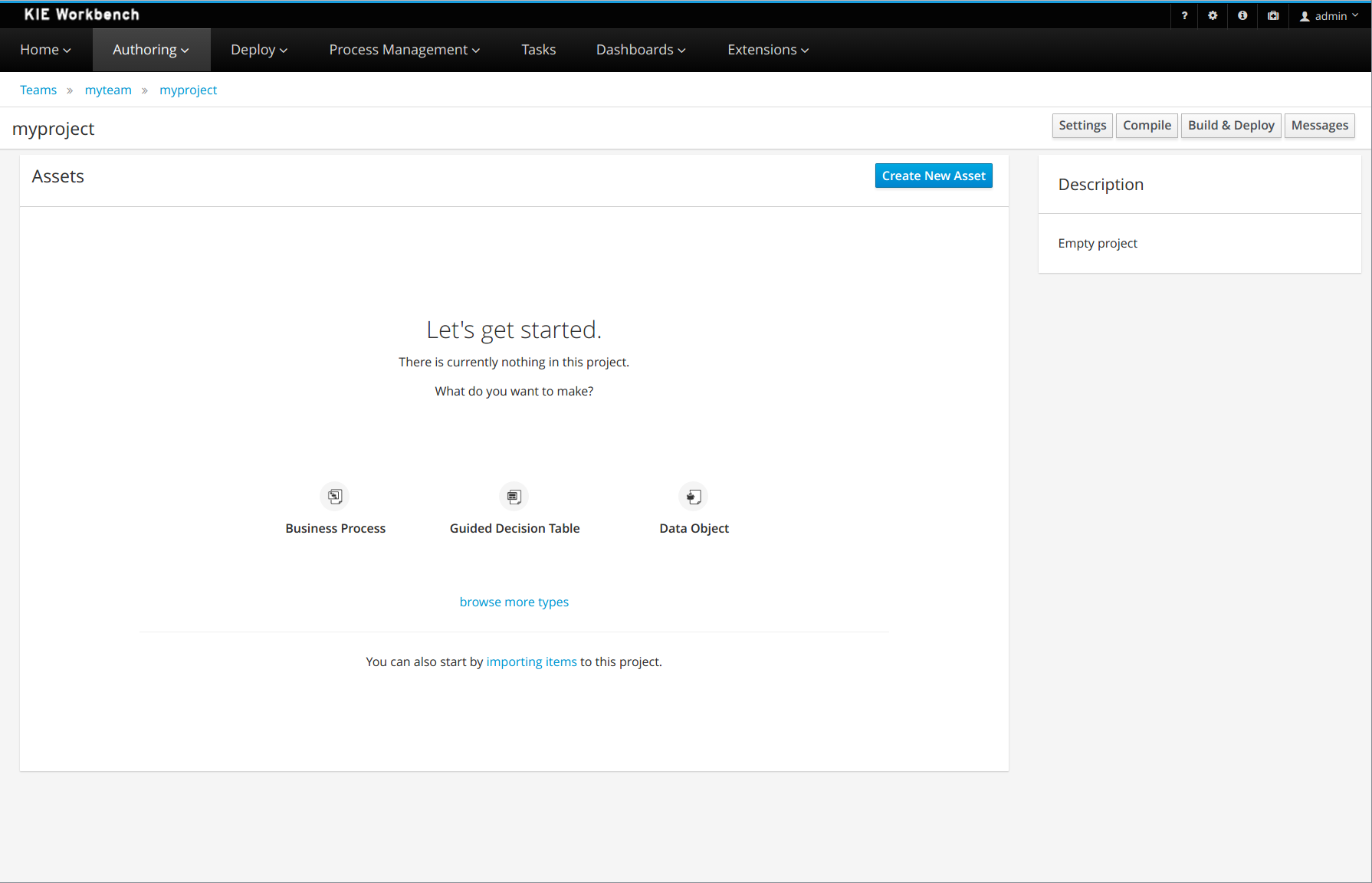

For this example, create a new project called traffic-violation. A project is a container for assets such as data objects, DMN assets, and test scenarios. This example project that you are creating is similar to the existing Traffic_Violation sample project in Business Central.

-

In Business Central, go to Menu → Design → Projects.

Drools provides a default space called MySpace. You can use the default space to create and test example projects.

-

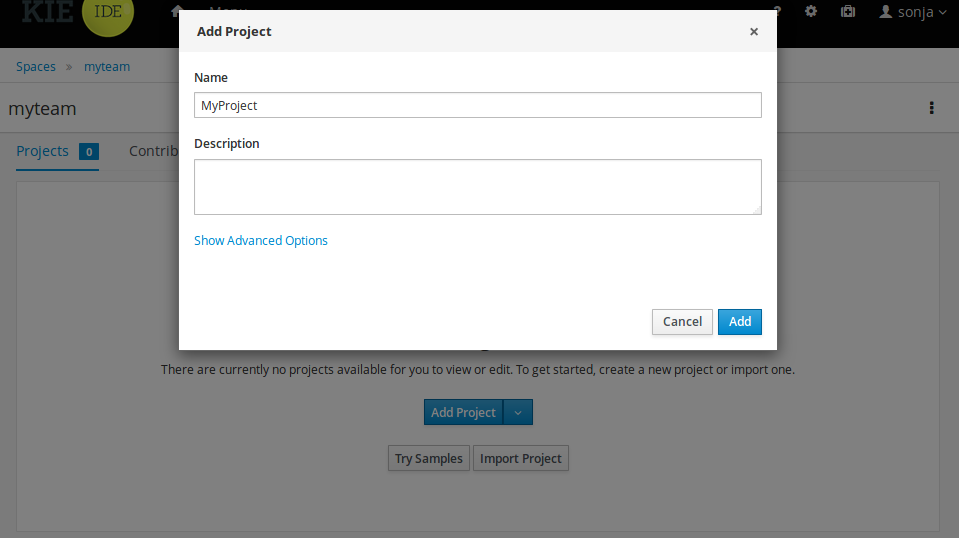

Click Add Project.

-

Enter

traffic-violationin the Name field. -

Click Add.

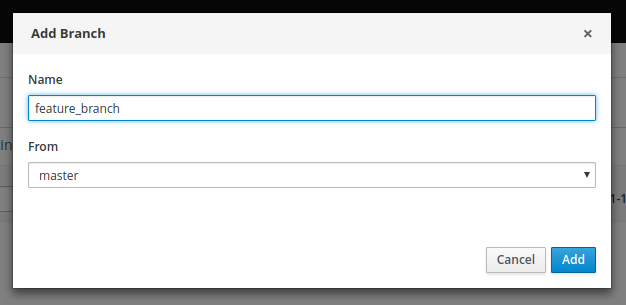

Figure 2. Add Project window

Figure 2. Add Project windowThe Assets view of the project opens.

2.3. Decision Model and Notation (DMN)

Decision Model and Notation (DMN) is a standard established by the Object Management Group (OMG) for describing and modeling operational decisions. DMN defines an XML schema that enables DMN models to be shared between DMN-compliant platforms and across organizations so that business analysts and business rules developers can collaborate in designing and implementing DMN decision services. The DMN standard is similar to and can be used together with the Business Process Model and Notation (BPMN) standard for designing and modeling business processes.

For more information about the background and applications of DMN, see the OMG Decision Model and Notation specification.

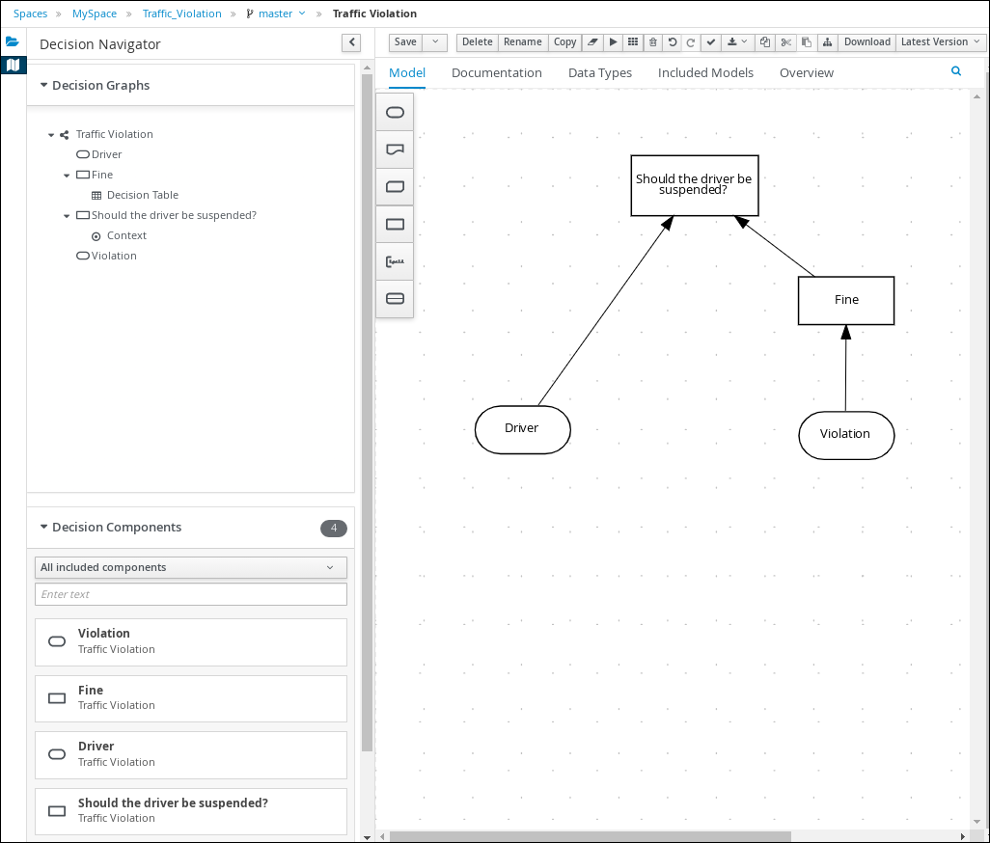

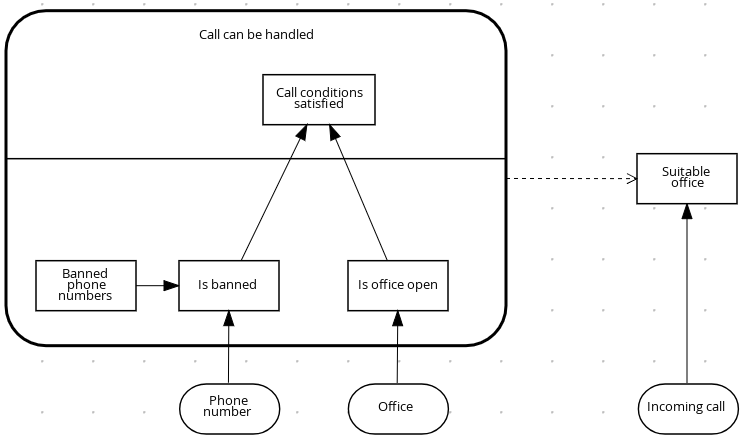

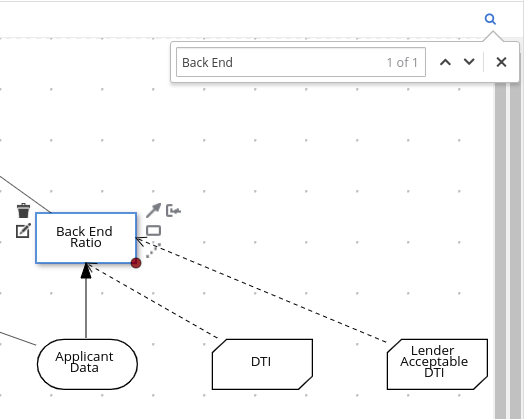

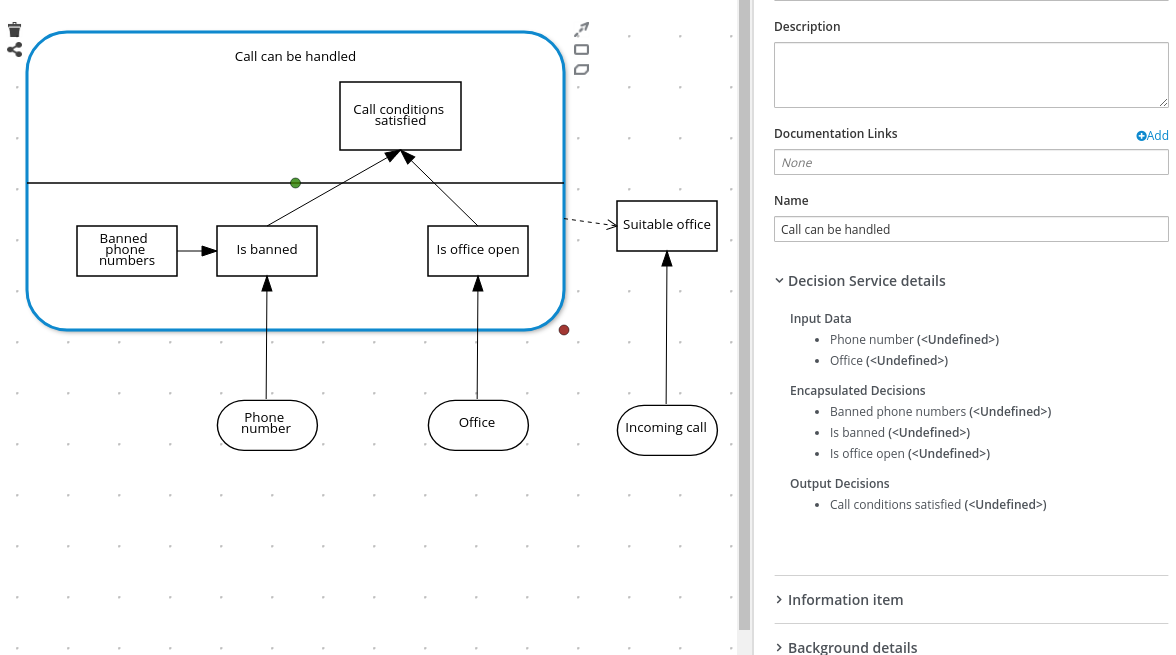

2.3.1. Creating the traffic violations DMN decision requirements diagram (DRD)

A decision requirements diagram (DRD) is a visual representation of your DMN model. Use the DMN designer in Business Central to design the DRD for the traffic violations project and to define the decision logic of the DRD components.

-

You have created the traffic violations project in Business Central.

-

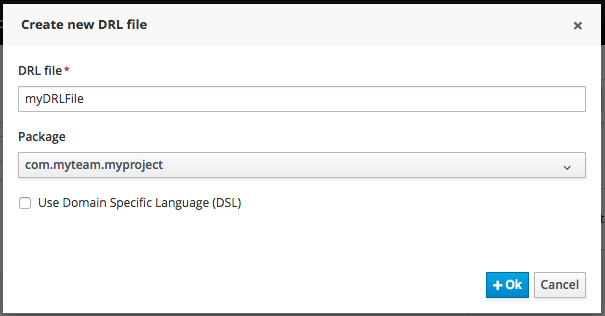

On the traffic-violation project’s home page, click Add Asset.

-

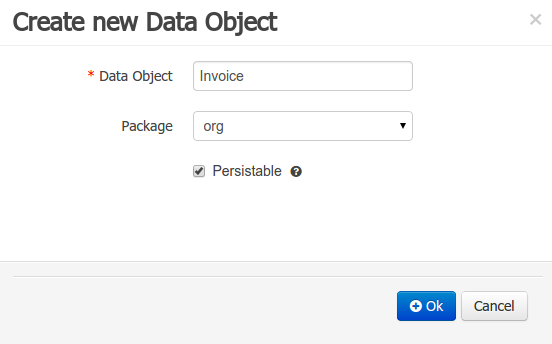

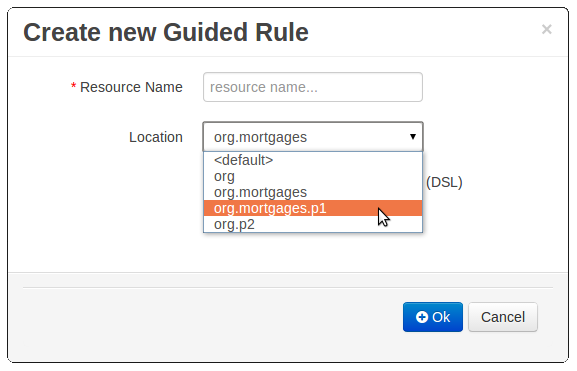

On the Add Asset page, click DMN. The Create new DMN window is opened.

-

In the Create new DMN window, enter

Traffic Violationin the DMN name field. -

From the Package list, select

com.myspace.traffic_violation. -

Click Ok. The DMN asset in the DMN designer is opened.

-

-

In the DMN designer canvas, drag two DMN Input Data input nodes onto the canvas.

Figure 4. DMN Input Data nodes

Figure 4. DMN Input Data nodes -

In the upper-right corner, click the

icon.

icon. -

Double-click the input nodes and rename one to

Driverand the other toViolation. -

Drag a DMN Decision decision node onto the canvas.

-

Double-click the decision node and rename it to

Fine. -

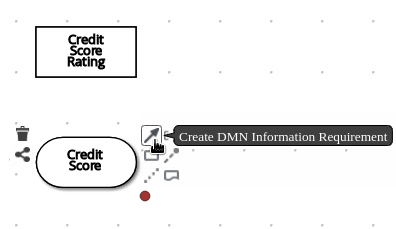

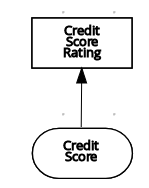

Click the Violation input node, select the Create DMN Information Requirement icon and click the

Finedecision node to link the two nodes. Figure 5. Create DMN Information Requirement icon

Figure 5. Create DMN Information Requirement icon -

Drag a DMN Decision decision node onto the canvas.

-

Double-click the decision node and rename it to

Should the driver be suspended?. -

Click the Driver input node, select the Create DMN Information Requirement icon and click the Should the driver be suspended? decision node to link the two nodes.

-

Click the Fine decision node, select the Create DMN Information Requirement icon, and select the Should the driver be suspended? decision node.

-

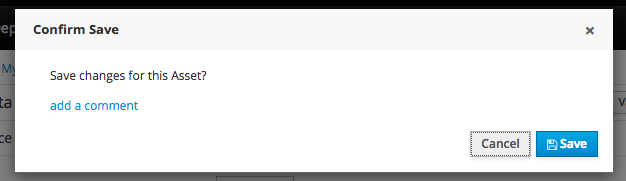

Click Save.

As you periodically save a DRD, the DMN designer performs a static validation of the DMN model and might produce error messages until the model is defined completely. After you finish defining the DMN model completely, if any errors remain, troubleshoot the specified problems accordingly.

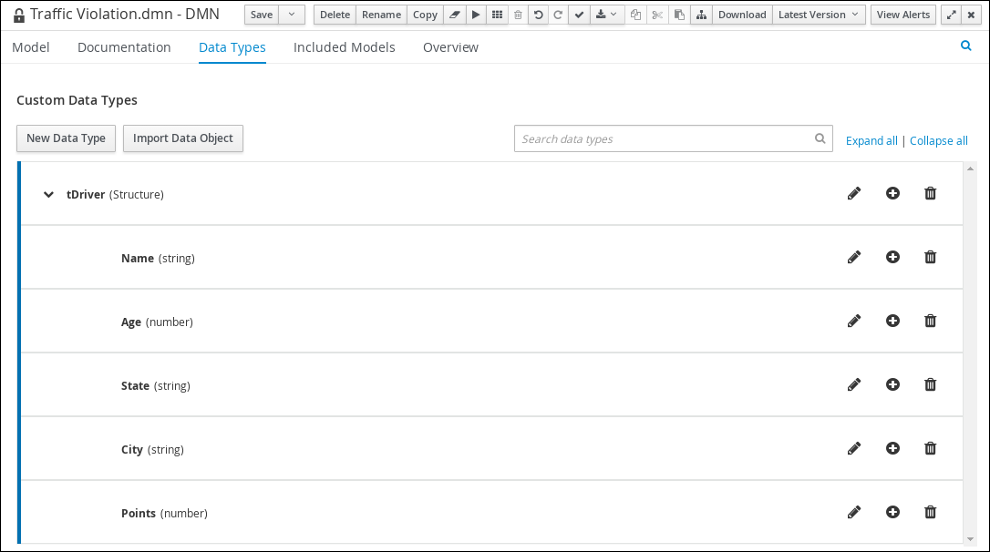

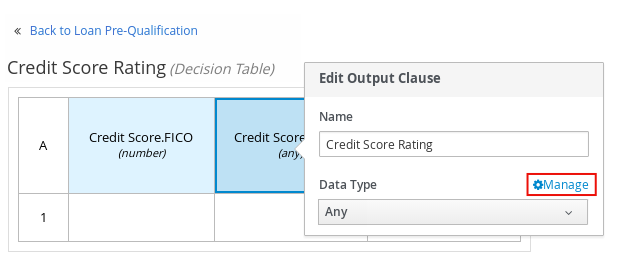

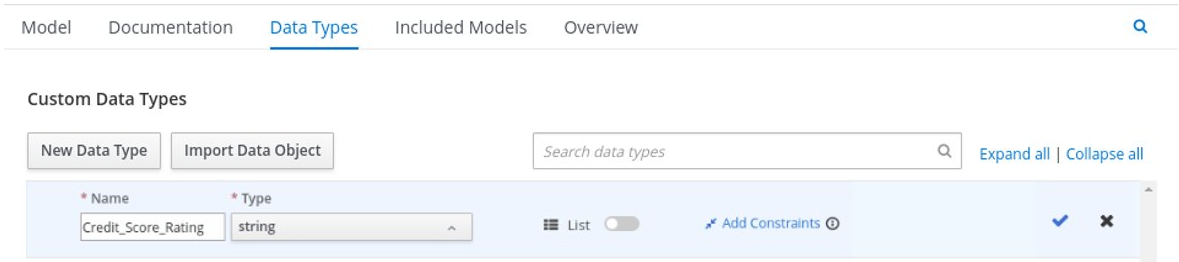

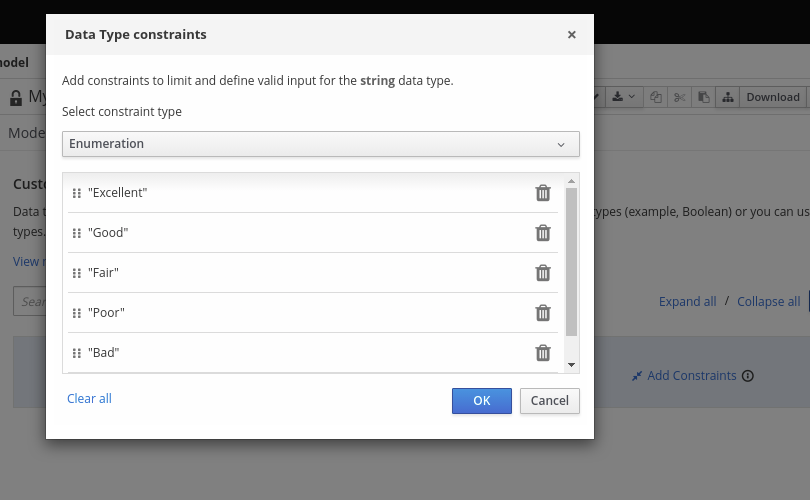

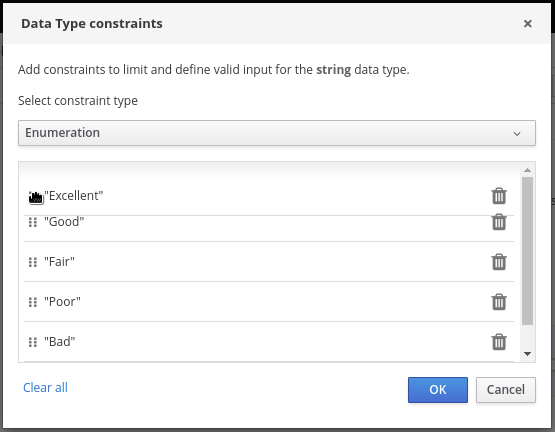

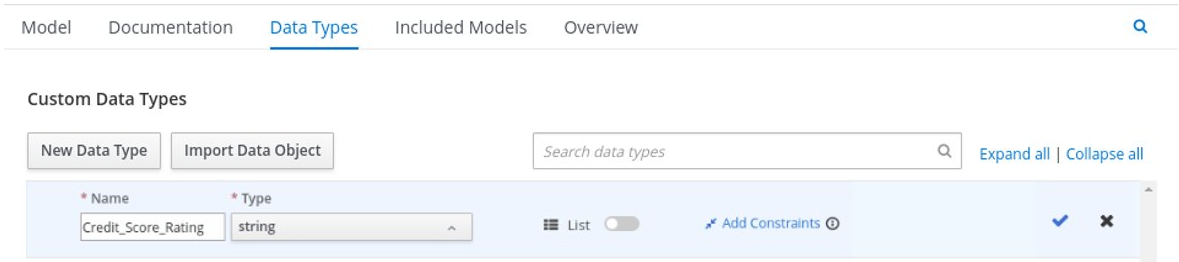

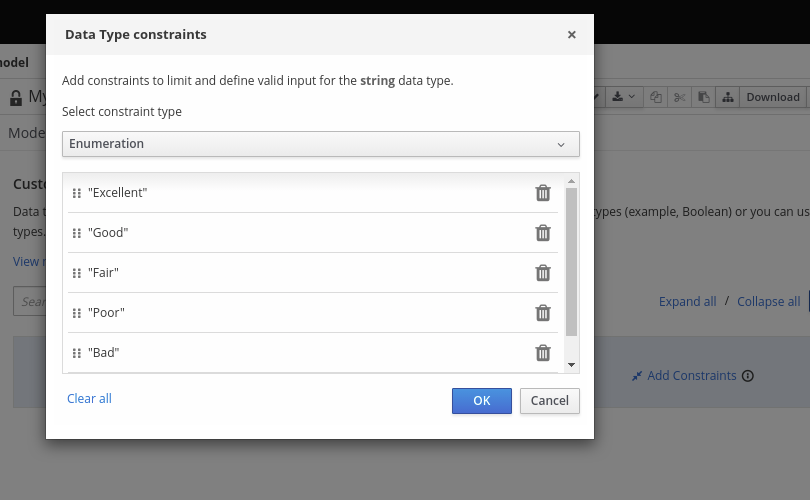

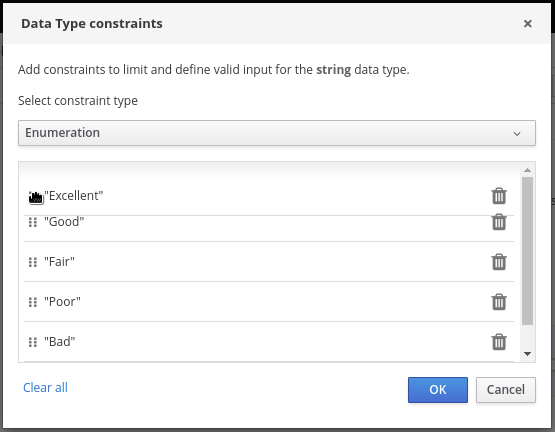

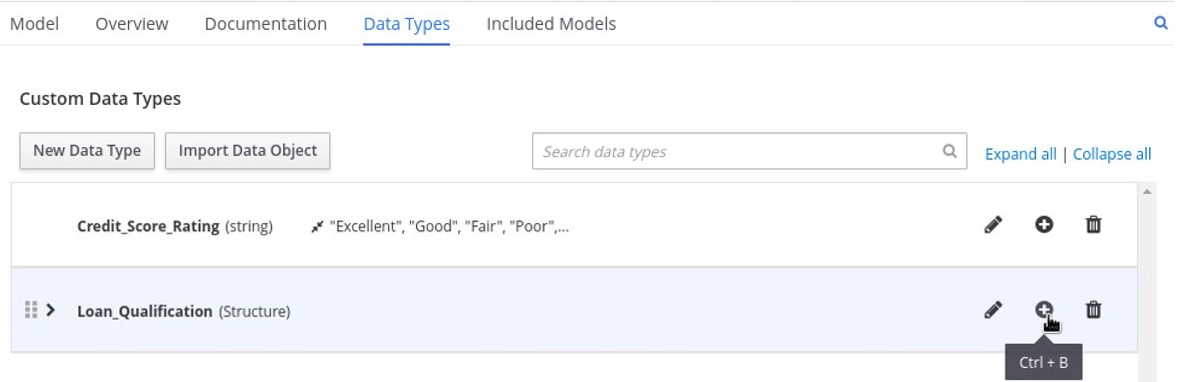

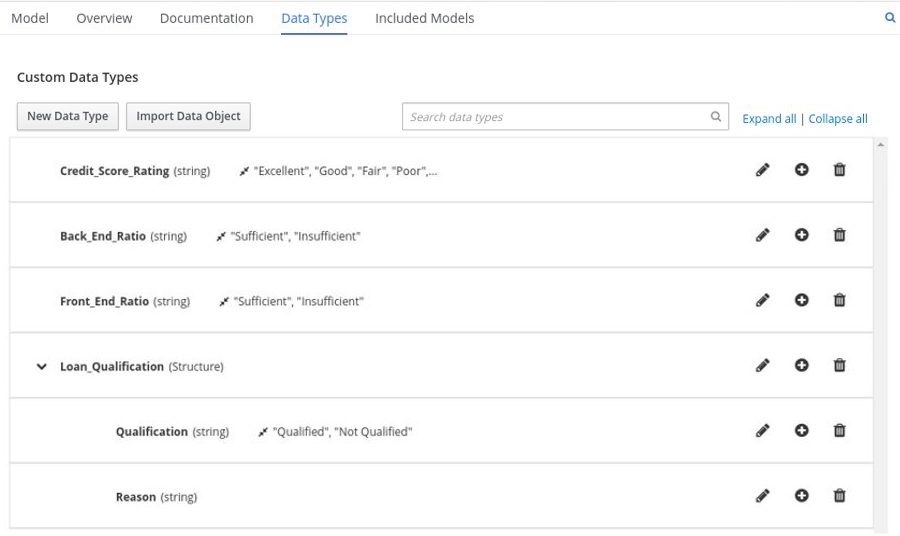

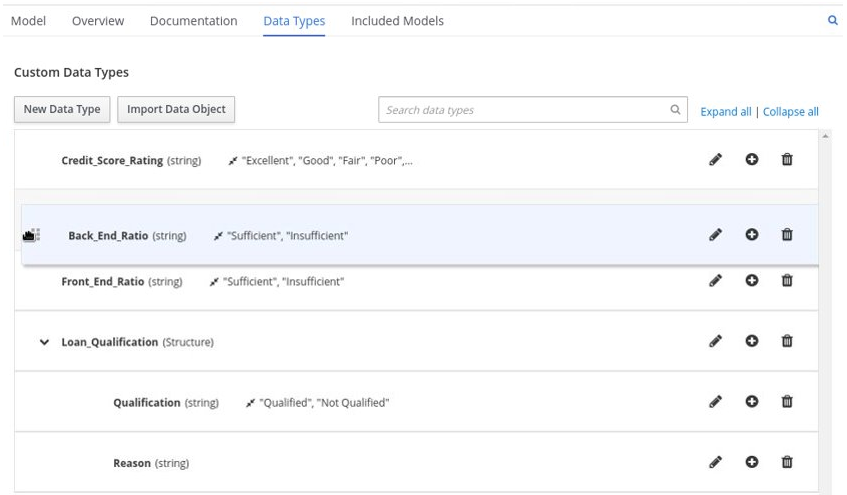

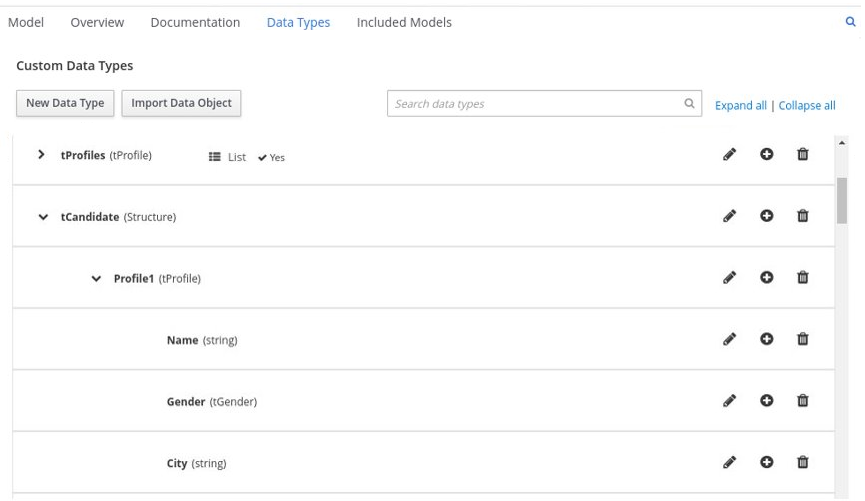

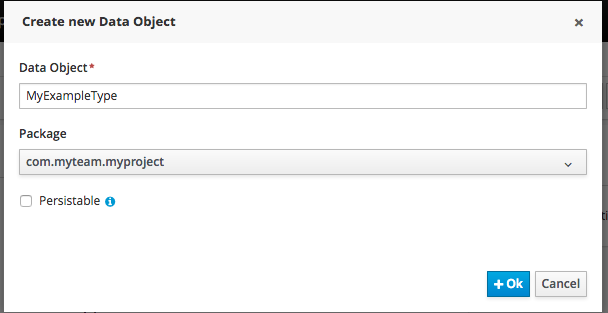

2.3.2. Creating the traffic violations DMN custom data types

DMN data types determine the structure of the data that you use within a table, column, or field in a DMN boxed expression for defining decision logic. You can use default DMN data types (such as string, number, or boolean) or you can create custom data types to specify additional fields and constraints that you want to implement for the boxed expression values. Use the DMN designer’s Data Types tab in Business Central to define the custom data types for the traffic violations project.

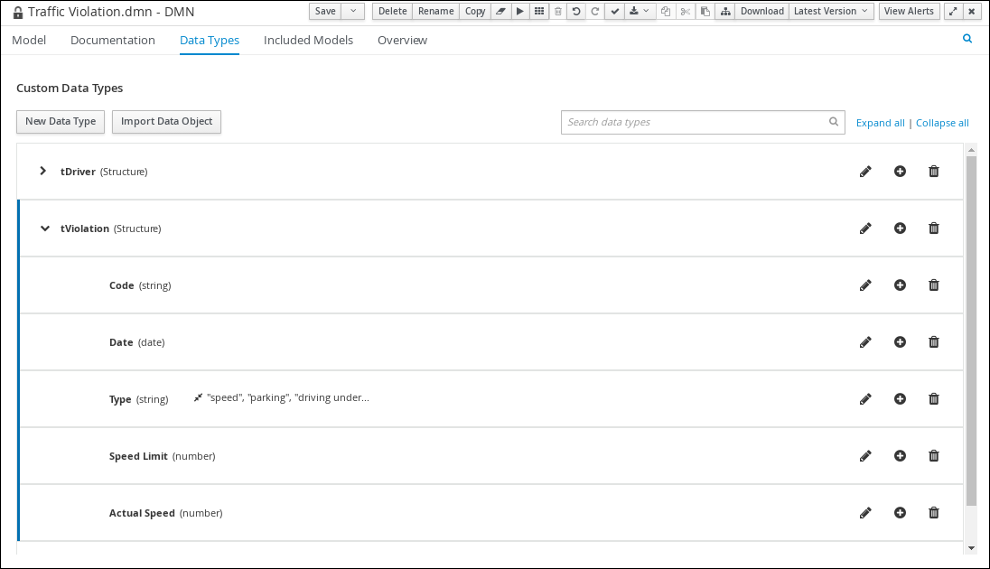

The following tables list the tDriver, tViolation, and tFine custom data types that you will create for this project.

| Name | Type |

|---|---|

tDriver |

Structure |

Name |

string |

Age |

number |

State |

string |

City |

string |

Points |

number |

| Name | Type |

|---|---|

tViolation |

Structure |

Code |

string |

Date |

date |

Type |

string |

Speed Limit |

number |

Actual Speed |

number |

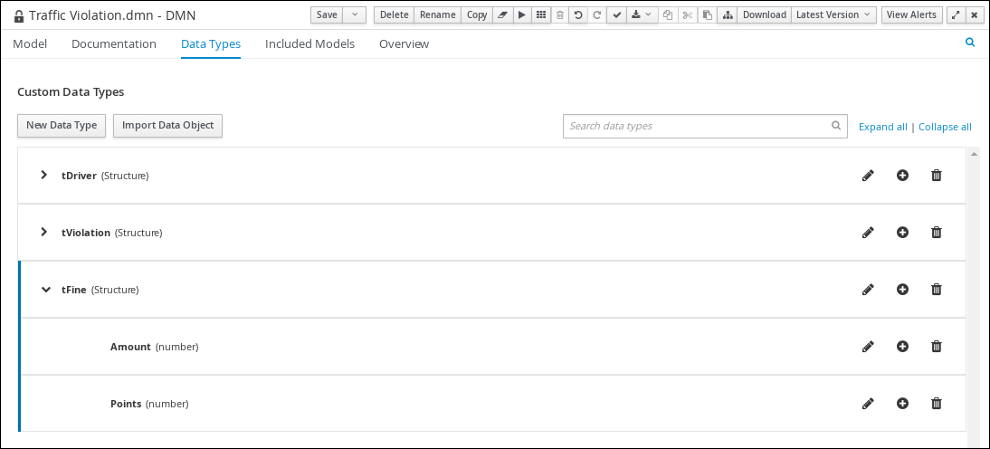

| Name | Type |

|---|---|

tFine |

Structure |

Amount |

number |

Points |

number |

-

You created the traffic violations DMN decision requirements diagram (DRDs) in Business Central.

-

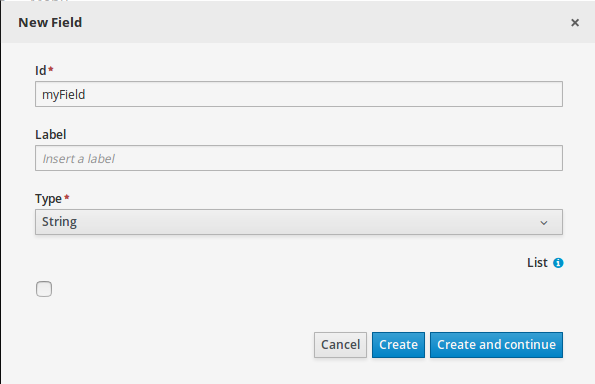

To create the

tDrivercustom data type, click Add a custom Data Type on the Data Types tab, entertDriverin the Name field, and selectStructurefrom the Type list. -

Click the check mark to the right of the new data type to save your changes.

Figure 7. The tDriver custom data type

Figure 7. The tDriver custom data type -

Add each of the following nested data types to the

tDriverstructured data type by clicking the plus sign next totDriverfor each new nested data type. Click the check mark to the right of each new data type to save your changes.-

Name(string) -

Age(number) -

State(string) -

City(string) -

Points(number)

-

-

To create the

tViolationcustom data type, click New Data Type, entertViolationin the Name field, and selectStructurefrom the Type list. -

Click the check mark to the right of the new data type to save your changes.

Figure 8. The tViolation custom data type

Figure 8. The tViolation custom data type -

Add each of the following nested data types to the

tViolationstructured data type by clicking the plus sign next totViolationfor each new nested data type. Click the check mark to the right of each new data type to save your changes.-

Code(string) -

Date(date) -

Type(string) -

Speed Limit(number) -

Actual Speed(number)

-

-

To add the following constraints to the

Typenested data type, click the edit icon, click Add Constraints, and select Enumeration from the Select constraint type drop-down menu.-

speed -

parking -

driving under the influence

-

-

Click OK, then click the check mark to the right of the Type data type to save your changes.

-

To create the

tFinecustom data type, click New Data Type, entertFinein the Name field, selectStructurefrom the Type list, and click Save. Figure 9. The tFine custom data type

Figure 9. The tFine custom data type -

Add each of the following nested data types to the

tFinestructured data type by clicking the plus sign next totFinefor each new nested data type. Click the check mark to the right of each new data type to save your changes.-

Amount(number) -

Points(number)

-

-

Click Save.

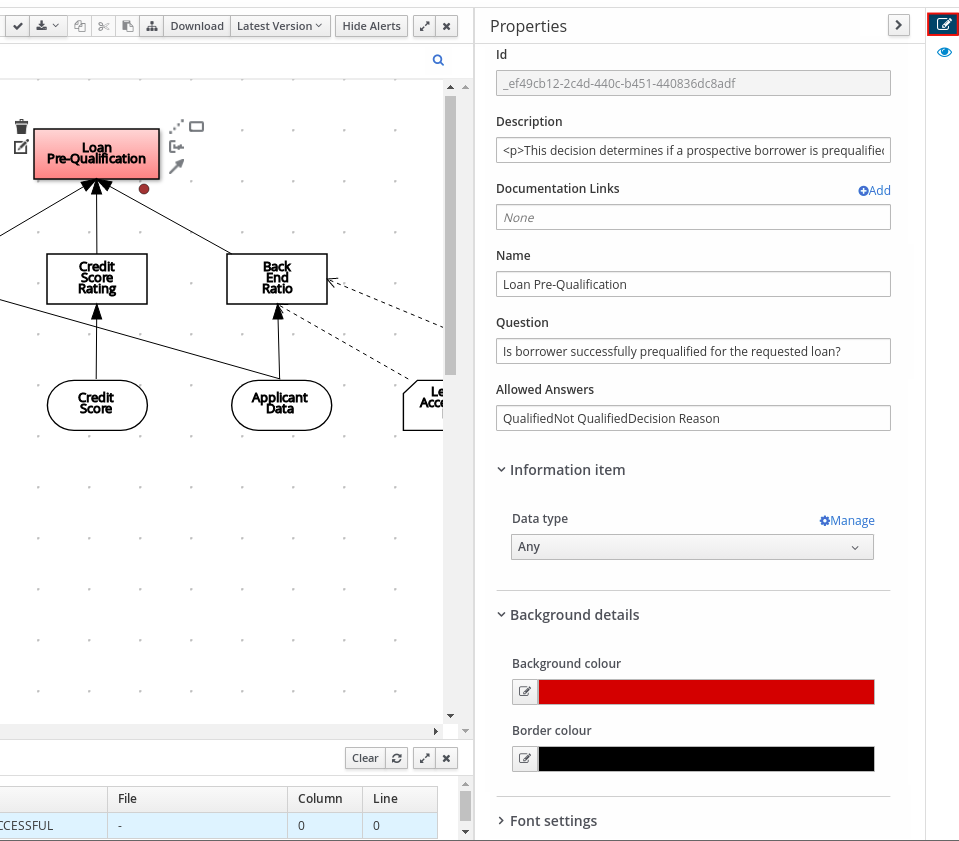

2.3.3. Assigning custom data types to the DRD input and decision nodes

After you create the DMN custom data types, assign them to the appropriate DMN Input Data and DMN Decision nodes in the traffic violations DRD.

-

You have created the traffic violations DMN custom data types in Business Central.

-

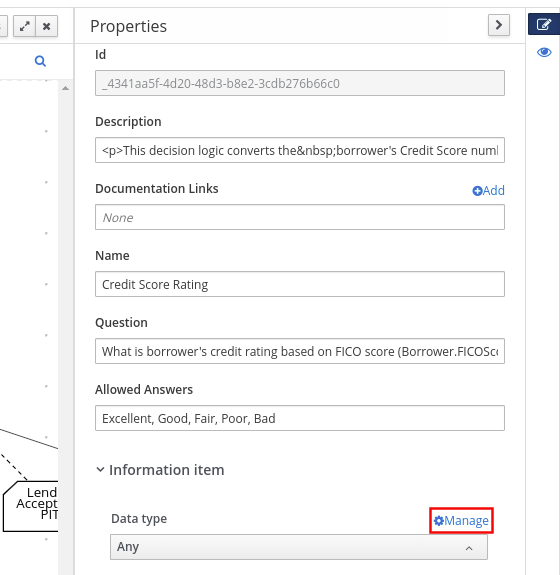

Click the Model tab on the DMN designer and click the Properties

icon in the upper-right corner of the DMN designer to expose the DRD properties.

icon in the upper-right corner of the DMN designer to expose the DRD properties. -

In the DRD, select the Driver input data node and in the Properties panel, select

tDriverfrom the Data type drop-down menu. -

Select the Violation input data node and select

tViolationfrom the Data type drop-down menu. -

Select the Fine decision node and select

tFinefrom the Data type drop-down menu. -

Select the Should the driver be suspended? decision node and set the following properties:

-

Data type:

string -

Question:

Should the driver be suspended due to points on his driver license? -

Allowed Answers:

Yes,No

-

-

Click Save.

You have assigned the custom data types to your DRD’s input and decision nodes.

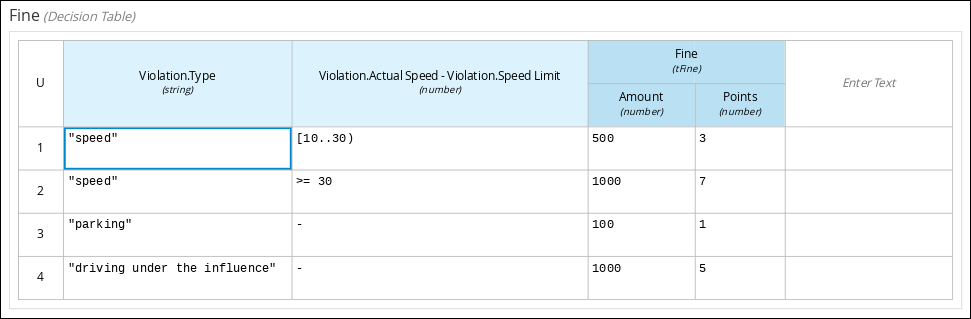

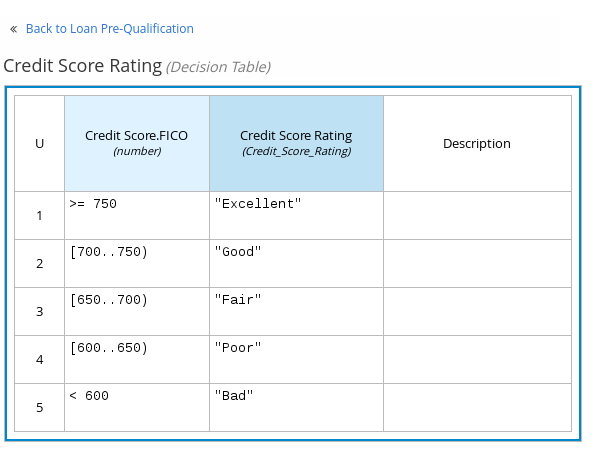

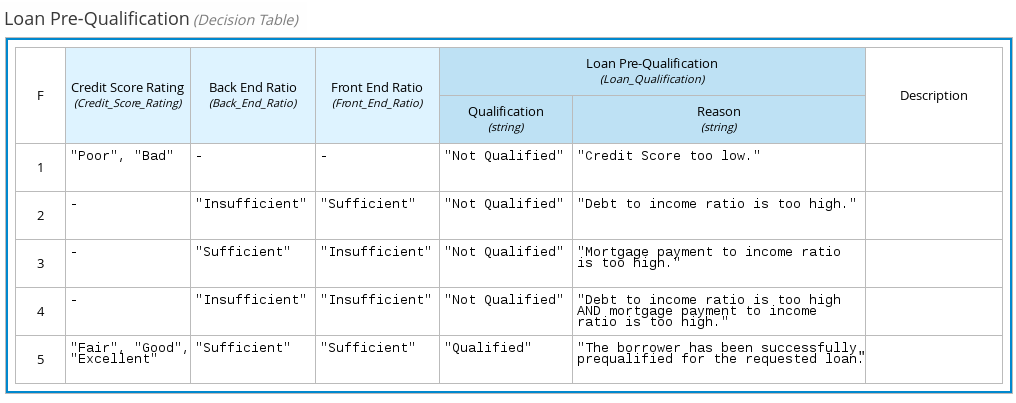

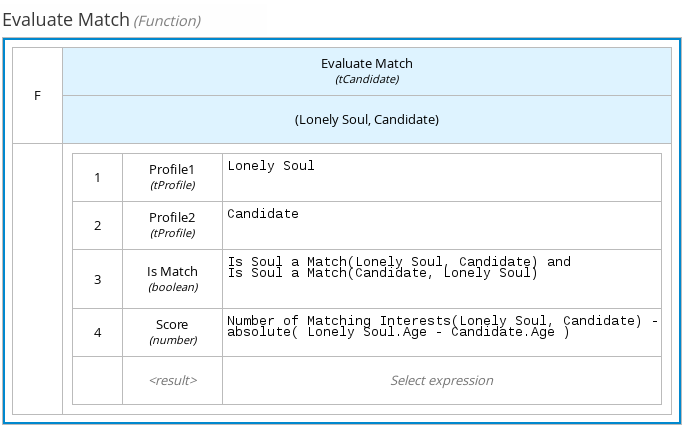

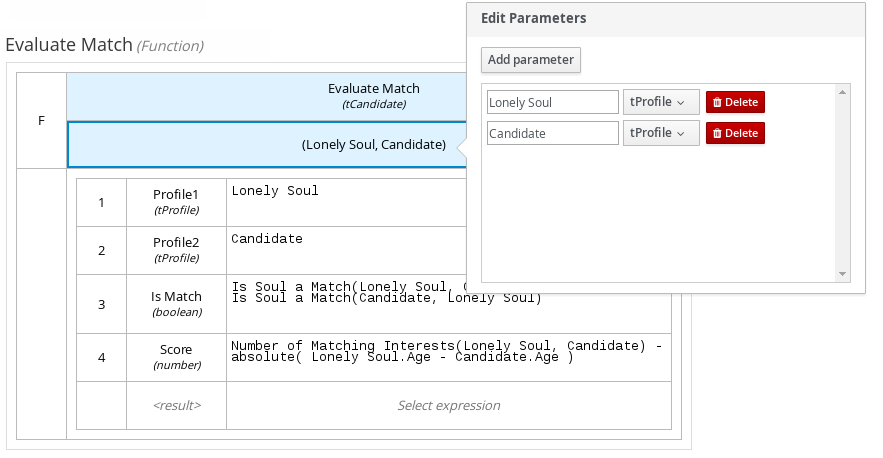

2.3.4. Defining the traffic violations DMN decision logic

To calculate the fine and to decide whether the driver is to be suspended or not, you can define the traffic violations DMN decision logic using a DMN decision table and context boxed expression.

-

You have assigned the DMN custom data types to the appropriate decision and input nodes in the traffic violations DRD in Business Central.

-

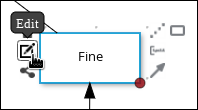

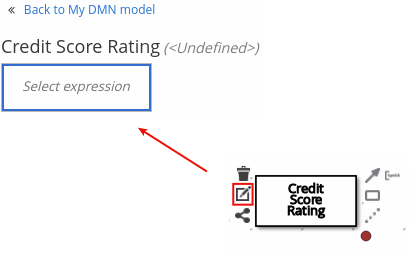

To calculate the fine, in the DMN designer canvas, select the Fine decision node and click the Edit icon to open the DMN boxed expression designer.

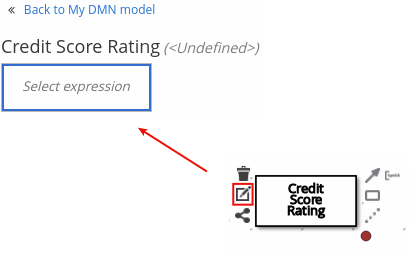

Figure 12. Decision node edit icon

Figure 12. Decision node edit icon -

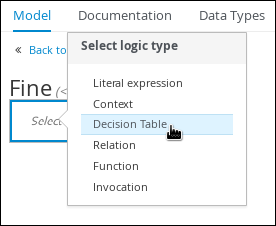

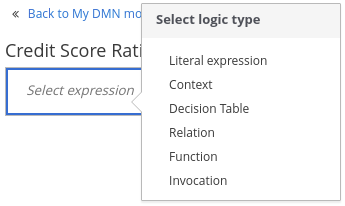

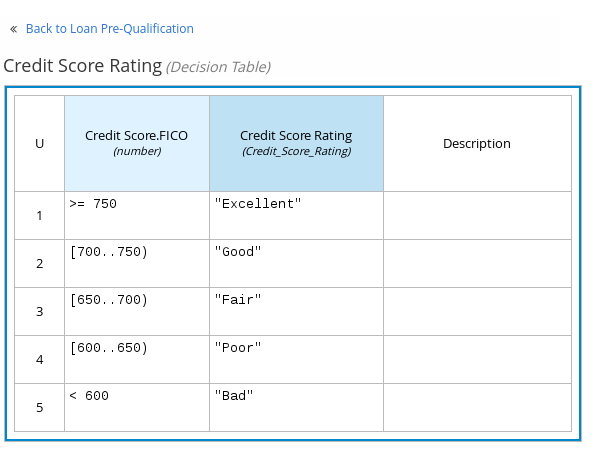

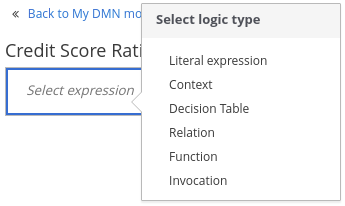

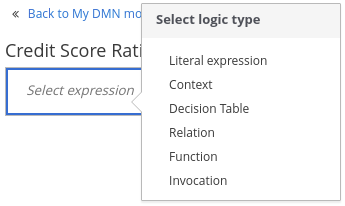

Click Select expression → Decision Table.

Figure 13. Select Decisiong Table logic type

Figure 13. Select Decisiong Table logic type -

For the Violation.Date, Violation.Code, and Violation.Speed Limit columns, right-click and select Delete for each field.

-

Click the Violation.Actual Speed column header and enter the expression

Violation.Actual Speed - Violation.Speed Limitin the Expression field." -

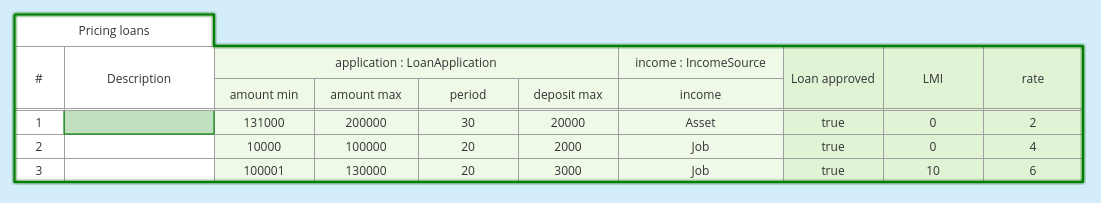

Enter the following values in the first row of the decision table:

-

Violation.Type:

"speed" -

Violation.Actual Speed - Violation.Speed Limit:

[10..30) -

Amount:

500 -

Points:

3Right-click the first row and select

Insert belowto add another row.

-

-

Enter the following values in the second row of the decision table:

-

Violation.Type:

"speed" -

Violation.Actual Speed - Violation.Speed Limit:

>= 30 -

Amount:

1000 -

Points:

7Right-click the second row and select

Insert belowto add another row.

-

-

Enter the following values in the third row of the decision table:

-

Violation.Type:

"parking" -

Violation.Actual Speed - Violation.Speed Limit:

- -

Amount:

100 -

Points:

1Right-click the third row and select

Insert belowto add another row.

-

-

Enter the following values in the fourth row of the decision table:

-

Violation.Type:

"driving under the influence" -

Violation.Actual Speed - Violation.Speed Limit:

- -

Amount:

1000 -

Points:

5

-

-

Click Save.

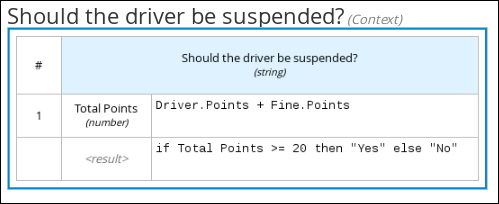

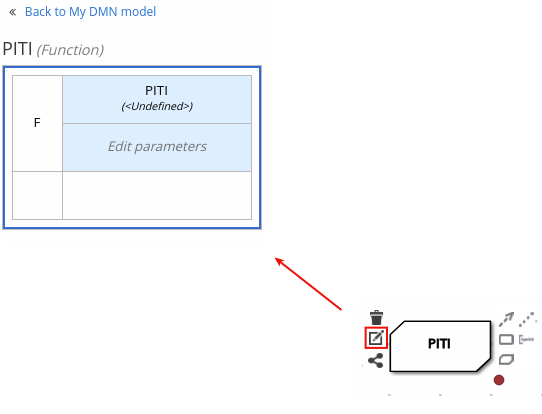

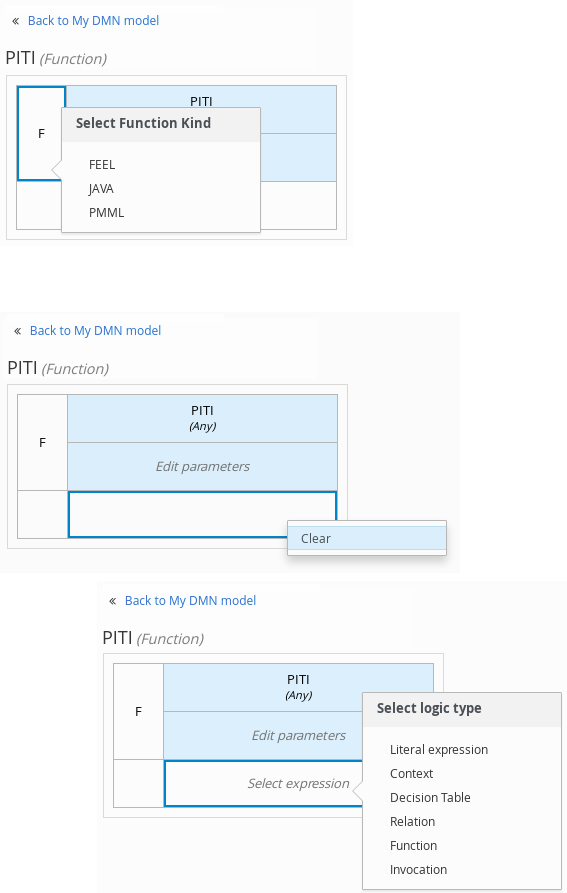

-

To define the driver suspension rule, return to the DMN designer canvas, select the Should the driver be suspended? decision node, and click the Edit icon to open the DMN boxed expression designer.

-

Click Select expression → Context.

-

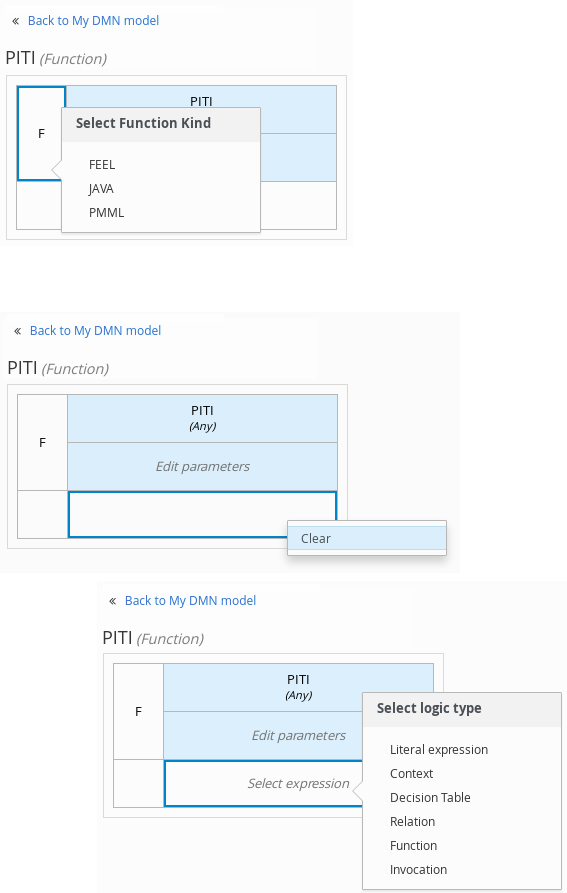

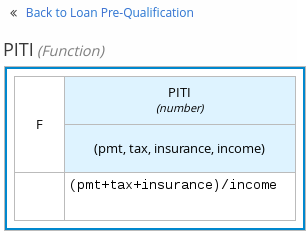

Click ContextEntry-1, enter

Total Pointsas the Name, and selectnumberfrom the Data Type drop-down menu. -

Click the cell next to Total Points, select

Literal expressionfrom the context menu, and enterDriver.Points + Fine.Pointsas the expression. -

In the cell below Driver.Points + Fine.Points, select

Literal Expressionfrom the context menu, and enterif Total Points >= 20 then "Yes" else "No". -

Click Save.

You have defined how to calculate the fine and the context for deciding when to suspend the driver. You can navigate to the traffic-violation project page and click Build to build the example project and address any errors noted in the Alerts panel.

2.4. Test scenarios

Test scenarios in Drools enable you to validate the functionality of business rules and business rule data (for rules-based test scenarios) or of DMN models (for DMN-based test scenarios) before deploying them into a production environment. With a test scenario, you use data from your project to set given conditions and expected results based on one or more defined business rules. When you run the scenario, the expected results and actual results of the rule instance are compared. If the expected results match the actual results, the test is successful. If the expected results do not match the actual results, then the test fails.

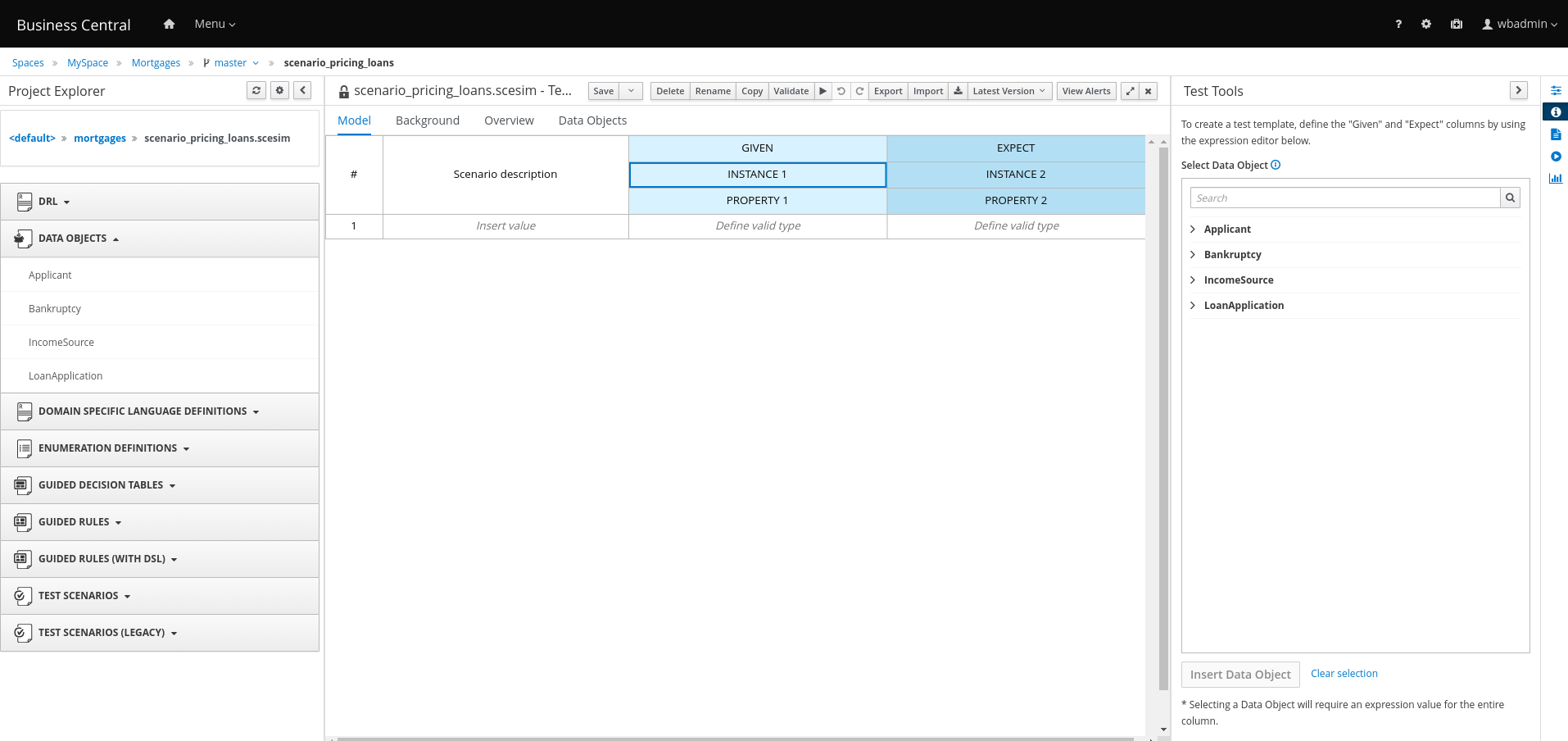

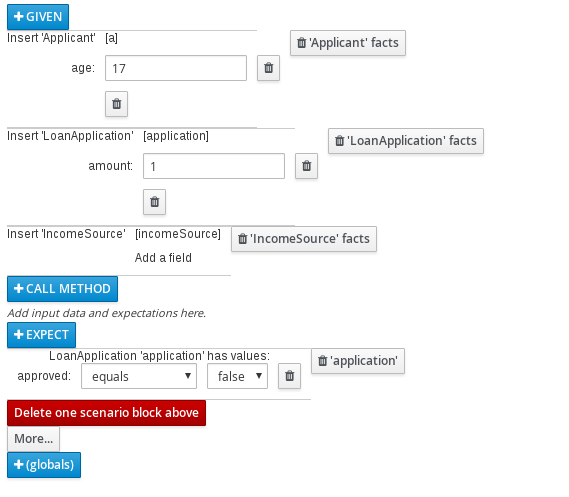

Drools includes both the new Test Scenarios designer and the former Test Scenarios (Legacy) designer. The default designer is the new test scenarios designer, which supports the testing of both rules and DMN models and provides an enhanced overall user experience with test scenarios. If required, you can continue to use the legacy test scenarios designer, which supports rule-based test scenarios only.

| The legacy test scenarios designer is deprecated from Drools version 7.3.0. It will be removed in a future Drools release. Use the new test scenarios designer instead. |

You can run the defined test scenarios in a number of ways, for example, you can run available test scenarios at the project level or inside a specific test scenario asset. Test scenarios are independent and cannot affect or modify other test scenarios. You can run test scenarios at any time during project development in Business Central. You do not have to compile or deploy your decision service to run test scenarios.

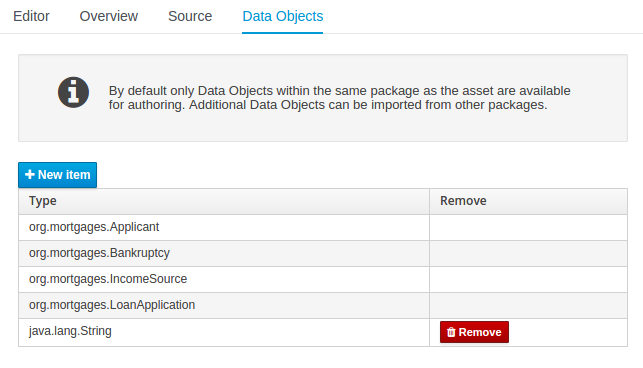

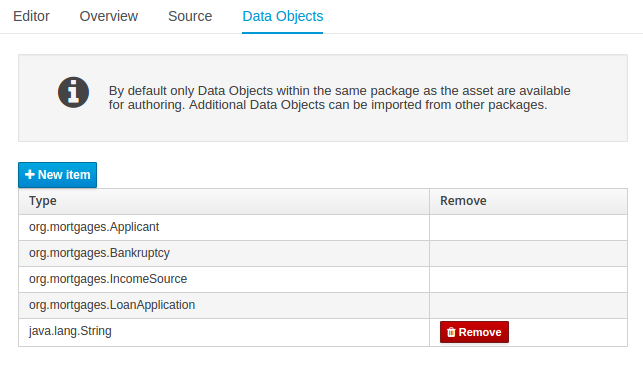

You can import data objects from different packages to the same project package as the test scenario. Assets in the same package are imported by default. After you create the necessary data objects and the test scenario, you can use the Data Objects tab of the test scenarios designer to verify that all required data objects are listed or to import other existing data objects by adding a New item.

| Throughout the test scenarios documentation, all references to test scenarios and the test scenarios designer are for the new version, unless explicitly noted as the legacy version. |

2.4.1. Testing the traffic violations using test scenarios

Use the test scenarios designer in Business Central to test the DMN decision requirements diagrams (DRDs) and define decision logic for the traffic violations project.

-

You have successfully built the traffic violations project in Business Central.

-

On the traffic-violation project’s home screen, click Add Asset to open the Add Asset screen.

-

Click Test Scenario to open the Create new Test Scenario dialog.

-

Enter

Violation Scenariosin the Test Scenario field. -

From the Package list, select

com.myspace.traffic_violation. -

Select

DMNas the Source type. -

From the Choose a DMN asset list, select the path to the DMN asset.

-

Click Ok to open the Violation Scenarios test scenario in the Test Scenarios designer.

-

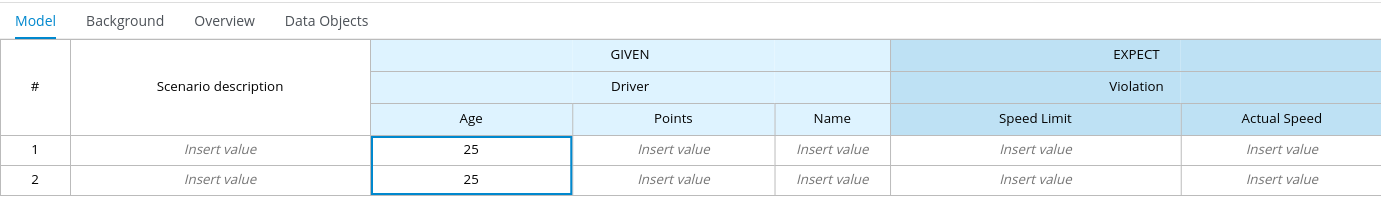

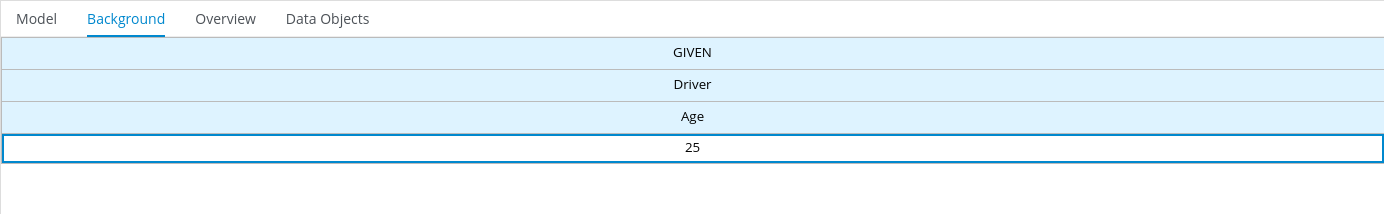

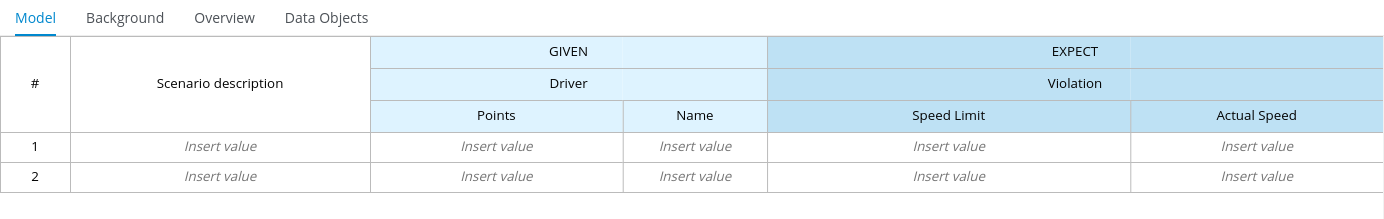

-

Under Driver column sub-header, right-click the State, City, Age, and Name value cells and select Delete column from the context menu options to remove them.

-

Under Violation column sub-header, right-click the Date and Code value cells and select Delete column to remove them.

-

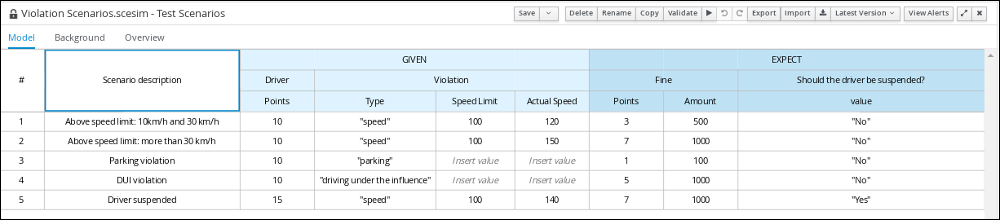

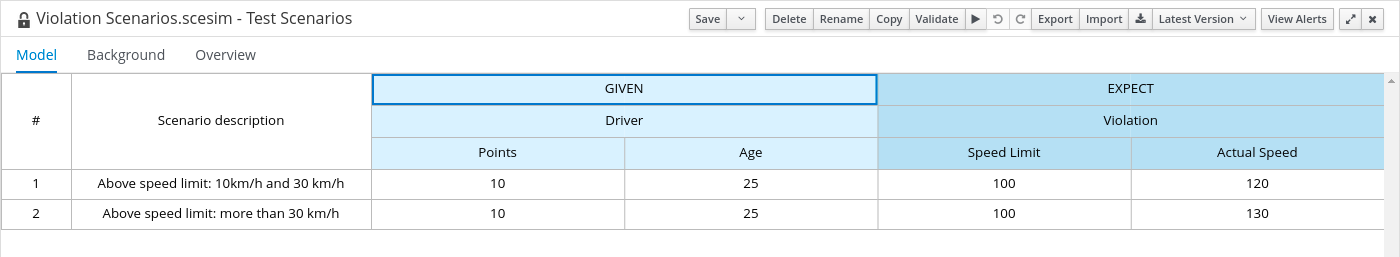

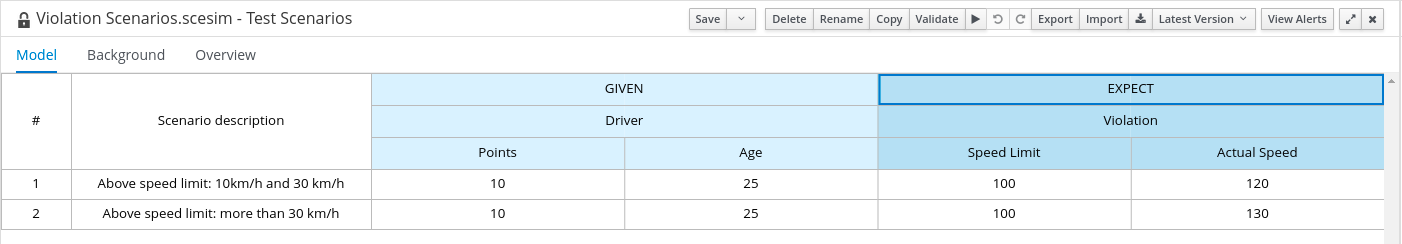

Enter the following information in the first row of the test scenarios:

-

Scenario description:

Above speed limit: 10km/h and 30 km/h -

Points (under Given column header):

10 -

Type:

"speed" -

Speed Limit:

100 -

Actual Speed:

120 -

Points:

3 -

Amount:

500 -

Should the driver be suspended?:

"No"Right-click the first row and select Insert row below to add another row.

-

-

Enter the following information in the second row of the test scenarios:

-

Scenario description:

Above speed limit: more than 30 km/h -

Points (under Given column header):

10 -

Type:

"speed" -

Speed Limit:

100 -

Actual Speed:

150 -

Points:

7 -

Amount:

1000 -

Should the driver be suspended?:

"No"Right-click the second row and select Insert row below to add another row.

-

-

Enter the following information in the third row of the test scenarios:

-

Scenario description:

Parking violation -

Points (under Given column header):

10 -

Type:

"parking" -

Speed Limit: leave blank

-

Actual Speed: leave blank

-

Points:

1 -

Amount:

100 -

Should the driver be suspended?:

"No"Right-click the third row and select Insert row below to add another row.

-

-

Enter the following information in the fourth row of the test scenarios:

-

Scenario description:

DUI violation -

Points (under Given column header):

10 -

Type:

"driving under the influence" -

Speed Limit: leave blank

-

Actual Speed: leave blank

-

Points:

5 -

Amount:

1000 -

Should the driver be suspended?:

"No"Right-click the fourth row and select Insert row below to add another row.

-

-

Enter the following information in the fifth row of the test scenarios:

-

Scenario description:

Driver suspended -

Points (under Given column header):

15 -

Type:

"speed" -

Speed Limit:

100 -

Actual Speed:

140 -

Points:

7 -

Amount:

1000 -

Should the driver be suspended?:

"Yes"

-

-

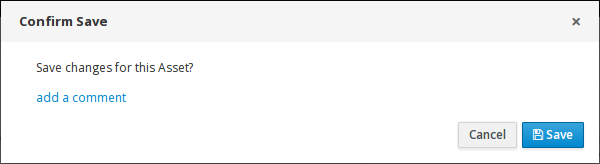

Click Save.

-

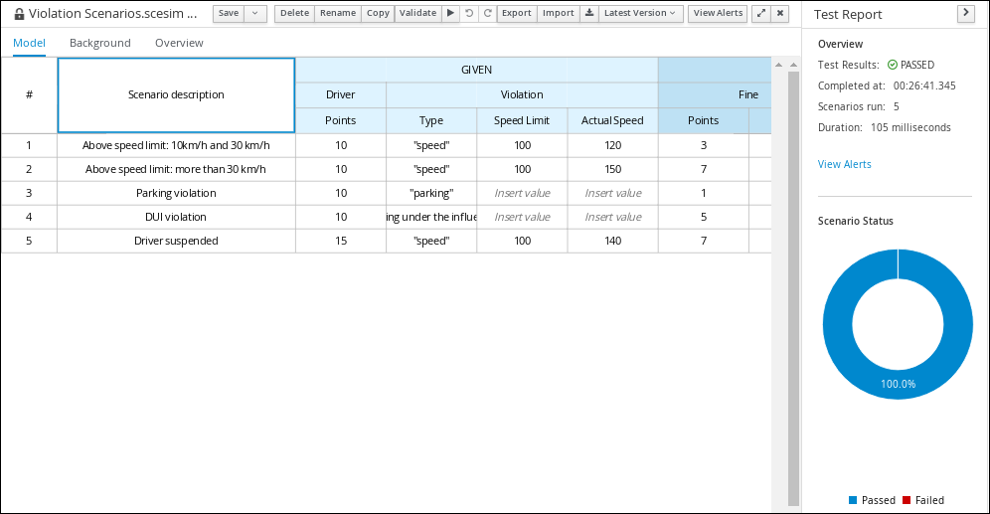

Click the Play icon

to check whether the test scenarios pass or fail.

to check whether the test scenarios pass or fail. Figure 15. Test scenario execution result for the traffic violations example

Figure 15. Test scenario execution result for the traffic violations exampleIn case of failure, correct the errors and run the test scenarios again.

2.5. DMN model execution

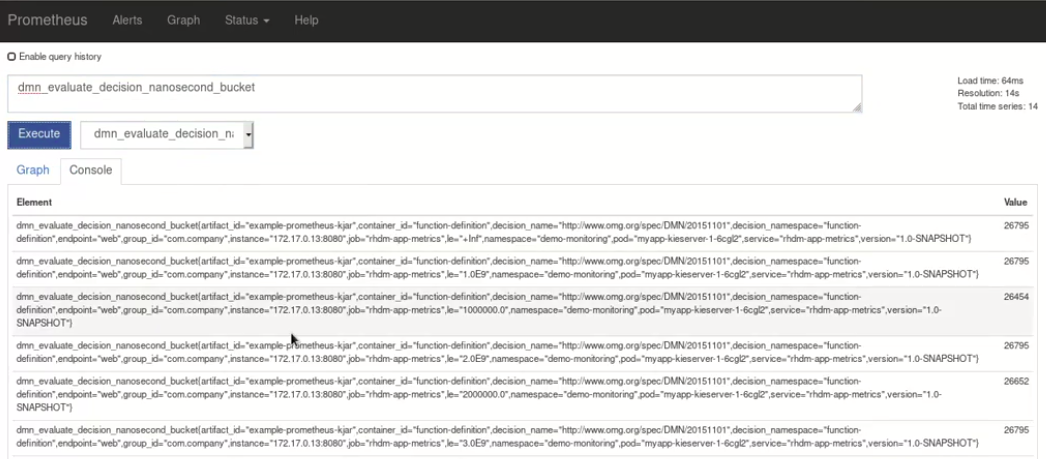

You can create or import DMN files in your Drools project using Business Central or package the DMN files as part of your project knowledge JAR (KJAR) file without Business Central. After you implement your DMN files in your Drools project, you can execute the DMN decision service by deploying the KIE container that contains it to KIE Server for remote access and interacting with the container using the KIE Server REST API.

For information about including external DMN assets with your project packaging and deployment method, see Build, Deploy, Utilize and Run.

2.5.1. Executing a DMN service using the KIE Server REST API

Directly interacting with the REST endpoints of KIE Server provides the most separation between the calling code and the decision logic definition. The calling code is completely free of direct dependencies, and you can implement it in an entirely different development platform such as Node.js or .NET. The examples in this section demonstrate Nix-style curl commands but provide relevant information to adapt to any REST client.

When you use a REST endpoint of KIE Server, the best practice is to define a domain object POJO Java class, annotated with standard KIE Server marshalling annotations. For example, the following code is using a domain object Person class that is annotated properly:

@javax.xml.bind.annotation.XmlAccessorType(javax.xml.bind.annotation.XmlAccessType.FIELD)

public class Person implements java.io.Serializable {

static final long serialVersionUID = 1L;

private java.lang.String id;

private java.lang.String name;

@javax.xml.bind.annotation.adapters.XmlJavaTypeAdapter(org.kie.internal.jaxb.LocalDateXmlAdapter.class)

private java.time.LocalDate dojoining;

public Person() {

}

public java.lang.String getId() {

return this.id;

}

public void setId(java.lang.String id) {

this.id = id;

}

public java.lang.String getName() {

return this.name;

}

public void setName(java.lang.String name) {

this.name = name;

}

public java.time.LocalDate getDojoining() {

return this.dojoining;

}

public void setDojoining(java.time.LocalDate dojoining) {

this.dojoining = dojoining;

}

public Person(java.lang.String id, java.lang.String name,

java.time.LocalDate dojoining) {

this.id = id;

this.name = name;

this.dojoining = dojoining;

}

}For more information about the KIE Server REST API, see KIE Server REST API for KIE containers and business assets.

-

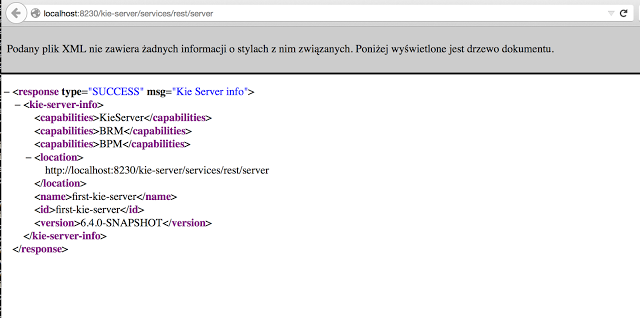

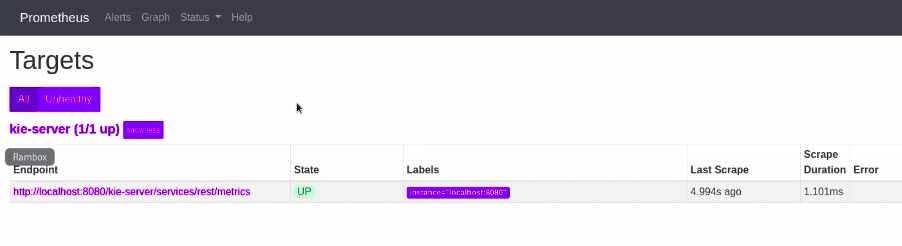

KIE Server is installed and configured, including a known user name and credentials for a user with the

kie-serverrole. For installation options, see Installation and Setup (Core and IDE). -

You have built the DMN project as a KJAR artifact and deployed it to KIE Server:

mvn clean installFor more information about project packaging and deployment and executable models, see Build, Deploy, Utilize and Run.

-

You have the ID of the KIE container containing the DMN model. If more than one model is present, you must also know the model namespace and model name of the relevant model.

-

Determine the base URL for accessing the KIE Server REST API endpoints. This requires knowing the following values (with the default local deployment values as an example):

-

Host (

localhost) -

Port (

8080) -

Root context (

kie-server) -

Base REST path (

services/rest/)

Example base URL in local deployment for the traffic violations project:

http://localhost:8080/kie-server/services/rest/server/containers/traffic-violation_1.0.0-SNAPSHOT -

-

Determine user authentication requirements.

When users are defined directly in the KIE Server configuration, HTTP Basic authentication is used and requires the user name and password. Successful requests require that the user have the

kie-serverrole.The following example demonstrates how to add credentials to a curl request:

curl -u username:password <request>If KIE Server is configured with Red Hat Single Sign-On, the request must include a bearer token:

curl -H "Authorization: bearer $TOKEN" <request> -

Specify the format of the request and response. The REST API endpoints work with both JSON and XML formats and are set using request headers:

JSONcurl -H "accept: application/json" -H "content-type: application/json"XMLcurl -H "accept: application/xml" -H "content-type: application/xml" -

Optional: Query the container for a list of deployed decision models:

[GET]

server/containers/{containerId}/dmnExample curl request:

curl -u wbadmin:wbadmin -H "accept: application/xml" -X GET "http://localhost:8080/kie-server/services/rest/server/containers/traffic-violation_1.0.0-SNAPSHOT/dmn"Sample XML output:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?> <response type="SUCCESS" msg="Ok models successfully retrieved from container 'traffic-violation_1.0.0-SNAPSHOT'"> <dmn-model-info-list> <model> <model-namespace>https://kiegroup.org/dmn/_60b01f4d-e407-43f7-848e-258723b5fac8</model-namespace> <model-name>Traffic Violation</model-name> <model-id>_2CD7D1AA-BD84-4B43-AD21-B0342ADE655A</model-id> <decisions> <dmn-decision-info> <decision-id>_23428EE8-DC8B-4067-8E67-9D7C53EC975F</decision-id> <decision-name>Fine</decision-name> </dmn-decision-info> <dmn-decision-info> <decision-id>_B5EEE2B1-915C-44DC-BE43-C244DC066FD8</decision-id> <decision-name>Should the driver be suspended?</decision-name> </dmn-decision-info> </decisions> <inputs> <dmn-inputdata-info> <inputdata-id>_CEB959CD-3638-4A87-93BA-03CD0FB63AE3</inputdata-id> <inputdata-name>Violation</inputdata-name> <inputdata-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>tViolation</local-part> <prefix></prefix> </inputdata-typeref> </dmn-inputdata-info> <dmn-inputdata-info> <inputdata-id>_B0E810E6-7596-430A-B5CF-67CE16863B6C</inputdata-id> <inputdata-name>Driver</inputdata-name> <inputdata-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>tDriver</local-part> <prefix></prefix> </inputdata-typeref> </dmn-inputdata-info> </inputs> <itemdefinitions> <dmn-itemdefinition-info> <itemdefinition-id>_9C758F4A-7D72-4D0F-B63F-2F5B8405980E</itemdefinition-id> <itemdefinition-name>tViolation</itemdefinition-name> <itemdefinition-itemcomponent> <dmn-itemdefinition-info> <itemdefinition-id>_0B6FF1E2-ACE9-4FB3-876B-5BB30B88009B</itemdefinition-id> <itemdefinition-name>Code</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60b01f4d-e407-43f7-848e-258723b5fac8</namespace-uri> <local-part>string</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_27A5DA18-3CA7-4C06-81B7-CF7F2F050E29</itemdefinition-id> <itemdefinition-name>date</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>date</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_8961969A-8A80-4F12-B568-346920C0F038</itemdefinition-id> <itemdefinition-name>type</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>string</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_7450F12A-3E95-4D5E-8DCE-2CB1FAC2BDD4</itemdefinition-id> <itemdefinition-name>speed limit</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60b01f4d-e407-43f7-848e-258723b5fac8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_0A9A6F26-6C14-414D-A9BF-765E5850429A</itemdefinition-id> <itemdefinition-name>Actual Speed</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> </itemdefinition-itemcomponent> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_13C7EFD8-B85C-43BF-94D3-14FABE39A4A0</itemdefinition-id> <itemdefinition-name>tDriver</itemdefinition-name> <itemdefinition-itemcomponent> <dmn-itemdefinition-info> <itemdefinition-id>_EC11744C-4160-4549-9610-2C757F40DFE8</itemdefinition-id> <itemdefinition-name>Name</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>string</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_E95BE3DB-4A51-4658-A166-02493EAAC9D2</itemdefinition-id> <itemdefinition-name>Age</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_7B3023E2-BC44-4BF3-BF7E-773C240FB9AD</itemdefinition-id> <itemdefinition-name>State</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>string</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_3D4B49DD-700C-4925-99A7-3B2B873F7800</itemdefinition-id> <itemdefinition-name>city</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>string</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_B37C49E8-B0D9-4B20-9DC6-D655BB1CA7B1</itemdefinition-id> <itemdefinition-name>Points</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> </itemdefinition-itemcomponent> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_A4077C7E-B57A-4DEE-9C65-7769636316F3</itemdefinition-id> <itemdefinition-name>tFine</itemdefinition-name> <itemdefinition-itemcomponent> <dmn-itemdefinition-info> <itemdefinition-id>_79B152A8-DE83-4001-B88B-52DFF0D73B2D</itemdefinition-id> <itemdefinition-name>Amount</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> <dmn-itemdefinition-info> <itemdefinition-id>_D7CB5F9C-9D55-48C2-83EE-D47045EC90D0</itemdefinition-id> <itemdefinition-name>Points</itemdefinition-name> <itemdefinition-typeref> <namespace-uri>https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8</namespace-uri> <local-part>number</local-part> <prefix></prefix> </itemdefinition-typeref> <itemdefinition-itemcomponent/> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> </itemdefinition-itemcomponent> <itemdefinition-iscollection>false</itemdefinition-iscollection> </dmn-itemdefinition-info> </itemdefinitions> <decisionservices/> </model> </dmn-model-info-list> </response>Sample JSON output:

{ "type" : "SUCCESS", "msg" : "OK models successfully retrieved from container 'Traffic-Violation_1.0.0-SNAPSHOT'", "result" : { "dmn-model-info-list" : { "models" : [ { "model-namespace" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "model-name" : "Traffic Violation", "model-id" : "_2CD7D1AA-BD84-4B43-AD21-B0342ADE655A", "decisions" : [ { "decision-id" : "_23428EE8-DC8B-4067-8E67-9D7C53EC975F", "decision-name" : "Fine" }, { "decision-id" : "_B5EEE2B1-915C-44DC-BE43-C244DC066FD8", "decision-name" : "Should the driver be suspended?" } ], "inputs" : [ { "inputdata-id" : "_CEB959CD-3638-4A87-93BA-03CD0FB63AE3", "inputdata-name" : "Violation", "inputdata-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "tViolation", "prefix" : "" } }, { "inputdata-id" : "_B0E810E6-7596-430A-B5CF-67CE16863B6C", "inputdata-name" : "Driver", "inputdata-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "tDriver", "prefix" : "" } } ], "itemDefinitions" : [ { "itemdefinition-id" : "_13C7EFD8-B85C-43BF-94D3-14FABE39A4A0", "itemdefinition-name" : "tDriver", "itemdefinition-typeRef" : null, "itemdefinition-itemComponent" : [ { "itemdefinition-id" : "_EC11744C-4160-4549-9610-2C757F40DFE8", "itemdefinition-name" : "Name", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "string", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_E95BE3DB-4A51-4658-A166-02493EAAC9D2", "itemdefinition-name" : "Age", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_7B3023E2-BC44-4BF3-BF7E-773C240FB9AD", "itemdefinition-name" : "State", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "string", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_3D4B49DD-700C-4925-99A7-3B2B873F7800", "itemdefinition-name" : "City", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "string", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_B37C49E8-B0D9-4B20-9DC6-D655BB1CA7B1", "itemdefinition-name" : "Points", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false } ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_A4077C7E-B57A-4DEE-9C65-7769636316F3", "itemdefinition-name" : "tFine", "itemdefinition-typeRef" : null, "itemdefinition-itemComponent" : [ { "itemdefinition-id" : "_79B152A8-DE83-4001-B88B-52DFF0D73B2D", "itemdefinition-name" : "Amount", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_D7CB5F9C-9D55-48C2-83EE-D47045EC90D0", "itemdefinition-name" : "Points", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false } ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_9C758F4A-7D72-4D0F-B63F-2F5B8405980E", "itemdefinition-name" : "tViolation", "itemdefinition-typeRef" : null, "itemdefinition-itemComponent" : [ { "itemdefinition-id" : "_0B6FF1E2-ACE9-4FB3-876B-5BB30B88009B", "itemdefinition-name" : "Code", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "string", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_27A5DA18-3CA7-4C06-81B7-CF7F2F050E29", "itemdefinition-name" : "Date", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "date", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_8961969A-8A80-4F12-B568-346920C0F038", "itemdefinition-name" : "Type", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "string", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_7450F12A-3E95-4D5E-8DCE-2CB1FAC2BDD4", "itemdefinition-name" : "Speed Limit", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false }, { "itemdefinition-id" : "_0A9A6F26-6C14-414D-A9BF-765E5850429A", "itemdefinition-name" : "Actual Speed", "itemdefinition-typeRef" : { "namespace-uri" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "local-part" : "number", "prefix" : "" }, "itemdefinition-itemComponent" : [ ], "itemdefinition-isCollection" : false } ], "itemdefinition-isCollection" : false } ], "decisionServices" : [ ] } ] } } } -

Execute the model:

[POST]

server/containers/{containerId}/dmnThe attribute

model-namespaceis automatically generated and is different for every user. Ensure that themodel-namespaceandmodel-nameattributes that you use match those of the deployed model.Example curl request:

curl -u wbadmin:wbadmin -H "accept: application/json" -H "content-type: application/json" -X POST "http://localhost:8080/kie-server/services/rest/server/containers/traffic-violation_1.0.0-SNAPSHOT/dmn" -d "{ \"model-namespace\" : \"https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8\", \"model-name\" : \"Traffic Violation\", \"dmn-context\" : {\"Driver\" : {\"Points\" : 15}, \"Violation\" : {\"Type\" : \"speed\", \"Actual Speed\" : 135, \"Speed Limit\" : 100}}}"Example JSON request:

{ "model-namespace" : "https://kiegroup.org/dmn/_60B01F4D-E407-43F7-848E-258723B5FAC8", "model-name" : "Traffic Violation", "dmn-context" : { "Driver" : { "Points" : 15 }, "Violation" : { "Type" : "speed", "Actual Speed" : 135, "Speed Limit" : 100 } } }Example XML request (JAXB format):

<?xml version="1.0" encoding="UTF-8" standalone="yes"?> <dmn-evaluation-context> <dmn-context xsi:type="jaxbListWrapper" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Violation"> <value xsi:type="jaxbListWrapper"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Type"> <value xsi:type="xs:string" xmlns:xs="http://www.w3.org/2001/XMLSchema">speed</value> </element> <element xsi:type="jaxbStringObjectPair" key="Speed Limit"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">100</value> </element> <element xsi:type="jaxbStringObjectPair" key="Actual Speed"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">135</value> </element> </value> </element> <element xsi:type="jaxbStringObjectPair" key="Driver"> <value xsi:type="jaxbListWrapper"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Points"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">15</value> </element> </value> </element> </dmn-context> </dmn-evaluation-context>Regardless of the request format, the request requires the following elements:

-

Model namespace

-

Model name

-

Context object containing input values

Example JSON response:

{ "type": "SUCCESS", "msg": "OK from container 'Traffic-Violation_1.0.0-SNAPSHOT'", "result": { "dmn-evaluation-result": { "messages": [], "model-namespace": "https://kiegroup.org/dmn/_7D8116DE-ADF5-4560-A116-FE1A2EAFFF48", "model-name": "Traffic Violation", "decision-name": [], "dmn-context": { "Violation": { "Type": "speed", "Speed Limit": 100, "Actual Speed": 135 }, "Should Driver be Suspended?": "Yes", "Driver": { "Points": 15 }, "Fine": { "Points": 7, "Amount": 1000 } }, "decision-results": { "_E1AF5AC2-E259-455C-96E4-596E30D3BC86": { "messages": [], "decision-id": "_E1AF5AC2-E259-455C-96E4-596E30D3BC86", "decision-name": "Should the Driver be Suspended?", "result": "Yes", "status": "SUCCEEDED" }, "_D7F02CE0-AF50-4505-AB80-C7D6DE257920": { "messages": [], "decision-id": "_D7F02CE0-AF50-4505-AB80-C7D6DE257920", "decision-name": "Fine", "result": { "Points": 7, "Amount": 1000 }, "status": "SUCCEEDED" } } } } }Example XML (JAXB format) response:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?> <response type="SUCCESS" msg="OK from container 'Traffic_1.0.0-SNAPSHOT'"> <dmn-evaluation-result> <model-namespace>https://kiegroup.org/dmn/_A4BCA8B8-CF08-433F-93B2-A2598F19ECFF</model-namespace> <model-name>Traffic Violation</model-name> <dmn-context xsi:type="jaxbListWrapper" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Violation"> <value xsi:type="jaxbListWrapper"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Type"> <value xsi:type="xs:string" xmlns:xs="http://www.w3.org/2001/XMLSchema">speed</value> </element> <element xsi:type="jaxbStringObjectPair" key="Speed Limit"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">100</value> </element> <element xsi:type="jaxbStringObjectPair" key="Actual Speed"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">135</value> </element> </value> </element> <element xsi:type="jaxbStringObjectPair" key="Driver"> <value xsi:type="jaxbListWrapper"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Points"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">15</value> </element> </value> </element> <element xsi:type="jaxbStringObjectPair" key="Fine"> <value xsi:type="jaxbListWrapper"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Points"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">7</value> </element> <element xsi:type="jaxbStringObjectPair" key="Amount"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">1000</value> </element> </value> </element> <element xsi:type="jaxbStringObjectPair" key="Should the driver be suspended?"> <value xsi:type="xs:string" xmlns:xs="http://www.w3.org/2001/XMLSchema">Yes</value> </element> </dmn-context> <messages/> <decisionResults> <entry> <key>_4055D956-1C47-479C-B3F4-BAEB61F1C929</key> <value> <decision-id>_4055D956-1C47-479C-B3F4-BAEB61F1C929</decision-id> <decision-name>Fine</decision-name> <result xsi:type="jaxbListWrapper" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"> <type>MAP</type> <element xsi:type="jaxbStringObjectPair" key="Points"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">7</value> </element> <element xsi:type="jaxbStringObjectPair" key="Amount"> <value xsi:type="xs:decimal" xmlns:xs="http://www.w3.org/2001/XMLSchema">1000</value> </element> </result> <messages/> <status>SUCCEEDED</status> </value> </entry> <entry> <key>_8A408366-D8E9-4626-ABF3-5F69AA01F880</key> <value> <decision-id>_8A408366-D8E9-4626-ABF3-5F69AA01F880</decision-id> <decision-name>Should the driver be suspended?</decision-name> <result xsi:type="xs:string" xmlns:xs="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">Yes</result> <messages/> <status>SUCCEEDED</status> </value> </entry> </decisionResults> </dmn-evaluation-result> </response> -

KIE

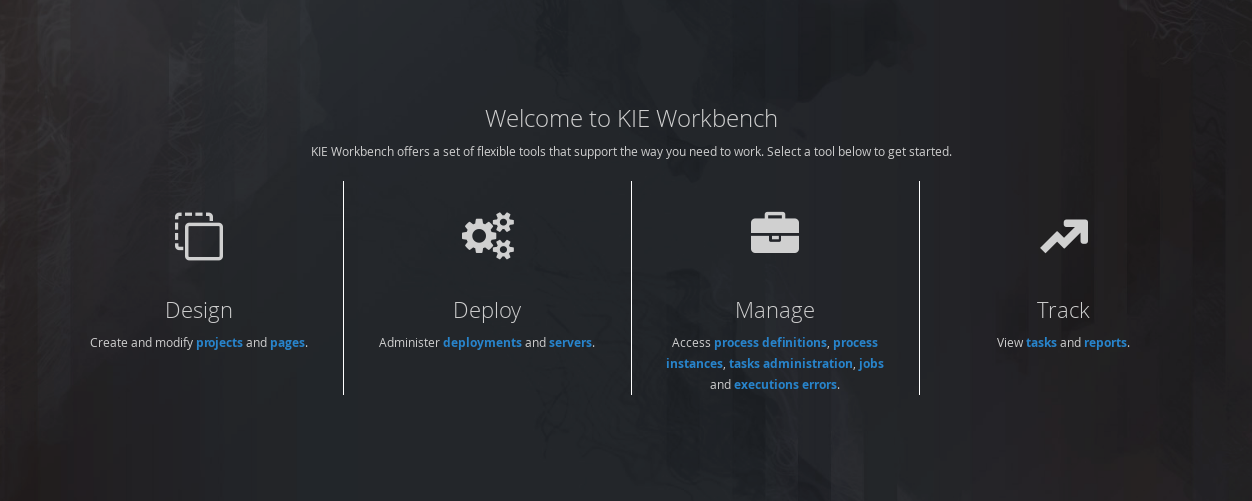

KIE is the shared core for Drools and jBPM. It provides a unified methodology and programming model for building, deploying and utilizing resources.

3. KIE

3.1. Overview

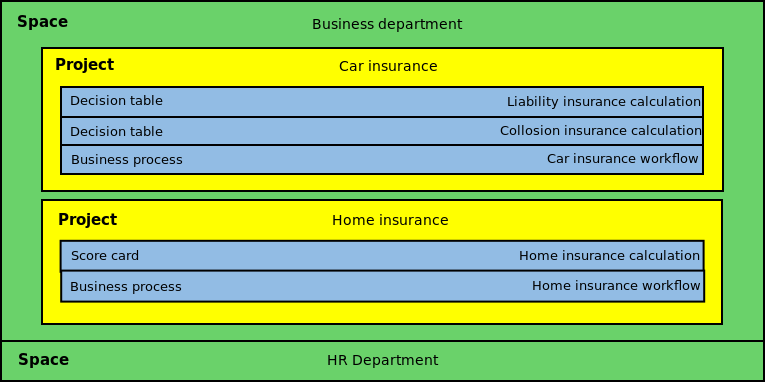

3.1.1. Anatomy of Projects

The process of researching an integration knowledge solution for Drools and jBPM has simply used the "kiegroup" group name. This name permeates GitHub accounts and Maven POMs. As scopes broadened and new projects were spun KIE, an acronym for Knowledge Is Everything, was chosen as the new group name. The KIE name is also used for the shared aspects of the system; such as the unified build, deploy and utilization.

KIE currently consists of the following subprojects:

OptaPlanner, a local search and optimization tool, has been spun off from Drools Planner and is now a top level project with Drools and jBPM. This was a natural evolution as Optaplanner, while having strong Drools integration, has long been independent of Drools.

From the Polymita acquisition, along with other things, comes the powerful Dashboard Builder which provides powerful reporting capabilities. Dashboard Builder is currently a temporary name and after the 6.0 release a new name will be chosen. Dashboard Builder is completely independent of Drools and jBPM and will be used by many projects at JBoss, and hopefully outside of JBoss :)

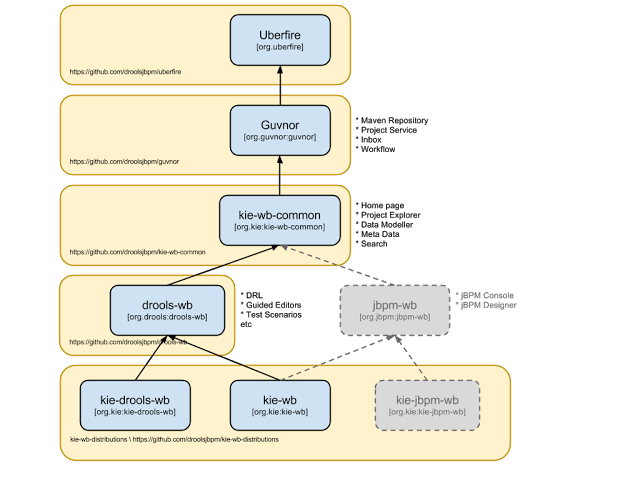

UberFire is the new base Business Central project, spun off from the ground up rewrite. UberFire provides Eclipse-like workbench capabilities, with panels and pages from plugins. The project is independent of Drools and jBPM and anyone can use it as a basis of building flexible and powerful workbenches like Business Central. UberFire will be used for console and workbench development throughout JBoss.

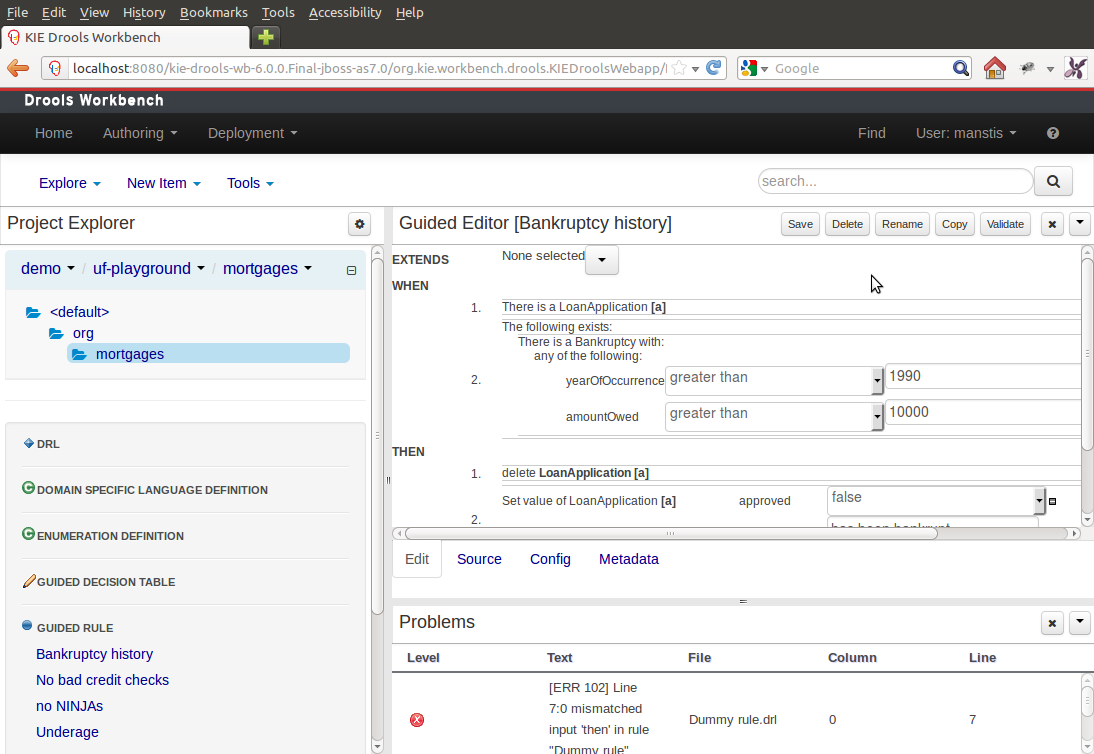

It was determined that the Guvnor brand leaked too much from its intended role; such as the authoring metaphors, like Decision Tables, being considered Guvnor components instead of Drools components. This wasn’t helped by the monolithic projects structure used in 5.x for Guvnor. In 6.0 Guvnor’s focus has been narrowed to encapsulate the set of UberFire plugins that provide the basis for building a web based IDE. Such as Maven integration for building and deploying, management of Maven repositories and activity notifications via inboxes. Drools and jBPM build Business Central distributions using Uberfire as the base and including a set of plugins, such as Guvnor, along with their own plugins for things like decision tables, guided editors, BPMN2 designer, human tasks. Business Central is called business-central.

KIE-WB is the uber workbench that combined all the Guvnor, Drools and jBPM plugins. The jBPM-WB is ghosted out, as it doesn’t actually exist, being made redundant by KIE-WB.

3.1.2. Lifecycles

The different aspects, or life cycles, of working with KIE system, whether it’s Drools or jBPM, can typically be broken down into the following:

-

Author

-

Authoring of knowledge using a UI metaphor, such as: DRL, BPMN2, decision table, class models.

-

-

Build

-

Builds the authored knowledge into deployable units.

-

For KIE this unit is a JAR.

-

-

Test

-

Test KIE knowledge before it’s deployed to the application.

-

-

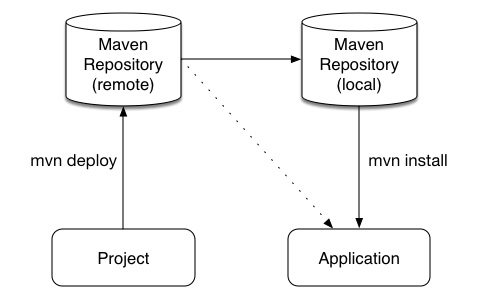

Deploy

-

Deploys the unit to a location where applications may utilize (consume) them.

-

KIE uses Maven style repository.

-

-

Utilize

-

The loading of a JAR to provide a KIE session (KieSession), for which the application can interact with.

-

KIE exposes the JAR at runtime via a KIE container (KieContainer).

-

KieSessions, for the runtime’s to interact with, are created from the KieContainer.

-

-

Run

-

System interaction with the KieSession, via API.

-

-

Work

-

User interaction with the KieSession, via command line or UI.

-

-

Manage

-

Manage any KieSession or KieContainer.

-

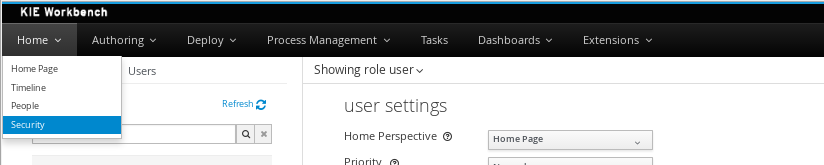

3.1.3. Installation environment options for Drools

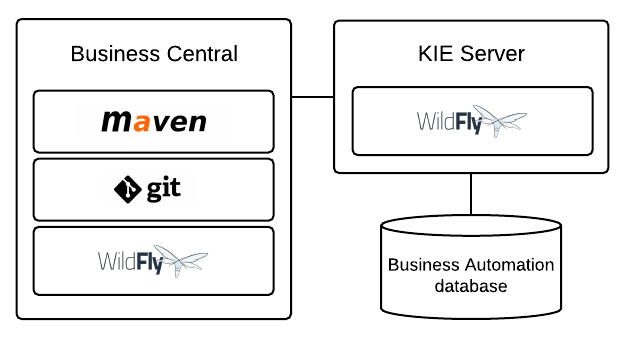

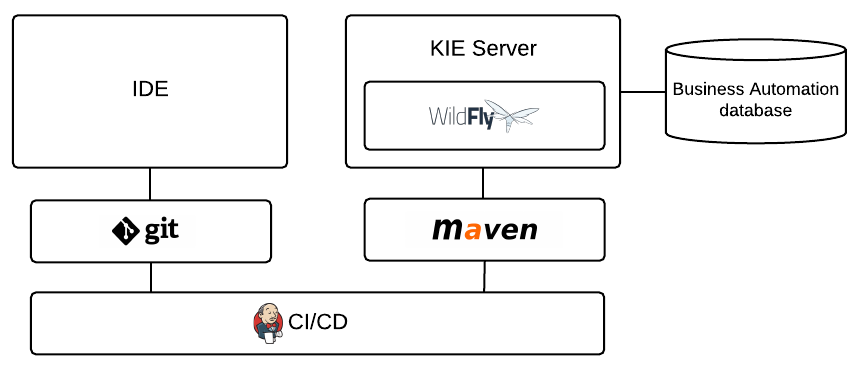

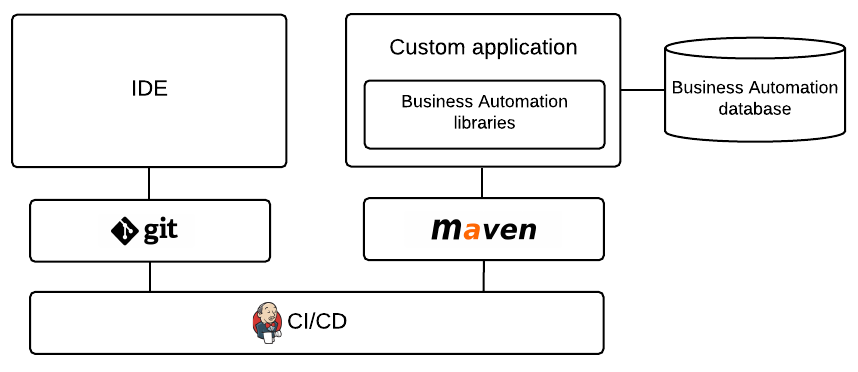

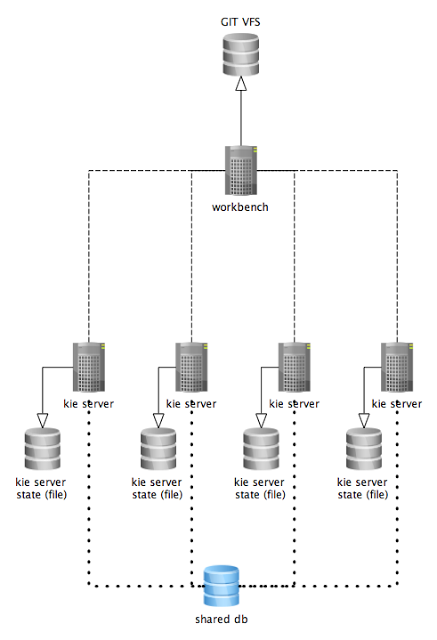

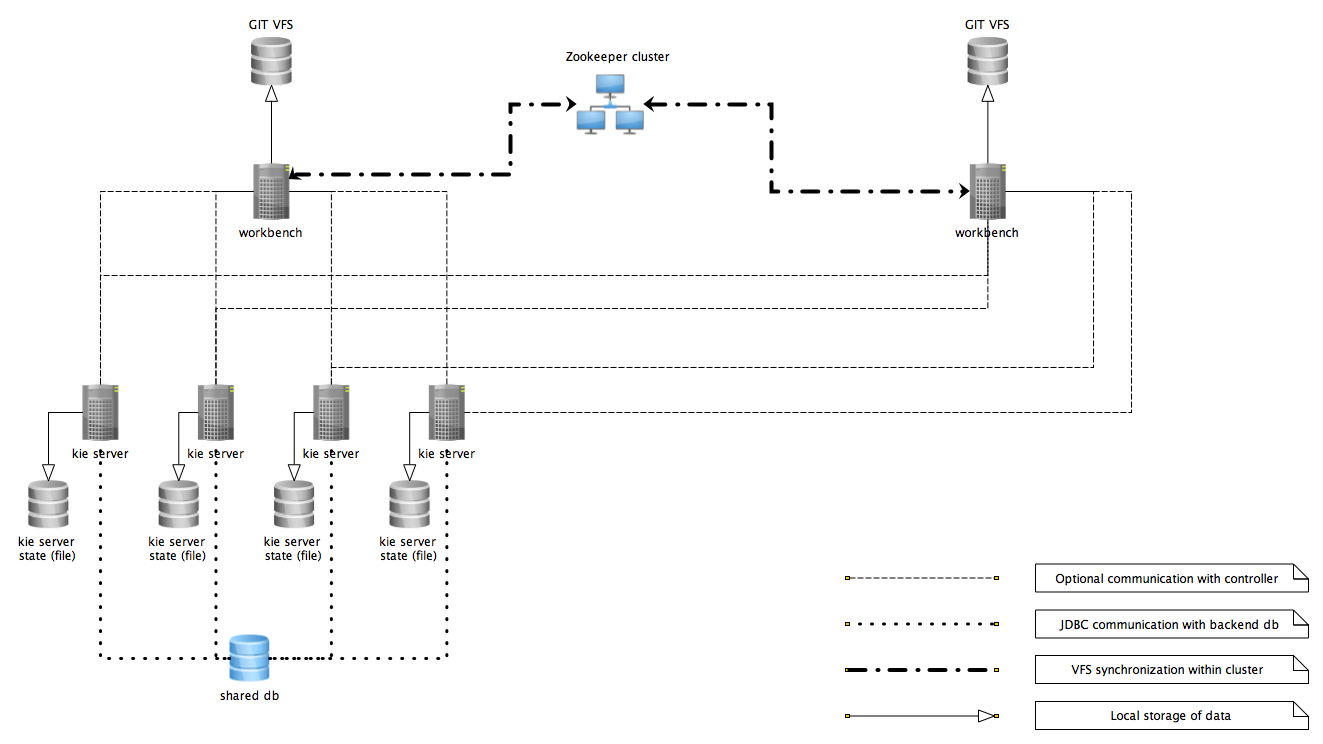

With Red Hat Process Automation Manager, you can set up a development environment to develop business applications, a runtime environment to run those applications to support decisions, or both.

-

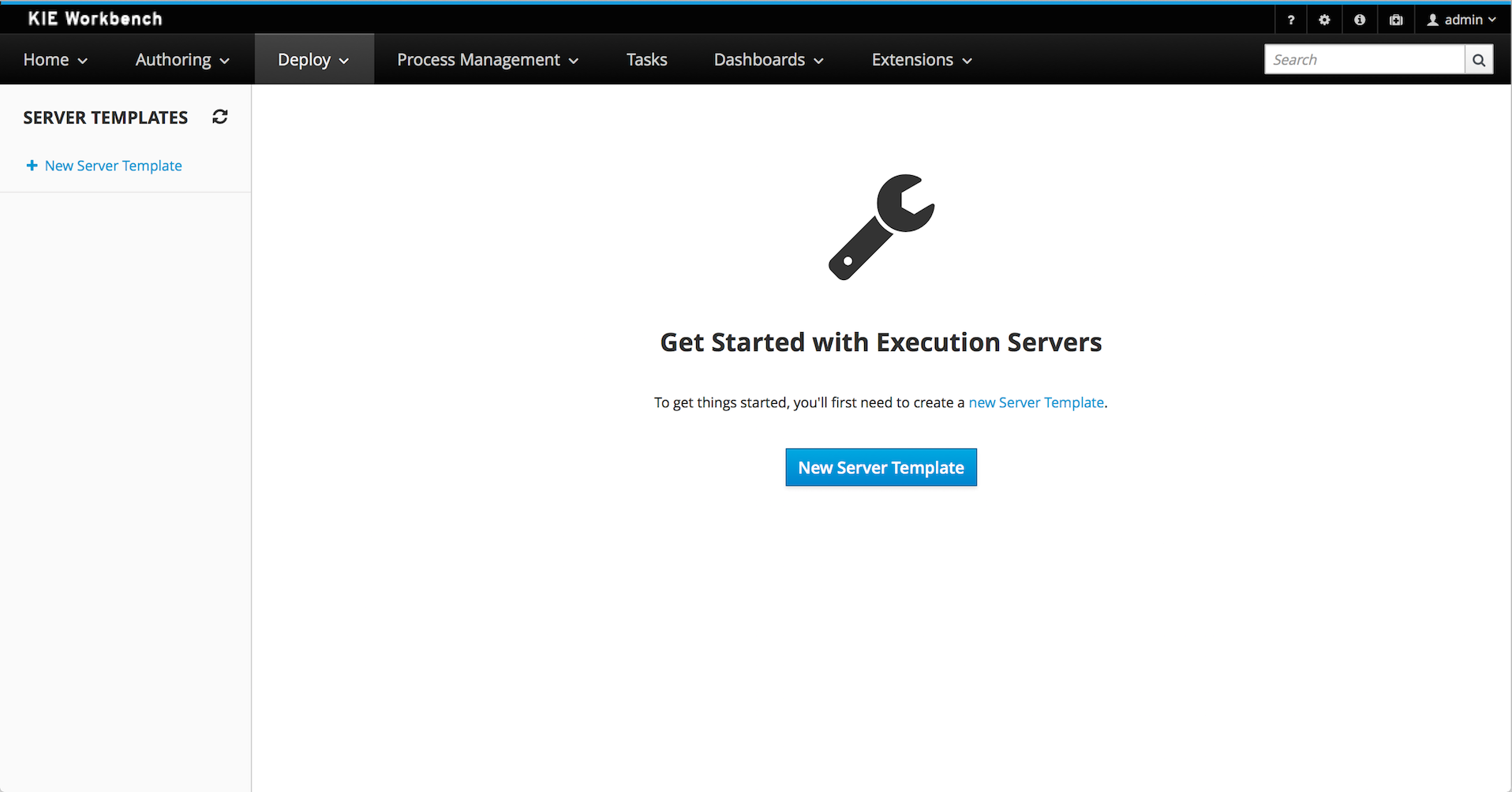

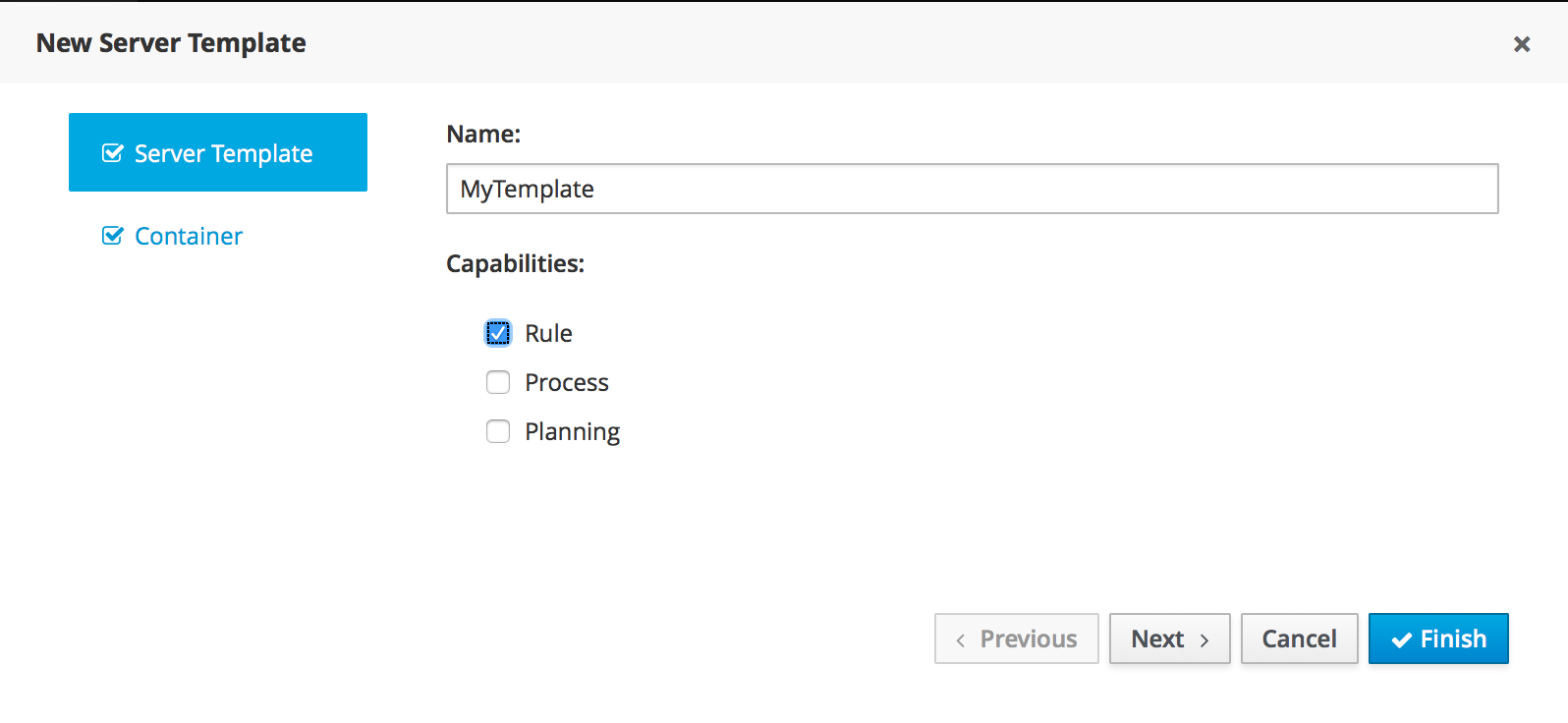

Development environment: Typically consists of one Business Central installation and at least one KIE Server installation. You can use Business Central to design decisions and other artifacts, and you can use KIE Server to execute and test the artifacts that you created.

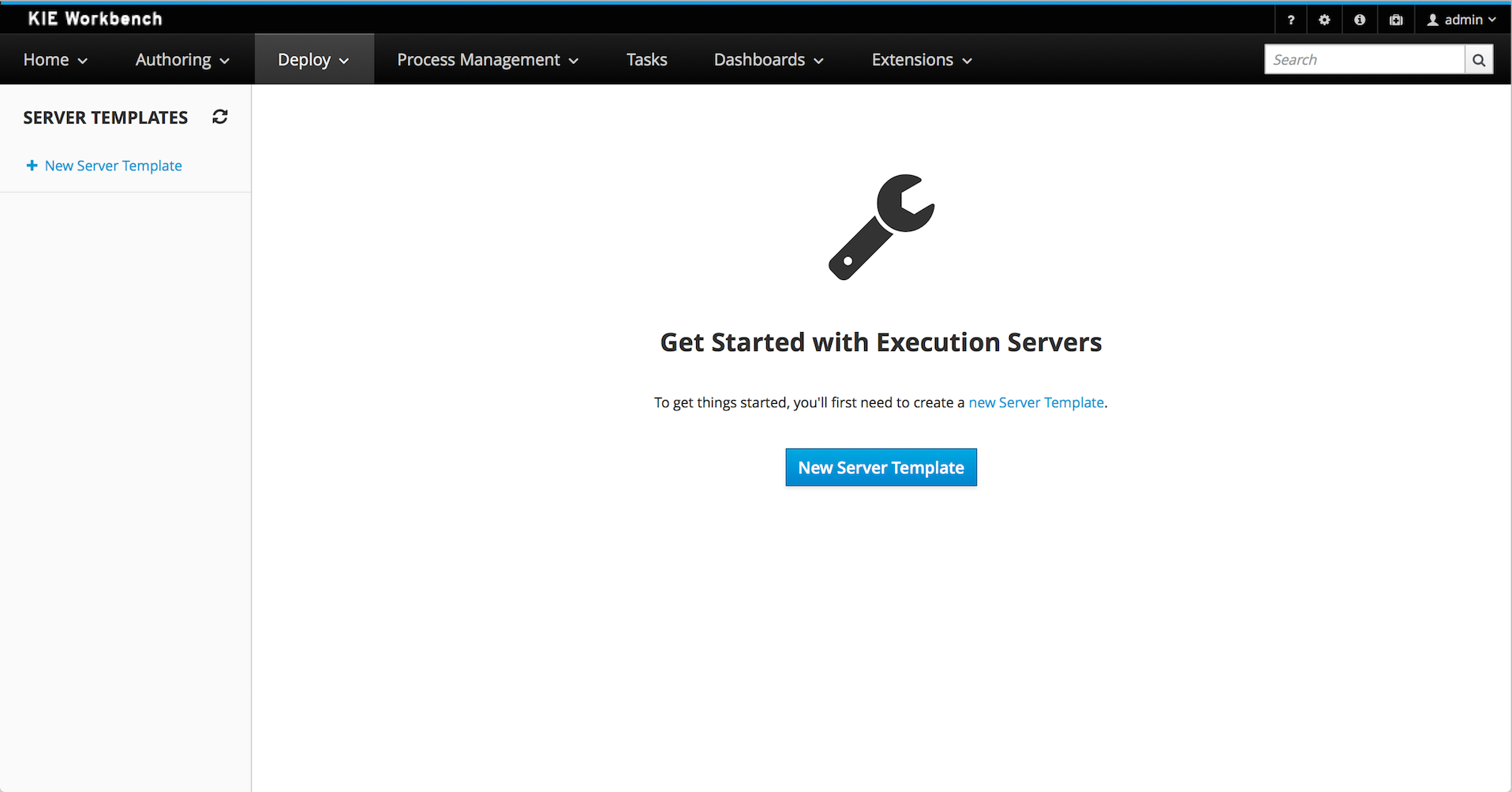

-

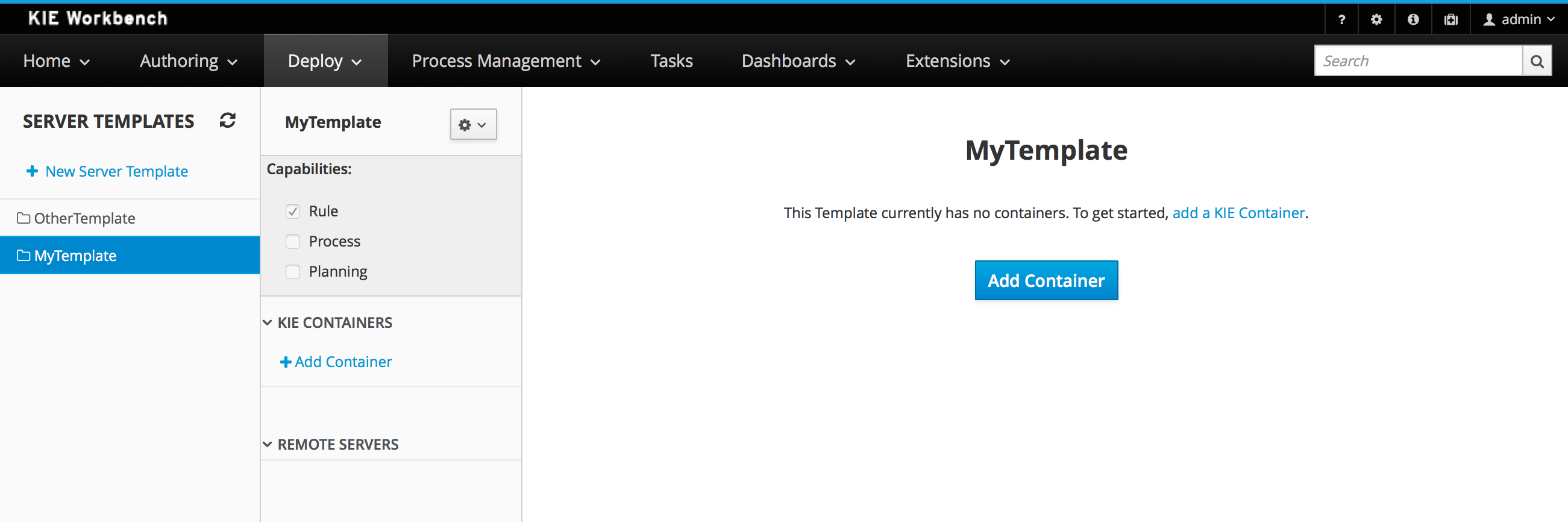

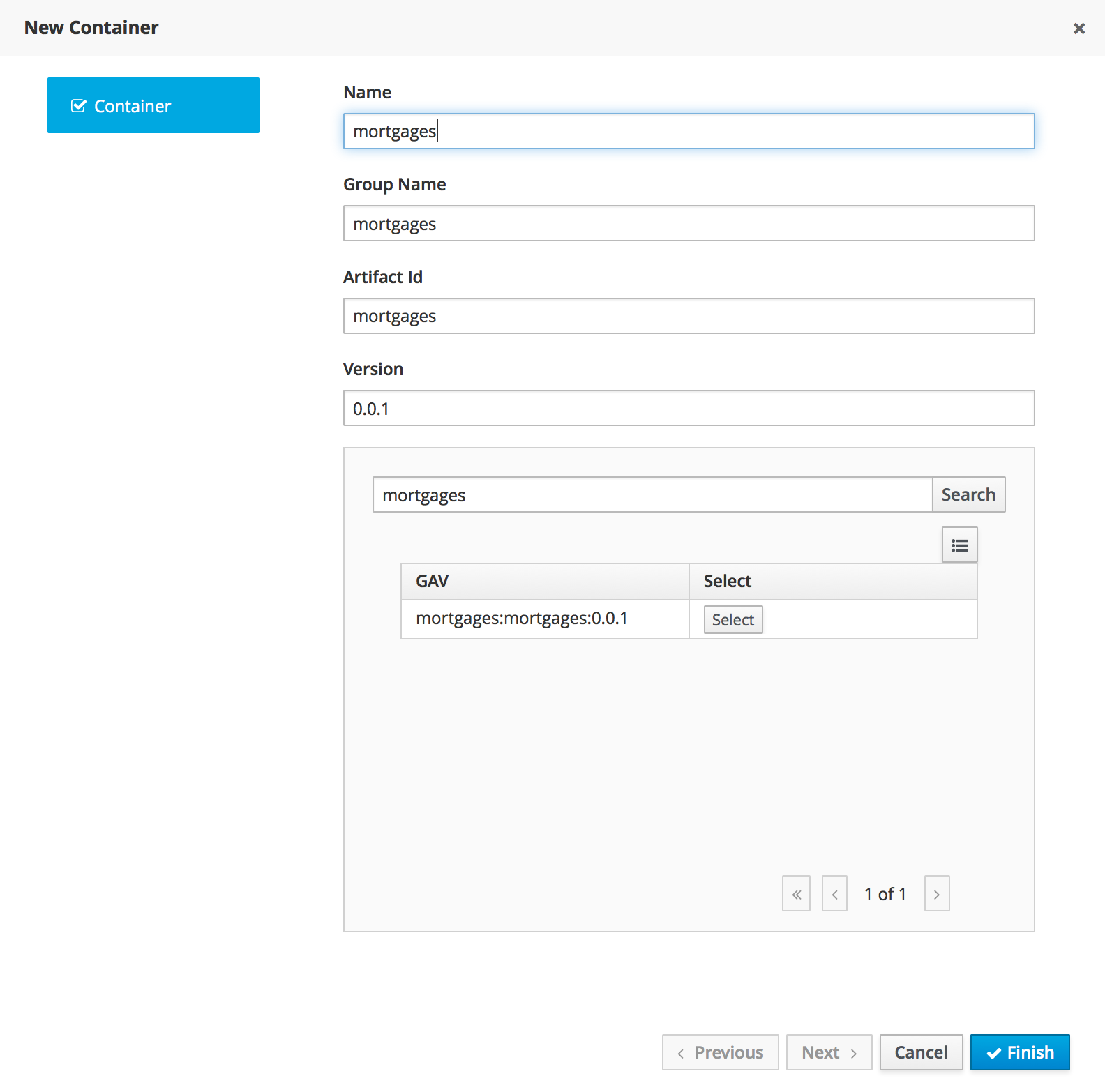

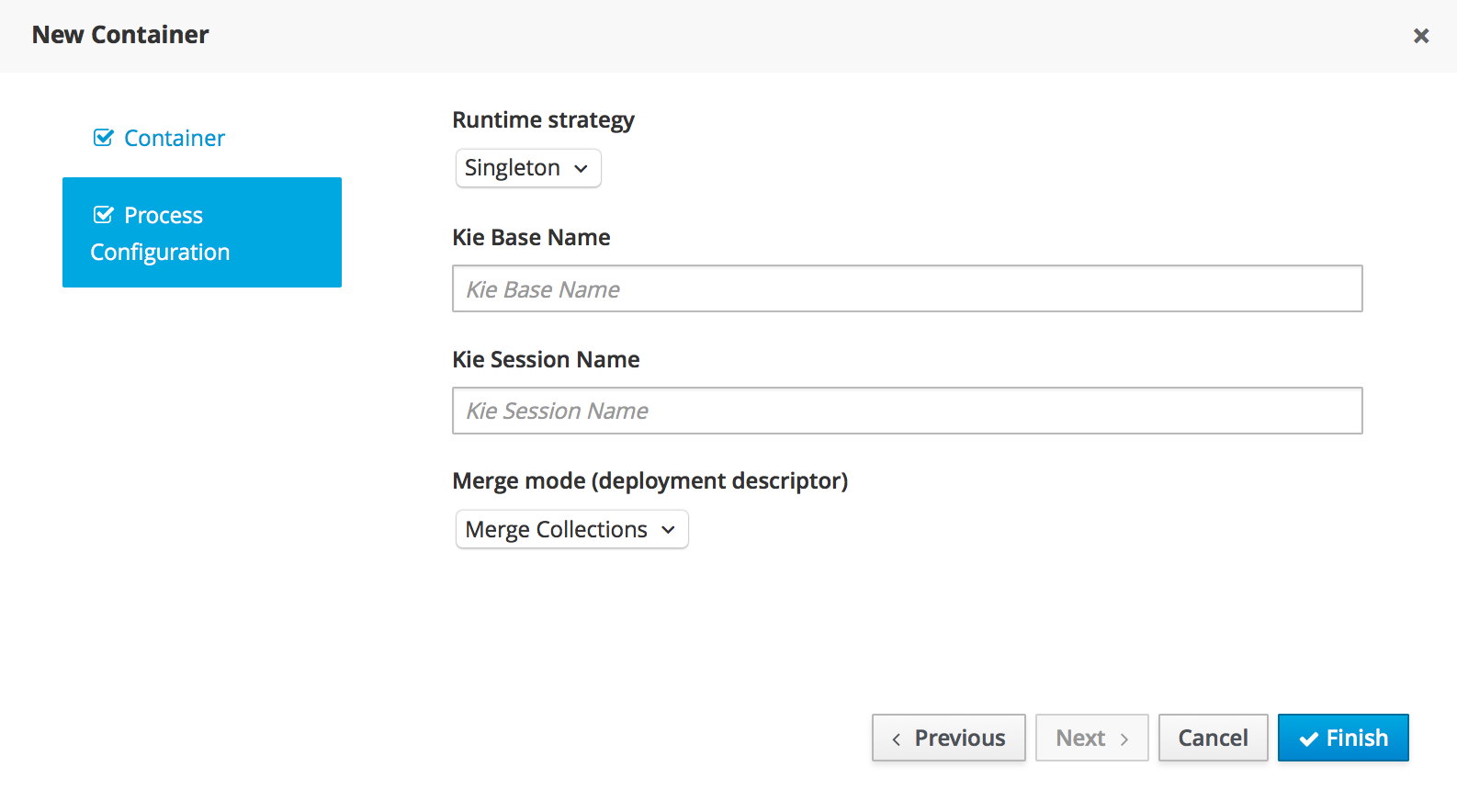

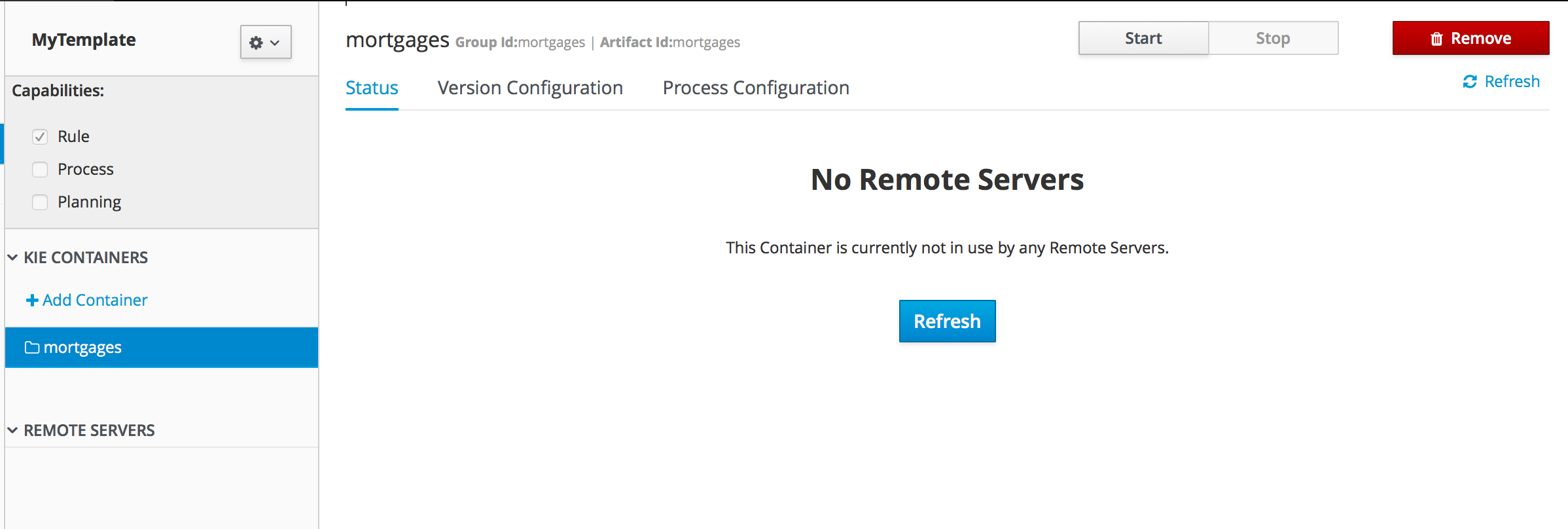

Runtime environment: Consists of one or more KIE Server instances with or without Business Central. Business Central has an embedded Drools controller. If you install Business Central, use the Menu → Deploy → Execution servers page to create and maintain containers. If you want to automate KIE Server management without Business Central, you can use the headless Drools controller.

You can also cluster both development and runtime environments. A clustered development or runtime environment consists of a unified group or cluster of two or more servers. The primary benefit of clustering Red Hat Process Automation Manager development environments is high availability and enhanced collaboration, while the primary benefit of clustering Red Hat Process Automation Manager runtime environments is high availability and load balancing. High availability decreases the chance of data loss when a single server fails. When a server fails, another server fills the gap by providing a copy of the data that was on the failed server. When the failed server comes online again, it resumes its place in the cluster.

| Clustering of the runtime environment is currently supported on Red Hat JBoss EAP 7.4 only. |

3.1.4. Decision-authoring assets in Drools

Drools supports several assets that you can use to define business decisions for your decision service. Each decision-authoring asset has different advantages, and you might prefer to use one or a combination of multiple assets depending on your goals and needs.

The following table highlights the main decision-authoring assets supported in Drools projects to help you decide or confirm the best method for defining decisions in your decision service.

| Asset | Highlights | Authoring tools | Documentation |

|---|---|---|---|

Decision Model and Notation (DMN) models |

|