Drools has a "native" rule language.

This format is very light in terms of punctuation, and supports

natural and domain specific languages via "expanders" that allow the

language to morph to your problem domain. This chapter is mostly concerted

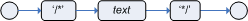

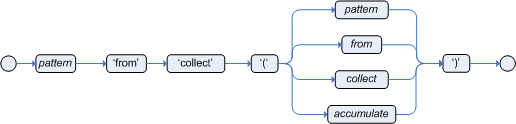

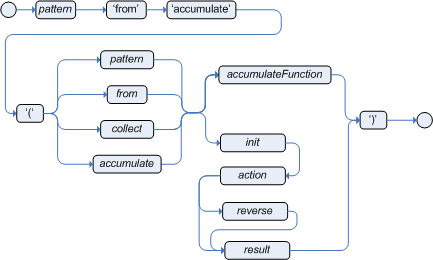

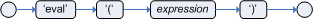

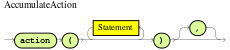

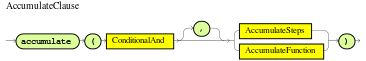

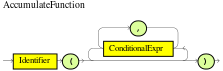

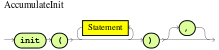

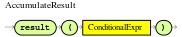

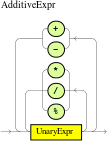

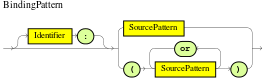

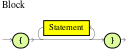

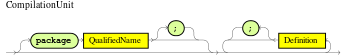

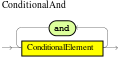

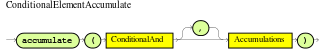

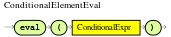

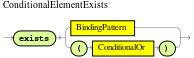

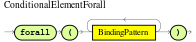

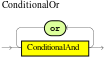

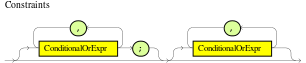

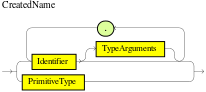

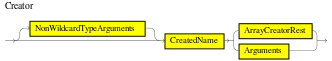

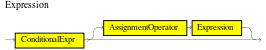

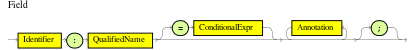

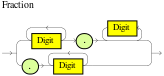

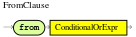

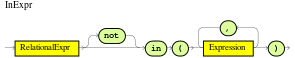

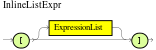

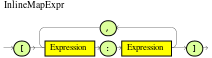

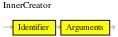

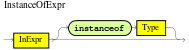

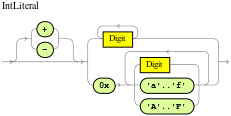

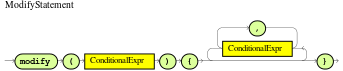

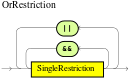

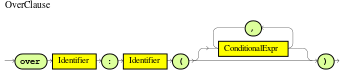

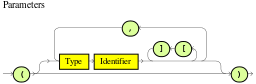

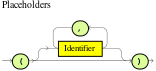

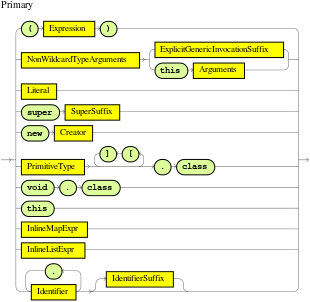

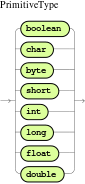

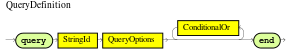

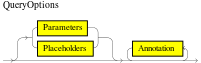

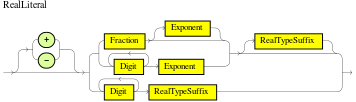

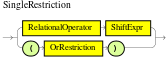

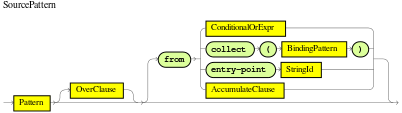

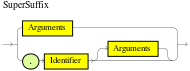

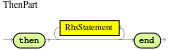

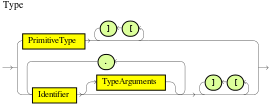

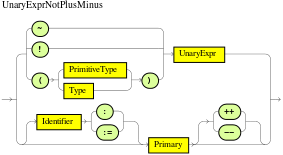

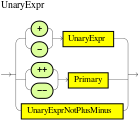

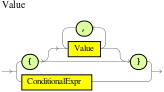

with this native rule format. The diagrams used to present the syntax are

known as "railroad" diagrams, and they are basically flow charts for the

language terms. The

technically very keen may also refer to DRL.g which is

the Antlr3

grammar for the rule language. If you use the Rule Workbench, a lot of the

rule structure is done for you with content assistance, for example, type

"ru" and press ctrl+space, and it will build the rule structure for

you.

A rule file is typically a file with a .drl extension. In a DRL file you can have multiple rules, queries and functions, as well as some resource declarations like imports, globals and attributes that are assigned and used by your rules and queries. However, you are also able to spread your rules across multiple rule files (in that case, the extension .rule is suggested, but not required) - spreading rules across files can help with managing large numbers of rules. A DRL file is simply a text file.

The overall structure of a rule file is:

The order in which the elements are declared is not important, except for the package name that, if declared, must be the first element in the rules file. All elements are optional, so you will use only those you need. We will discuss each of them in the following sections.

For the impatient, just as an early view, a rule has the following rough structure:

rule "name"

attributes

when

LHS

then

RHS

end

It's really that simple. Mostly punctuation is not needed, even the double quotes for "name" are optional, as are newlines. Attributes are simple (always optional) hints to how the rule should behave. LHS is the conditional parts of the rule, which follows a certain syntax which is covered below. RHS is basically a block that allows dialect specific semantic code to be executed.

It is important to note that white space is not important, except in the case of domain specific languages, where lines are processed one by one and spaces may be significant to the domain language.

Drools 5 introduces the concept of hard and soft keywords.

Hard keywords are reserved, you cannot use any hard keyword when naming your domain objects, properties, methods, functions and other elements that are used in the rule text.

Here is the list of hard keywords that must be avoided as identifiers when writing rules:

truefalsenull

Soft keywords are just recognized in their context, enabling you to use these words in any other place if you wish, although, it is still recommended to avoid them, to avoid confusions, if possible. Here is a list of the soft keywords:

lock-on-activedate-effectivedate-expiresno-loopauto-focusactivation-groupagenda-groupruleflow-groupentry-pointdurationpackageimportdialectsalienceenabledattributesruleextendwhen

then

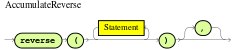

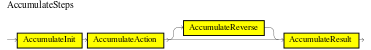

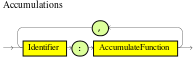

templatequerydeclarefunctionglobalevalnotinorandexistsforallaccumulate

collect

from

actionreverseresultendover

init

Of course, you can have these (hard and soft) words as part of a method name in camel case, like notSomething() or accumulateSomething() - there are no issues with that scenario.

Although the 3 hard keywords above are unlikely to be used in your existing domain models, if you absolutely need to use them as identifiers instead of keywords, the DRL language provides the ability to escape hard keywords on rule text. To escape a word, simply enclose it in grave accents, like this:

Holiday( `true` == "yes" ) // please note that Drools will resolve that reference to the method Holiday.isTrue()Comments are sections of text that are ignored by the rule engine. They are stripped out when they are encountered, except inside semantic code blocks, like the RHS of a rule.

To create single line comments, you can use '//'. The parser will ignore anything in the line after the comment symbol. Example:

rule "Testing Comments"

when

// this is a single line comment

eval( true ) // this is a comment in the same line of a pattern

then

// this is a comment inside a semantic code block

end

Warning

'#' for comments has been removed.

Multi-line comments are used to comment blocks of text, both in and outside semantic code blocks. Example:

rule "Test Multi-line Comments"

when

/* this is a multi-line comment

in the left hand side of a rule */

eval( true )

then

/* and this is a multi-line comment

in the right hand side of a rule */

end Drools 5 introduces standardized error messages. This standardization aims to help users to find and resolve problems in a easier and faster way. In this section you will learn how to identify and interpret those error messages, and you will also receive some tips on how to solve the problems associated with them.

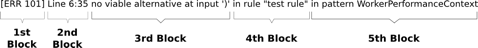

The standardization includes the error message format and to better explain this format, let's use the following example:

1st Block: This area identifies the error code.

2nd Block: Line and column information.

3rd Block: Some text describing the problem.

4th Block: This is the first context. Usually indicates the rule, function, template or query where the error occurred. This block is not mandatory.

5th Block: Identifies the pattern where the error occurred. This block is not mandatory.

Indicates the most common errors, where the parser came to a decision point but couldn't identify an alternative. Here are some examples:

The above example generates this message:

[ERR 101] Line 4:4 no viable alternative at input 'exits' in rule one

At first glance this seems to be valid syntax, but it is not (exits != exists). Let's take a look at next example:

Example 8.3.

1: package org.drools.examples;

2: rule

3: when

4: Object()

5: then

6: System.out.println("A RHS");

7: end

Now the above code generates this message:

[ERR 101] Line 3:2 no viable alternative at input 'WHEN'

This message means that the parser encountered the token WHEN, actually a hard keyword, but it's in the wrong place since the the rule name is missing.

The error "no viable alternative" also occurs when you make a simple lexical mistake. Here is a sample of a lexical problem:

The above code misses to close the quotes and because of this the parser generates this error message:

[ERR 101] Line 0:-1 no viable alternative at input '<eof>' in rule simple_rule in pattern Student

Note

Usually the Line and Column information are accurate, but in some cases (like unclosed quotes), the parser generates a 0:-1 position. In this case you should check whether you didn't forget to close quotes, apostrophes or parentheses.

This error indicates that the parser was looking for a particular symbol that it didn't find at the current input position. Here are some samples:

The above example generates this message:

[ERR 102] Line 0:-1 mismatched input '<eof>' expecting ')' in rule simple_rule in pattern Bar

To fix this problem, it is necessary to complete the rule statement.

Note

Usually when you get a 0:-1 position, it means that parser reached the end of source.

The following code generates more than one error message:

Example 8.6.

1: package org.drools.examples;

2:

3: rule "Avoid NPE on wrong syntax"

4: when

5: not( Cheese( ( type == "stilton", price == 10 ) || ( type == "brie", price == 15 ) ) from $cheeseList )

6: then

7: System.out.println("OK");

8: end

These are the errors associated with this source:

[ERR 102] Line 5:36 mismatched input ',' expecting ')' in rule "Avoid NPE on wrong syntax" in pattern Cheese

[ERR 101] Line 5:57 no viable alternative at input 'type' in rule "Avoid NPE on wrong syntax"

[ERR 102] Line 5:106 mismatched input ')' expecting 'then' in rule "Avoid NPE on wrong syntax"

Note that the second problem is related to the first. To fix it, just replace the commas (',') by AND operator ('&&').

Note

In some situations you can get more than one error message. Try to fix one by one, starting at the first one. Some error messages are generated merely as consequences of other errors.

A validating semantic predicate evaluated to false. Usually these semantic predicates are used to identify soft keywords. This sample shows exactly this situation:

Example 8.7.

1: package nesting;

2: dialect "mvel"

3:

4: import org.drools.compiler.Person

5: import org.drools.compiler.Address

6:

7: fdsfdsfds

8:

9: rule "test something"

10: when

11: p: Person( name=="Michael" )

12: then

13: p.name = "other";

14: System.out.println(p.name);

15: end

With this sample, we get this error message:

[ERR 103] Line 7:0 rule 'rule_key' failed predicate: {(validateIdentifierKey(DroolsSoftKeywords.RULE))}? in rule

The fdsfdsfds text is invalid and

the parser couldn't identify it as the soft keyword

rule.

Note

This error is very similar to 102: Mismatched input, but usually involves soft keywords.

This error is associated with the eval clause,

where its expression may not be terminated with a semicolon. Check this

example:

Due to the trailing semicolon within eval, we get this error message:

[ERR 104] Line 3:4 trailing semi-colon not allowed in rule simple_rule

This problem is simple to fix: just remove the semi-colon.

The recognizer came to a subrule in the grammar that must match an alternative at least once, but the subrule did not match anything. Simply put: the parser has entered a branch from where there is no way out. This example illustrates it:

This is the message associated to the above sample:

[ERR 105] Line 2:2 required (...)+ loop did not match anything at input 'aa' in template test_error

To fix this problem it is necessary to remove the numeric value as it is neither a valid data type which might begin a new template slot nor a possible start for any other rule file construct.

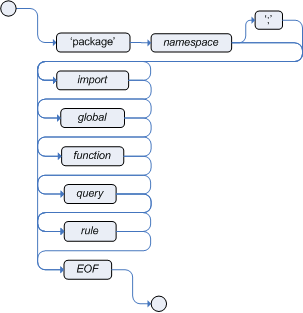

A package is a collection of rules and other related constructs, such as imports and globals. The package members are typically related to each other - perhaps HR rules, for instance. A package represents a namespace, which ideally is kept unique for a given grouping of rules. The package name itself is the namespace, and is not related to files or folders in any way.

It is possible to assemble rules from multiple rule sources, and have one top level package configuration that all the rules are kept under (when the rules are assembled). Although, it is not possible to merge into the same package resources declared under different names. A single Rulebase may, however, contain multiple packages built on it. A common structure is to have all the rules for a package in the same file as the package declaration (so that is it entirely self-contained).

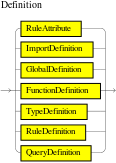

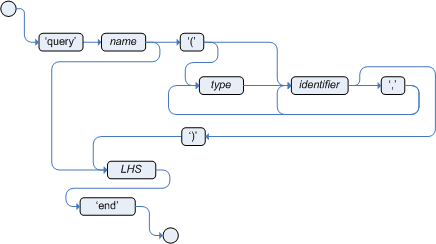

The following railroad diagram shows all the components that may make

up a package. Note that a package must have a namespace and be declared

using standard Java conventions for package names; i.e., no spaces, unlike

rule names which allow spaces. In terms of the order of elements, they can

appear in any order in the rule file, with the exception of the package

statement, which must be at the top of the file. In all cases, the semicolons are

optional.

Notice that any rule attribute (as described the section Rule Attributes) may also be written at package level, superseding the attribute's default value. The modified default may still be replaced by an attribute setting within a rule.

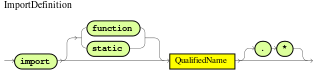

Import statements work like import statements in Java. You need to

specify the fully qualified paths and type names for any objects you want

to use in the rules. Drools automatically imports classes from the

Java package of the same name, and also from the package

java.lang.

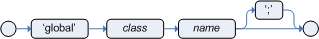

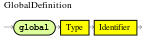

With global you define global variables. They are used to make

application objects available to the rules. Typically, they are used

to provide data or services that the rules use, especially application

services used in rule consequences, and to return data from the rules,

like logs or values added in rule consequences, or for the rules to

interact with the application, doing callbacks. Globals are not

inserted into the Working Memory, and therefore a global should never be

used to establish conditions in rules except when it has a

constant immutable value. The engine cannot be notified about value

changes of globals and does not track their changes. Incorrect use

of globals in constraints may yield surprising results - surprising

in a bad way.

If multiple packages declare globals with the same identifier they must be of the same type and all of them will reference the same global value.

In order to use globals you must:

Declare your global variable in your rules file and use it in rules. Example:

global java.util.List myGlobalList; rule "Using a global" when eval( true ) then myGlobalList.add( "Hello World" ); endSet the global value on your working memory. It is a best practice to set all global values before asserting any fact to the working memory. Example:

List list = new ArrayList(); KieSession kieSession = kiebase.newKieSession(); kieSession.setGlobal( "myGlobalList", list );

Note that these are just named instances of objects that you pass in

from your application to the working memory. This means you can pass in

any object you want: you could pass in a service locator, or perhaps a

service itself. With the new from element it is now common to pass a

Hibernate session as a global, to allow from to pull data from a named

Hibernate query.

One example may be an instance of a Email service. In your integration code that is calling the rule engine, you obtain your emailService object, and then set it in the working memory. In the DRL, you declare that you have a global of type EmailService, and give it the name "email". Then in your rule consequences, you can use things like email.sendSMS(number, message).

Globals are not designed to share data between rules and they should never be used for that purpose. Rules always reason and react to the working memory state, so if you want to pass data from rule to rule, assert the data as facts into the working memory.

Care must be taken when changing data held by globals because the rule engine is not aware of those changes, hence cannot react to them.

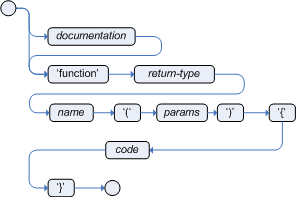

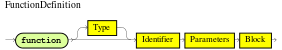

Functions are a way to put semantic code in your rule source file, as

opposed to in normal Java classes. They can't do anything more than what you

can do with helper classes. (In fact, the compiler generates the helper class

for you behind the scenes.) The main advantage of using functions in a rule

is that you can keep the logic all in one place, and you can change the

functions as needed (which can be a good or a bad thing). Functions are most

useful for invoking actions on the consequence (then) part of a rule,

especially if that particular action is used over and over again, perhaps

with only differing parameters for each rule.

A typical function declaration looks like:

function String hello(String name) {

return "Hello "+name+"!";

}

Note that the function keyword is used, even though its not really

part of Java. Parameters to the function are defined as for a method, and

you don't have to have parameters if they are not needed. The return type

is defined just like in a regular method.

Alternatively, you could use a static method in a helper class,

e.g., Foo.hello(). Drools supports the use of

function imports, so all you would need to do is:

import function my.package.Foo.helloIrrespective of the way the function is defined or imported, you use a function by calling it by its name, in the consequence or inside a semantic code block. Example:

rule "using a static function"

when

eval( true )

then

System.out.println( hello( "Bob" ) );

end

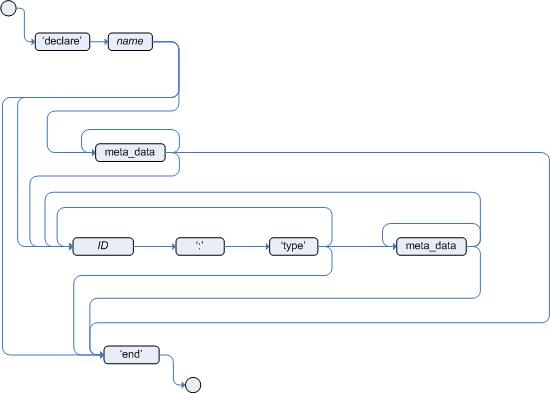

Type declarations have two main goals in the rules engine: to allow the declaration of new types, and to allow the declaration of metadata for types.

Declaring new types: Drools works out of the box with plain Java objects as facts. Sometimes, however, users may want to define the model directly to the rules engine, without worrying about creating models in a lower level language like Java. At other times, there is a domain model already built, but eventually the user wants or needs to complement this model with additional entities that are used mainly during the reasoning process.

Declaring metadata: facts may have meta information associated to them. Examples of meta information include any kind of data that is not represented by the fact attributes and is consistent among all instances of that fact type. This meta information may be queried at runtime by the engine and used in the reasoning process.

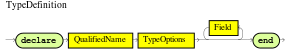

To declare a new type, all you need to do is use the keyword

declare, followed by the list of fields, and the

keyword end. A new fact must have a list of fields,

otherwise the engine will look for an existing fact class in the classpath

and raise an error if not found.

Example 8.10. Declaring a new fact type: Address

declare Address

number : int

streetName : String

city : String

end

The previous example declares a new fact type called

Address. This fact type will have three attributes:

number, streetName and city. Each

attribute has a type that can be any valid Java type, including any other

class created by the user or even other fact types previously

declared.

For instance, we may want to declare another fact type

Person:

Example 8.11. declaring a new fact type: Person

declare Person

name : String

dateOfBirth : java.util.Date

address : Address

end

As we can see on the previous example, dateOfBirth is

of type java.util.Date, from the Java API, while

address is of the previously defined fact type

Address.

You may avoid having to write the fully qualified name of a class

every time you write it by using the import clause, as

previously discussed.

Example 8.12. Avoiding the need to use fully qualified class names by using import

import java.util.Date

declare Person

name : String

dateOfBirth : Date

address : Address

endWhen you declare a new fact type, Drools will, at compile time, generate bytecode that implements a Java class representing the fact type. The generated Java class will be a one-to-one Java Bean mapping of the type definition. So, for the previous example, the generated Java class would be:

Example 8.13. generated Java class for the previous Person fact type declaration

public class Person implements Serializable {

private String name;

private java.util.Date dateOfBirth;

private Address address;

// empty constructor

public Person() {...}

// constructor with all fields

public Person( String name, Date dateOfBirth, Address address ) {...}

// if keys are defined, constructor with keys

public Person( ...keys... ) {...}

// getters and setters

// equals/hashCode

// toString

}

Since the generated class is a simple Java class, it can be used transparently in the rules, like any other fact.

Example 8.14. Using the declared types in rules

rule "Using a declared Type"

when

$p : Person( name == "Bob" )

then

// Insert Mark, who is Bob's mate.

Person mark = new Person();

mark.setName("Mark");

insert( mark );

end

DRL also supports the declaration of enumerative types. Such type declarations require the additional keyword enum, followed by a comma separated list of admissible values terminated by a semicolon.

The compiler will generate a valid Java enum, with static methods valueOf() and values(), as well as instance methods ordinal(), compareTo() and name().

Complex enums are also partially supported, declaring the internal fields similarly to a regular type declaration. Notice that as of version 6.x, enum fields do NOT support other declared types or enums

Example 8.16.

declare enum DaysOfWeek

SUN("Sunday"),MON("Monday"),TUE("Tuesday"),WED("Wednesday"),THU("Thursday"),FRI("Friday"),SAT("Saturday");

fullName : String

end

Enumeratives can then be used in rules

Example 8.17. Using declarative enumerations in rules

rule "Using a declared Enum"

when

$p : Employee( dayOff == DaysOfWeek.MONDAY )

then

...

end

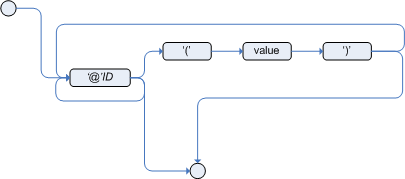

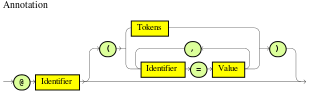

Metadata may be assigned to several different constructions in Drools: fact types, fact attributes and rules. Drools uses the at sign ('@') to introduce metadata, and it always uses the form:

@metadata_key( metadata_value )The parenthesized metadata_value is optional.

For instance, if you want to declare a metadata attribute like

author, whose value is Bob, you could

simply write:

Drools allows the declaration of any arbitrary metadata attribute, but some will have special meaning to the engine, while others are simply available for querying at runtime. Drools allows the declaration of metadata both for fact types and for fact attributes. Any metadata that is declared before the attributes of a fact type are assigned to the fact type, while metadata declared after an attribute are assigned to that particular attribute.

Example 8.19. Declaring metadata attributes for fact types and attributes

import java.util.Date

declare Person

@author( Bob )

@dateOfCreation( 01-Feb-2009 )

name : String @key @maxLength( 30 )

dateOfBirth : Date

address : Address

endIn the previous example, there are two metadata items declared for

the fact type (@author and @dateOfCreation) and

two more defined for the name attribute (@key and

@maxLength). Please note that the @key metadata

has no required value, and so the parentheses and the value were

omitted.:

Some annotations have predefined semantics that are interpreted by the engine. The following is a list of some of these predefined annotations and their meaning.

The @role annotation defines how the engine should handle instances of that type: either as regular facts or as events. It accepts two possible values:

fact : this is the default, declares that the type is to be handled as a regular fact.

event : declares that the type is to be handled as an event.

The following example declares that the fact type StockTick in a stock broker application is to be handled as an event.

Example 8.20. declaring a fact type as an event

import some.package.StockTick

declare StockTick

@role( event )

end

The same applies to facts declared inline. If StockTick was a fact type declared in the DRL itself, instead of a previously existing class, the code would be:

Example 8.21. declaring a fact type and assigning it the event role

declare StockTick

@role( event )

datetime : java.util.Date

symbol : String

price : double

end

By default all type declarations are compiled with type safety enabled; @typesafe( false ) provides a means to override this behaviour by permitting a fall-back, to type unsafe evaluation where all constraints are generated as MVEL constraints and executed dynamically. This can be important when dealing with collections that do not have any generics or mixed type collections.

Every event has an associated timestamp assigned to it. By default, the timestamp for a given event is read from the Session Clock and assigned to the event at the time the event is inserted into the working memory. Although, sometimes, the event has the timestamp as one of its own attributes. In this case, the user may tell the engine to use the timestamp from the event's attribute instead of reading it from the Session Clock.

@timestamp( <attributeName> )To tell the engine what attribute to use as the source of the event's timestamp, just list the attribute name as a parameter to the @timestamp tag.

Example 8.22. declaring the VoiceCall timestamp attribute

declare VoiceCall

@role( event )

@timestamp( callDateTime )

end

Drools supports both event semantics: point-in-time events and interval-based events. A point-in-time event is represented as an interval-based event whose duration is zero. By default, all events have duration zero. The user may attribute a different duration for an event by declaring which attribute in the event type contains the duration of the event.

@duration( <attributeName> )So, for our VoiceCall fact type, the declaration would be:

Example 8.23. declaring the VoiceCall duration attribute

declare VoiceCall

@role( event )

@timestamp( callDateTime )

@duration( callDuration )

endImportant

This tag is only considered when running the engine in STREAM mode. Also, additional discussion on the effects of using this tag is made on the Memory Management section. It is included here for completeness.

Events may be automatically expired after some time in the working memory. Typically this happens when, based on the existing rules in the knowledge base, the event can no longer match and activate any rules. Although, it is possible to explicitly define when an event should expire.

@expires( <timeOffset> )The value of timeOffset is a temporal interval in the form:

[#d][#h][#m][#s][#[ms]]Where [ ] means an optional parameter and # means a numeric value.

So, to declare that the VoiceCall facts should be expired after 1 hour and 35 minutes after they are inserted into the working memory, the user would write:

Example 8.24. declaring the expiration offset for the VoiceCall events

declare VoiceCall

@role( event )

@timestamp( callDateTime )

@duration( callDuration )

@expires( 1h35m )

endThe @expires policy will take precedence and override the implicit expiration offset calculated from temporal constraints and sliding windows in the knowledge base.

Facts that implement support for property changes as defined in the Javabean(tm) spec, now can be annotated so that the engine register itself to listen for changes on fact properties. The boolean parameter that was used in the insert() method in the Drools 4 API is deprecated and does not exist in the drools-api module.

As noted before, Drools also supports annotations in type attributes. Here is a list of predefined attribute annotations.

Declaring an attribute as a key attribute has 2 major effects on generated types:

The attribute will be used as a key identifier for the type, and as so, the generated class will implement the equals() and hashCode() methods taking the attribute into account when comparing instances of this type.

Drools will generate a constructor using all the key attributes as parameters.

For instance:

Example 8.26. example of @key declarations for a type

declare Person

firstName : String @key

lastName : String @key

age : int

end

For the previous example, Drools will generate equals() and hashCode() methods that will check the firstName and lastName attributes to determine if two instances of Person are equal to each other, but will not check the age attribute. It will also generate a constructor taking firstName and lastName as parameters, allowing one to create instances with a code like this:

Example 8.27. creating an instance using the key constructor

Person person = new Person( "John", "Doe" );Patterns support positional arguments on type declarations.

Positional arguments are ones where you don't need to specify the field name, as the position maps to a known named field. i.e. Person( name == "mark" ) can be rewritten as Person( "mark"; ). The semicolon ';' is important so that the engine knows that everything before it is a positional argument. Otherwise we might assume it was a boolean expression, which is how it could be interpreted after the semicolon. You can mix positional and named arguments on a pattern by using the semicolon ';' to separate them. Any variables used in a positional that have not yet been bound will be bound to the field that maps to that position.

declare Cheese

name : String

shop : String

price : int

end

The default order is the declared order, but this can be overridden using @position

declare Cheese

name : String @position(1)

shop : String @position(2)

price : int @position(0)

end

The @Position annotation, in the org.drools.definition.type package, can be used to annotate original pojos on the classpath. Currently only fields on classes can be annotated. Inheritance of classes is supported, but not interfaces of methods yet.

Example patterns, with two constraints and a binding. Remember semicolon ';' is used to differentiate the positional section from the named argument section. Variables and literals and expressions using just literals are supported in positional arguments, but not variables.

Cheese( "stilton", "Cheese Shop", p; )

Cheese( "stilton", "Cheese Shop"; p : price )

Cheese( "stilton"; shop == "Cheese Shop", p : price )

Cheese( name == "stilton"; shop == "Cheese Shop", p : price )

@Position is inherited when beans extend each other; while not recommended, two fields may have the same @position value, and not all consecutive values need be declared. If a @position is repeated, the conflict is solved using inheritance (fields in the superclass have the precedence) and the declaration order. If a @position value is missing, the first field without an explicit @position (if any) is selected to fill the gap. As always, conflicts are resolved by inheritance and declaration order.

declare Cheese

name : String

shop : String @position(2)

price : int @position(0)

end

declare SeasonedCheese extends Cheese

year : Date @position(0)

origin : String @position(6)

country : String

endIn the example, the field order would be : price (@position 0 in the superclass), year (@position 0 in the subclass), name (first field with no @position), shop (@position 2), country (second field without @position), origin.

Drools allows the declaration of metadata attributes for existing types in the same way as when declaring metadata attributes for new fact types. The only difference is that there are no fields in that declaration.

For instance, if there is a class org.drools.examples.Person, and one wants to declare metadata for it, it's possible to write the following code:

Example 8.28. Declaring metadata for an existing type

import org.drools.examples.Person

declare Person

@author( Bob )

@dateOfCreation( 01-Feb-2009 )

end

Instead of using the import, it is also possible to reference the class by its fully qualified name, but since the class will also be referenced in the rules, it is usually shorter to add the import and use the short class name everywhere.

Example 8.29. Declaring metadata using the fully qualified class name

declare org.drools.examples.Person

@author( Bob )

@dateOfCreation( 01-Feb-2009 )

endGenerate constructors with parameters for declared types.

Example: for a declared type like the following:

declare Person

firstName : String @key

lastName : String @key

age : int

end

The compiler will implicitly generate 3 constructors: one without parameters, one with the @key fields, and one with all fields.

Person() // parameterless constructor

Person( String firstName, String lastName )

Person( String firstName, String lastName, int age )@typesafe( <boolean>) has been added to type declarations. By default all type declarations are compiled with type safety enabled; @typesafe( false ) provides a means to override this behaviour by permitting a fall-back, to type unsafe evaluation where all constraints are generated as MVEL constraints and executed dynamically. This can be important when dealing with collections that do not have any generics or mixed type collections.

Declared types are usually used inside rules files, while Java models are used when sharing the model between rules and applications. Although, sometimes, the application may need to access and handle facts from the declared types, especially when the application is wrapping the rules engine and providing higher level, domain specific user interfaces for rules management.

In such cases, the generated classes can be handled as usual with the Java Reflection API, but, as we know, that usually requires a lot of work for small results. Therefore, Drools provides a simplified API for the most common fact handling the application may want to do.

The first important thing to realize is that a declared fact will

belong to the package where it was declared. So, for instance, in the

example below, Person will belong to the

org.drools.examples package, and so the fully qualified name

of the generated class will be

org.drools.examples.Person.

Example 8.30. Declaring a type in the org.drools.examples package

package org.drools.examples

import java.util.Date

declare Person

name : String

dateOfBirth : Date

address : Address

endDeclared types, as discussed previously, are generated at knowledge base compilation time, i.e., the application will only have access to them at application run time. Therefore, these classes are not available for direct reference from the application.

Drools then provides an interface through which users can handle

declared types from the application code:

org.drools.definition.type.FactType. Through this interface,

the user can instantiate, read and write fields in the declared fact

types.

Example 8.31. Handling declared fact types through the API

// get a reference to a knowledge base with a declared type:

KieBase kbase = ...

// get the declared FactType

FactType personType = kbase.getFactType( "org.drools.examples",

"Person" );

// handle the type as necessary:

// create instances:

Object bob = personType.newInstance();

// set attributes values

personType.set( bob,

"name",

"Bob" );

personType.set( bob,

"age",

42 );

// insert fact into a session

KieSession ksession = ...

ksession.insert( bob );

ksession.fireAllRules();

// read attributes

String name = personType.get( bob, "name" );

int age = personType.get( bob, "age" );

The API also includes other helpful methods, like setting all the attributes at once, reading values from a Map, or reading all attributes at once, into a Map.

Although the API is similar to Java reflection (yet much simpler to use), it does not use reflection underneath, relying on much more performant accessors implemented with generated bytecode.

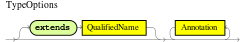

Type declarations now support 'extends' keyword for inheritance

In order to extend a type declared in Java by a DRL declared subtype, repeat the supertype in a declare statement without any fields.

b org.people.Person

declare Person end

declare Student extends Person

school : String

end

declare LongTermStudent extends Student

years : int

course : String

endWARNING : this feature is still experimental and subject to changes

The same fact may have multiple dynamic types which do not fit naturally in a class hierarchy. Traits allow to model this very common scenario. A trait is an interface that can be applied (and eventually removed) to an individual object at runtime. To create a trait rather than a traditional bean, one has to declare them explicitly as in the following example:

Example 8.32.

declare trait GoldenCustomer

// fields will map to getters/setters

code : String

balance : long

discount : int

maxExpense : long

endAt runtime, this declaration results in an interface, which can be used to write patterns, but can not be instantiated directly. In order to apply a trait to an object, we provide the new don keyword, which can be used as simply as this:

when a core object dons a trait, a proxy class is created on the fly (one such class will be generated lazily for each core/trait class combination). The proxy instance, which wraps the core object and implements the trait interface, is inserted automatically and will possibly activate other rules. An immediate advantage of declaring and using interfaces, getting the implementation proxy for free from the engine, is that multiple inheritance hierarchies can be exploited when writing rules. The core classes, however, need not implement any of those interfaces statically, also facilitating the use of legacy classes as cores. In fact, any object can don a trait, provided that they are declared as @Traitable. Notice that this annotation used to be optional, but now is mandatory.

Example 8.34.

import org.drools.core.factmodel.traits.Traitable;

declare Customer

@Traitable

code : String

balance : long

endThe only connection between core classes and trait interfaces is at the proxy level: a trait is not specifically tied to a core class. This means that the same trait can be applied to totally different objects. For this reason, the trait does not transparently expose the fields of its core object. So, when writing a rule using a trait interface, only the fields of the interface will be available, as usual. However, any field in the interface that corresponds to a core object field, will be mapped by the proxy class:

Example 8.35.

when

$o: OrderItem( $p : price, $code : custCode )

$c: GoldenCustomer( code == $code, $a : balance, $d: discount )

then

$c.setBalance( $a - $p*$d );

endIn this case, the code and balance would be read from the underlying Customer object. Likewise, the setAccount will modify the underlying object, preserving a strongly typed access to the data structures. A hard field must have the same name and type both in the core class and all donned interfaces. The name is used to establish the mapping: if two fields have the same name, then they must also have the same declared type. The annotation @org.drools.core.factmodel.traits.Alias allows to relax this restriction. If an @Alias is provided, its value string will be used to resolve mappings instead of the original field name. @Alias can be applied both to traits and core beans.

Example 8.36.

import org.drools.core.factmodel.traits.*;

declare trait GoldenCustomer

balance : long @Alias( "org.acme.foo.accountBalance" )

end

declare Person

@Traitable

name : String

savings : long @Alias( "org.acme.foo.accountBalance" )

end

when

GoldenCustomer( balance > 1000 ) // will react to new Person( 2000 )

then

end

More work is being done on reaxing this constraint (see the experimental section on "logical" traits later). Now, one might wonder what happens when a core class does NOT provide the implementation for a field defined in an interface. We call hard fields those trait fields which are also core fields and thus readily available, while we define soft those fields which are NOT provided by the core class. Hidden fields, instead, are fields in the core class not exposed by the interface.

So, while hard field management is intuitive, there remains the problem of soft and hidden fields. Hidden fields are normally only accessible using the core class directly. However, the "fields" Map can be used on a trait interface to access a hidden field. If the field can't be resolved, null will be returned. Notice that this feature is likely to change in the future.

Example 8.37.

when

$sc : GoldenCustomer( fields[ "age" ] > 18 ) // age is declared by the underlying core class, but not by GoldenCustomer

thenSoft fields, instead, are stored in a Map-like data structure that is specific to each core object and referenced by the proxy(es), so that they are effectively shared even when an object dons multiple traits.

Example 8.38.

when

$sc : GoldenCustomer( $c : code, // hard getter

$maxExpense : maxExpense > 1000 // soft getter

)

then

$sc.setDiscount( ... ); // soft setter

endA core object also holds a reference to all its proxies, so that it is possible to track which type(s) have been added to an object, using a sort of dynamic "instanceof" operator, which we called isA. The operator can accept a String, a class literal or a list of class literals. In the latter case, the constraint is satisfied only if all the traits have been donned.

Example 8.39.

$sc : GoldenCustomer( $maxExpense : maxExpense > 1000,

this isA "SeniorCustomer", this isA [ NationalCustomer.class, OnlineCustomer.class ]

)Eventually, the business logic may require that a trait is removed from a wrapped object. To this end, we provide two options. The first is a "logical don", which will result in a logical insertion of the proxy resulting from the traiting operation. The TMS will ensure that the trait is removed when its logical support is removed in the first place.

Example 8.40.

then

don( $x, // core object

Customer.class, // trait class

true // optional flag for logical insertion

)

The second is the use of the "shed" keyword, which causes the removal of any type that is a subtype (or equivalent) of the one passed as an argument. Notice that, as of version 5.5, shed would only allow to remove a single specific trait.

This operation returns another proxy implementing the org.drools.core.factmodel.traits.Thing interface, where the getFields() and getCore() methods are defined. Internally, in fact, all declared traits are generated to extend this interface (in addition to any others specified). This allows to preserve the wrapper with the soft fields which would otherwise be lost.

A trait and its proxies are also correlated in another way. Starting from version 5.6, whenever a core object is "modified", its proxies are "modified" automatically as well, to allow trait-based patterns to react to potential changes in hard fields. Likewise, whenever a trait proxy (mached by a trait pattern) is modified, the modification is propagated to the core class and the other traits. Morover, whenever a don operation is performed, the core object is also modified automatically, to reevaluate any "isA" operation which may be triggered.

Potentially, this may result in a high number of modifications, impacting performance (and correctness) heavily. So two solutions are currently implemented. First, whenever a core object is modified, only the most specific traits (in the sense of inheritance between trait interfaces) are updated and an internal blocking mechanism is in place to ensure that each potentially matching pattern is evaluated once and only once. So, in the following situation:

declare trait GoldenCustomer end

declare trait NationalGoldenustomer extends GoldenCustomer end

declare trait SeniorGoldenCustomer extends GoldenCustomer enda modification of an object that is both a GoldenCustomer, a NationalGoldenCustomer and a SeniorGoldenCustomer wold cause only the latter two proxies to be actually modified. The first would match any pattern for GoldenCustomer and NationalGoldenCustomer; the latter would instead be prevented from rematching GoldenCustomer, but would be allowed to match SeniorGoldenCustomer patterns. It is not necessary, instead, to modify the GoldenCustomer proxy since it is already covered by at least one other more specific trait.

The second method, up to the usr, is to mark traits as @PropertyReactive. Property reactivity is trait-enabled and takes into account the trait field mappings, so to block unnecessary propagations.

WARNING : This feature is extremely experimental and subject to changes

Normally, a hard field must be exposed with its original type by all traits donned by an object, to prevent situations such as

Example 8.42.

declare Person

@Traitable

name : String

id : String

end

declare trait Customer

id : String

end

declare trait Patient

id : long // Person can't don Patient, or an exception will be thrown

endShould a Person don both Customer and Patient, the type of the hard field id would be ambiguous. However, consider the following example, where GoldenCustomers refer their best friends so that they become Customers as well:

Example 8.43.

declare Person

@Traitable( logical=true )

bestFriend : Person

end

declare trait Customer end

declare trait GoldenCustomer extends Customer

refers : Customer @Alias( "bestFriend" )

end Aside from the @Alias, a Person-as-GoldenCustomer's best friend might be compatible with the requirements of the trait GoldenCustomer, provided that they are some kind of Customer themselves. Marking a Person as "logically traitable" - i.e. adding the annotation @Traitable( logical = true ) - will instruct the engine to try and preserve the logical consistency rather than throwing an exception due to a hard field with different type declarations (Person vs Customer). The following operations would then work:

Example 8.44.

Person p1 = new Person();

Person p2 = new Person();

p1.setBestFriend( p2 );

...

Customer c2 = don( p2, Customer.class );

...

GoldenCustomer gc1 = don( p1, GoldenCustomer.class );

...

p1.getBestFriend(); // returns p2

gc1.getRefers(); // returns c2, a Customer proxy wrapping p2Notice that, by the time p1 becomes GoldenCustomer, p2 must have already become a Customer themselves, otherwise a runtime exception will be thrown since the very definition of GoldenCustomer would have been violated.

In some cases, however, one might want to infer, rather than verify, that p2 is a Customer by virtue that p1 is a GoldenCustomer. This modality can be enabled by marking Customer as "logical", using the annotation @org.drools.core.factmodel.traits.Trait( logical = true ). In this case, should p2 not be a Customer by the time that p1 becomes a GoldenCustomer, it will be automatically don the trait Customer to preserve the logical integrity of the system.

Notice that the annotation on the core class enables the dynamic type management for its fields, whereas the annotation on the traits determines whether they will be enforced as integrity constraints or cascaded dynamically.

Example 8.45.

import org.drools.factmodel.traits.*;

declare trait Customer

@Trait( logical = true )

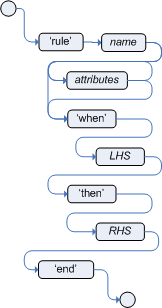

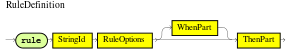

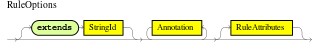

end A rule specifies that when a particular set of conditions occur, specified in the Left Hand Side (LHS), then do what queryis specified as a list of actions in the Right Hand Side (RHS). A common question from users is "Why use when instead of if?" "When" was chosen over "if" because "if" is normally part of a procedural execution flow, where, at a specific point in time, a condition is to be checked. In contrast, "when" indicates that the condition evaluation is not tied to a specific evaluation sequence or point in time, but that it happens continually, at any time during the life time of the engine; whenever the condition is met, the actions are executed.

A rule must have a name, unique within its rule package. If you define a rule twice in the same DRL it produces an error while loading. If you add a DRL that includes a rule name already in the package, it replaces the previous rule. If a rule name is to have spaces, then it will need to be enclosed in double quotes (it is best to always use double quotes).

Attributes - described below - are optional. They are best written one per line.

The LHS of the rule follows the when keyword

(ideally on a new line), similarly the RHS follows the

then keyword (again, ideally on a newline). The rule is

terminated by the keyword end. Rules cannot be

nested.

Example 8.46. Rule Syntax Overview

rule "<name>"

<attribute>*

when

<conditional element>*

then

<action>*

endExample 8.47. A simple rule

rule "Approve if not rejected"

salience -100

agenda-group "approval"

when

not Rejection()

p : Policy(approved == false, policyState:status)

exists Driver(age > 25)

Process(status == policyState)

then

log("APPROVED: due to no objections.");

p.setApproved(true);

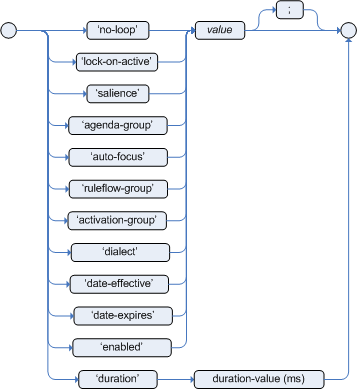

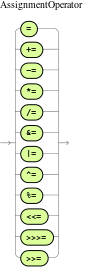

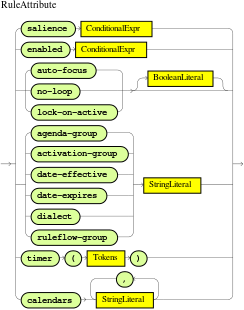

endRule attributes provide a declarative way to influence the behavior of the rule. Some are quite simple, while others are part of complex subsystems such as ruleflow. To get the most from Drools you should make sure you have a proper understanding of each attribute.

no-loopdefault value:

falsetype: Boolean

When a rule's consequence modifies a fact it may cause the rule to activate again, causing an infinite loop. Setting no-loop to true will skip the creation of another Activation for the rule with the current set of facts.

ruleflow-groupdefault value: N/A

type: String

Ruleflow is a Drools feature that lets you exercise control over the firing of rules. Rules that are assembled by the same ruleflow-group identifier fire only when their group is active.

lock-on-activedefault value:

falsetype: Boolean

Whenever a ruleflow-group becomes active or an agenda-group receives the focus, any rule within that group that has lock-on-active set to true will not be activated any more; irrespective of the origin of the update, the activation of a matching rule is discarded. This is a stronger version of no-loop, because the change could now be caused not only by the rule itself. It's ideal for calculation rules where you have a number of rules that modify a fact and you don't want any rule re-matching and firing again. Only when the ruleflow-group is no longer active or the agenda-group loses the focus those rules with lock-on-active set to true become eligible again for their activations to be placed onto the agenda.

saliencedefault value:

0type: integer

Each rule has an integer salience attribute which defaults to zero and can be negative or positive. Salience is a form of priority where rules with higher salience values are given higher priority when ordered in the Activation queue.

Drools also supports dynamic salience where you can use an expression involving bound variables.

Example 8.48. Dynamic Salience

rule "Fire in rank order 1,2,.." salience( -$rank ) when Element( $rank : rank,... ) then ... endagenda-groupdefault value: MAIN

type: String

Agenda groups allow the user to partition the Agenda providing more execution control. Only rules in the agenda group that has acquired the focus are allowed to fire.

auto-focusdefault value:

falsetype: Boolean

When a rule is activated where the

auto-focusvalue is true and the rule's agenda group does not have focus yet, then it is given focus, allowing the rule to potentially fire.activation-groupdefault value: N/A

type: String

Rules that belong to the same activation-group, identified by this attribute's string value, will only fire exclusively. More precisely, the first rule in an activation-group to fire will cancel all pending activations of all rules in the group, i.e., stop them from firing.

Note: This used to be called Xor group, but technically it's not quite an Xor. You may still hear people mention Xor group; just swap that term in your mind with activation-group.

dialectdefault value: as specified by the package

type: String

possible values: "java" or "mvel"

The dialect species the language to be used for any code expressions in the LHS or the RHS code block. Currently two dialects are available, Java and MVEL. While the dialect can be specified at the package level, this attribute allows the package definition to be overridden for a rule.

date-effectivedefault value: N/A

type: String, containing a date and time definition

A rule can only activate if the current date and time is after date-effective attribute.

date-expiresdefault value: N/A

type: String, containing a date and time definition

A rule cannot activate if the current date and time is after the date-expires attribute.

durationdefault value: no default value

type: long

The duration dictates that the rule will fire after a specified duration, if it is still true.

Rules now support both interval and cron based timers, which replace the now deprecated duration attribute.

Example 8.50. Sample timer attribute uses

timer ( int: <initial delay> <repeat interval>? )

timer ( int: 30s )

timer ( int: 30s 5m )

timer ( cron: <cron expression> )

timer ( cron:* 0/15 * * * ? )Interval (indicated by "int:") timers follow the semantics of java.util.Timer objects, with an initial delay and an optional repeat interval. Cron (indicated by "cron:") timers follow standard Unix cron expressions:

Example 8.51. A Cron Example

rule "Send SMS every 15 minutes"

timer (cron:* 0/15 * * * ?)

when

$a : Alarm( on == true )

then

channels[ "sms" ].insert( new Sms( $a.mobileNumber, "The alarm is still on" );

endA rule controlled by a timer becomes active when it matches, and once for each individual match. Its consequence is executed repeatedly, according to the timer's settings. This stops as soon as the condition doesn't match any more.

Consequences are executed even after control returns from a call to fireUntilHalt. Moreover, the Engine remains reactive to any changes made to the Working Memory. For instance, removing a fact that was involved in triggering the timer rule's execution causes the repeated execution to terminate, or inserting a fact so that some rule matches will cause that rule to fire. But the Engine is not continually active, only after a rule fires, for whatever reason. Thus, reactions to an insertion done asynchronously will not happen until the next execution of a timer-controlled rule. Disposing a session puts an end to all timer activity.

Conversely when the rule engine runs in passive mode (i.e.: using fireAllRules

instead of fireUntilHalt) by default it doesn't fire consequences of timed rules

unless fireAllRules isn't invoked again. However it is possible to change this

default behavior by configuring the KieSession with a TimedRuleExectionOption

as shown in the following example.

Example 8.52. Configuring a KieSession to automatically execute timed rules

KieSessionConfiguration ksconf = KieServices.Factory.get().newKieSessionConfiguration();

ksconf.setOption( TimedRuleExectionOption.YES );

KSession ksession = kbase.newKieSession(ksconf, null);It is also possible to have a finer grained control on the timed rules that have to be

automatically executed. To do this it is necessary to set a FILTERED

TimedRuleExectionOption that allows to define a callback to filter those

rules, as done in the next example.

Example 8.53. Configuring a filter to choose which timed rules should be automatically executed

KieSessionConfiguration ksconf = KieServices.Factory.get().newKieSessionConfiguration();

conf.setOption( new TimedRuleExectionOption.FILTERED(new TimedRuleExecutionFilter() {

public boolean accept(Rule[] rules) {

return rules[0].getName().equals("MyRule");

}

}) );For what regards interval timers it is also possible to define both the delay and interval as an expression instead of a fixed value. To do that it is necessary to use an expression timer (indicated by "expr:") as in the following example:

Example 8.54. An Expression Timer Example

declare Bean

delay : String = "30s"

period : long = 60000

end

rule "Expression timer"

timer( expr: $d, $p )

when

Bean( $d : delay, $p : period )

then

endThe expressions, $d and $p in this case, can use any variable defined in the pattern matching part of the rule and can be any String that can be parsed in a time duration or any numeric value that will be internally converted in a long representing a duration expressed in milliseconds.

Both interval and expression timers can have 3 optional parameters named "start", "end" and "repeat-limit". When one or more of these parameters are used the first part of the timer definition must be followed by a semicolon ';' and the parameters have to be separated by a comma ',' as in the following example:

Example 8.55. An Interval Timer with a start and an end

timer (int: 30s 10s; start=3-JAN-2010, end=5-JAN-2010)The value for start and end parameters can be a Date, a String representing a Date or a long, or more in general any Number, that will be transformed in a Java Date applying the following conversion:

new Date( ((Number) n).longValue() )Conversely the repeat-limit can be only an integer and it defines the maximum number of repetitions allowed by the timer. If both the end and the repeat-limit parameters are set the timer will stop when the first of the two will be matched.

The using of the start parameter implies the definition of a phase for the timer, where the beginning of the phase is given by the start itself plus the eventual delay. In other words in this case the timed rule will then be scheduled at times:

start + delay + n*periodfor up to repeat-limit times and no later than the end timestamp (whichever first). For instance the rule having the following interval timer

timer ( int: 30s 1m; start="3-JAN-2010" )will be scheduled at the 30th second of every minute after the midnight of the 3-JAN-2010. This also means that if for example you turn the system on at midnight of the 3-FEB-2010 it won't be scheduled immediately but will preserve the phase defined by the timer and so it will be scheduled for the first time 30 seconds after the midnight. If for some reason the system is paused (e.g. the session is serialized and then deserialized after a while) the rule will be scheduled only once to recover from missing activations (regardless of how many activations we missed) and subsequently it will be scheduled again in phase with the timer.

Calendars are used to control when rules can fire. The Calendar API is modelled on Quartz:

Example 8.56. Adapting a Quartz Calendar

Calendar weekDayCal = QuartzHelper.quartzCalendarAdapter(org.quartz.Calendar quartzCal)Calendars are registered with the KieSession:

They can be used in conjunction with normal rules and rules including timers. The rule attribute "calendars" may contain one or more comma-separated calendar names written as string literals.

Example 8.58. Using Calendars and Timers together

rule "weekdays are high priority"

calendars "weekday"

timer (int:0 1h)

when

Alarm()

then

send( "priority high - we have an alarm" );

end

rule "weekend are low priority"

calendars "weekend"

timer (int:0 4h)

when

Alarm()

then

send( "priority low - we have an alarm" );

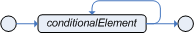

endThe Left Hand Side (LHS) is a common name for the conditional part of the rule. It consists of zero or more Conditional Elements. If the LHS is empty, it will be considered as a condition element that is always true and it will be activated once, when a new WorkingMemory session is created.

Example 8.59. Rule without a Conditional Element

rule "no CEs"

when

// empty

then

... // actions (executed once)

end

// The above rule is internally rewritten as:

rule "eval(true)"

when

eval( true )

then

... // actions (executed once)

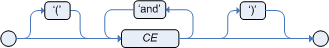

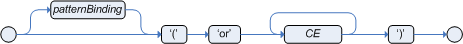

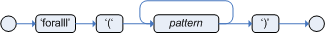

endConditional elements work on one or more

patterns (which are described below). The most

common conditional element is "and". Therefore it is

implicit when you have multiple patterns in the LHS of a rule that are

not connected in any way:

Example 8.60. Implicit and

rule "2 unconnected patterns"

when

Pattern1()

Pattern2()

then

... // actions

end

// The above rule is internally rewritten as:

rule "2 and connected patterns"

when

Pattern1()

and Pattern2()

then

... // actions

endNote

An "and" cannot have a leading declaration

binding (unlike for example or). This is obvious,

since a declaration can only reference a single fact at a time, and

when the "and" is satisfied it matches both facts -

so which fact would the declaration bind to?

// Compile error

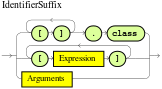

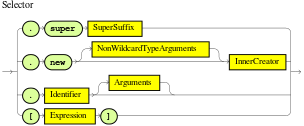

$person : (Person( name == "Romeo" ) and Person( name == "Juliet"))A pattern element is the most important Conditional Element. It can potentially match on each fact that is inserted in the working memory.

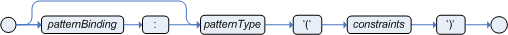

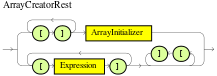

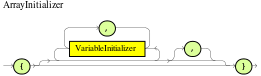

A pattern contains of zero or more constraints and has an optional pattern binding. The railroad diagram below shows the syntax for this.

In its simplest form, with no constraints, a pattern matches

against a fact of the given type. In the following case the type is

Cheese, which means that the pattern will match against

all Person objects in the Working Memory:

Person()The type need not be the actual class of some fact object. Patterns may refer to superclasses or even interfaces, thereby potentially matching facts from many different classes.

Object() // matches all objects in the working memoryInside of the pattern parenthesis is where all the action happens: it defines the constraints for that pattern. For example, with a age related constraint:

Person( age == 100 )Note

For backwards compatibility reasons it's allowed to suffix

patterns with the ; character. But it is not

recommended to do that.

For referring to the matched object, use a pattern binding

variable such as $p.

Example 8.61. Pattern with a binding variable

rule ...

when

$p : Person()

then

System.out.println( "Person " + $p );

endThe prefixed dollar symbol ($) is just a

convention; it can be useful in complex rules where it helps to easily

differentiate between variables and fields, but it is not

mandatory.

A constraint is an expression that returns

true or false. This example has

a constraint that states 5 is smaller than

6:

Person( 5 < 6 ) // just an example, as constraints like this would be useless in a real patternIn essence, it's a Java expression with some enhancements (such

as property access) and a few differences (such as

equals() semantics for ==).

Let's take a deeper look.

Any bean property can be used directly. A bean property is

exposed using a standard Java bean getter: a method

getMyProperty() (or

isMyProperty() for a primitive boolean) which takes

no arguments and return something. For example: the age property is

written as age in DRL instead of the getter

getAge():

Person( age == 50 )

// this is the same as:

Person( getAge() == 50 )Drools uses the standard JDK Introspector

class to do this mapping, so it follows the standard Java bean

specification.

Note

We recommend using property access (age)

over using getters explicitly (getAge()) because

of performance enhancements through field indexing.

Warning

Property accessors must not change the state of the object in a way that may effect the rules. Remember that the rule engine effectively caches the results of its matching in between invocations to make it faster.

public int getAge() {

age++; // Do NOT do this

return age;

}public int getAge() {

Date now = DateUtil.now(); // Do NOT do this

return DateUtil.differenceInYears(now, birthday);

}To solve this latter case, insert a fact that wraps the

current date into working memory and update that fact between

fireAllRules as needed.

Note

The following fallback applies: if the getter of a property cannot be found, the compiler will resort to using the property name as a method name and without arguments:

Person( age == 50 )

// If Person.getAge() does not exists, this falls back to:

Person( age() == 50 )Nested property access is also supported:

Person( address.houseNumber == 50 )

// this is the same as:

Person( getAddress().getHouseNumber() == 50 )Nested properties are also indexed.

Warning

In a stateful session, care should be taken when using nested

accessors as the Working Memory is not aware of any of the nested

values, and does not know when they change. Either consider them

immutable while any of their parent references are inserted into the

Working Memory. Or, instead, if you wish to modify a nested value

you should mark all of the outer facts as updated. In the above

example, when the houseNumber changes, any

Person with that Address must

be marked as updated.

You can use any Java expression that returns a

boolean as a constraint inside the parentheses of a

pattern. Java expressions can be mixed with other expression

enhancements, such as property access:

Person( age == 50 )It is possible to change the evaluation priority by using parentheses, as in any logic or mathematical expression:

Person( age > 100 && ( age % 10 == 0 ) )It is possible to reuse Java methods:

Person( Math.round( weight / ( height * height ) ) < 25.0 )Warning

As for property accessors, methods must not change the state of the object in a way that may affect the rules. Any method executed on a fact in the LHS should be a read only method.

Person( incrementAndGetAge() == 10 ) // Do NOT do thisWarning

The state of a fact should not change between rule invocations (unless those facts are marked as updated to the working memory on every change):

Person( System.currentTimeMillis() % 1000 == 0 ) // Do NOT do thisNormal Java operator precedence applies, see the operator precedence list below.

Important

All operators have normal Java semantics except for

== and !=.

The == operator has null-safe

equals() semantics:

// Similar to: java.util.Objects.equals(person.getFirstName(), "John")

// so (because "John" is not null) similar to:

// "John".equals(person.getFirstName())

Person( firstName == "John" )The != operator has null-safe

!equals() semantics:

// Similar to: !java.util.Objects.equals(person.getFirstName(), "John")

Person( firstName != "John" )Type coercion is always attempted if the field and the value are of different types; exceptions will be thrown if a bad coercion is attempted. For instance, if "ten" is provided as a string in a numeric evaluator, an exception is thrown, whereas "10" would coerce to a numeric 10. Coercion is always in favor of the field type and not the value type:

Person( age == "10" ) // "10" is coerced to 10The comma character (',') is used to separate

constraint groups. It has implicit AND connective

semantics.

// Person is at least 50 and weighs at least 80 kg

Person( age > 50, weight > 80 )// Person is at least 50, weighs at least 80 kg and is taller than 2 meter.

Person( age > 50, weight > 80, height > 2 )Note

Although the && and

, operators have the same semantics, they are

resolved with different priorities: The

&& operator precedes the

|| operator. Both the

&& and || operator

precede the , operator. See the operator

precedence list below.

The comma operator should be preferred at the top level constraint, as it makes constraints easier to read and the engine will often be able to optimize them better.

The comma (,) operator cannot be embedded in

a composite constraint expression, such as parentheses:

Person( ( age > 50, weight > 80 ) || height > 2 ) // Do NOT do this: compile error

// Use this instead

Person( ( age > 50 && weight > 80 ) || height > 2 )A property can be bound to a variable:

// 2 persons of the same age

Person( $firstAge : age ) // binding

Person( age == $firstAge ) // constraint expressionThe prefixed dollar symbol ($) is just a

convention; it can be useful in complex rules where it helps to easily

differentiate between variables and fields.

Note

For backwards compatibility reasons, It's allowed (but not recommended) to mix a constraint binding and constraint expressions as such:

// Not recommended

Person( $age : age * 2 < 100 )// Recommended (separates bindings and constraint expressions)

Person( age * 2 < 100, $age : age )Bound variable restrictions using the operator

== provide for very fast execution as it use hash

indexing to improve performance.

Drools does not allow bindings to the same declaration. However this is an important aspect to derivation query unification. While positional arguments are always processed with unification a special unification symbol, ':=', was introduced for named arguments named arguments. The following "unifies" the age argument across two people.

Person( $age := age )

Person( $age := age) In essence unification will declare a binding for the first occurrence and constrain to the same value of the bound field for sequence occurrences.

Often it happens that it is necessary to access multiple properties of a nested object as in the following example

Person( name == "mark", address.city == "london", address.country == "uk" )These accessors to nested objects can be grouped with a '.(...)' syntax providing more readable rules as in

Person( name == "mark", address.( city == "london", country == "uk") )Note the '.' prefix, this is necessary to differentiate the nested object constraints from a method call.

When dealing with nested objects, it also quite common the need to cast to a subtype. It is possible to do that via the # symbol as in:

Person( name == "mark", address#LongAddress.country == "uk" )This example casts Address to LongAddress, making its getters available. If the cast is not possible (instanceof returns false), the evaluation will be considered false. Also fully qualified names are supported:

Person( name == "mark", address#org.domain.LongAddress.country == "uk" )It is possible to use multiple inline casts in the same expression:

Person( name == "mark", address#LongAddress.country#DetailedCountry.population > 10000000 )moreover, since we also support the instanceof operator, if that is used we will infer its results for further uses of that field, within that pattern:

Person( name == "mark", address instanceof LongAddress, address.country == "uk" )Besides normal Java literals (including Java 5 enums), this literal is also supported:

The date format dd-mmm-yyyy is supported by

default. You can customize this by providing an alternative date

format mask as the System property named

drools.dateformat. If more control is required, use a

restriction.

It's possible to directly access a List value

by index:

// Same as childList(0).getAge() == 18

Person( childList[0].age == 18 )It's also possible to directly access a Map

value by key:

// Same as credentialMap.get("jsmith").isValid()

Person( credentialMap["jsmith"].valid )This allows you to place more than one restriction on a field

using the restriction connectives && or

||. Grouping via parentheses is permitted,

resulting in a recursive syntax pattern.

// Simple abbreviated combined relation condition using a single &&

Person( age > 30 && < 40 )// Complex abbreviated combined relation using groupings

Person( age ( (> 30 && < 40) ||

(> 20 && < 25) ) )// Mixing abbreviated combined relation with constraint connectives

Person( age > 30 && < 40 || location == "london" )Coercion to the correct value for the evaluator and the field will be attempted.

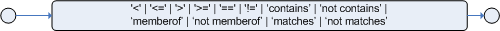

These operators can be used on properties with natural

ordering. For example, for Date fields, <

means before, for String

fields, it means alphabetically lower.

Person( firstName < $otherFirstName )Person( birthDate < $otherBirthDate )Only applies on Comparable

properties.

The !. operator allows to derefencing in a null-safe way. More in details the matching algorithm requires the value to the left of the !. operator to be not null in order to give a positive result for pattern matching itself. In other words the pattern:

Person( $streetName : address!.street )will be internally translated in:

Person( address != null, $streetName : address.street )Matches a field against any valid Java Regular Expression. Typically that regexp is a string literal, but variables that resolve to a valid regexp are also allowed.

Note

Like in Java, regular expressions written as string literals

need to escape '\'.

Only applies on String properties.

Using matches against a null value

always evaluates to false.

The operator returns true if the String does not match the

regular expression. The same rules apply as for the

matches operator. Example:

Only applies on String properties.

Using not matches against a null value

always evaluates to true.

The operator contains is used to check

whether a field that is a Collection or elements contains the specified

value.

Example 8.65. Contains with Collections

CheeseCounter( cheeses contains "stilton" ) // contains with a String literal

CheeseCounter( cheeses contains $var ) // contains with a variableOnly applies on Collection

properties.

The operator contains can also be used in place of String.contains()

constraints checks.

Example 8.66. Contains with String literals

Cheese( name contains "tilto" )

Person( fullName contains "Jr" )

String( this contains "foo" )The operator not contains is used to check

whether a field that is a Collection or elements does not

contain the specified value.

Example 8.67. Literal Constraint with Collections

CheeseCounter( cheeses not contains "cheddar" ) // not contains with a String literal

CheeseCounter( cheeses not contains $var ) // not contains with a variableOnly applies on Collection

properties.

Note

For backward compatibility, the

excludesoperator is supported as a synonym fornot contains.

The operator not contains can also be used in place of the logical negation of String.contains()

for constraints checks - i.e.: ! String.contains()

Example 8.68. Contains with String literals

Cheese( name not contains "tilto" )

Person( fullName not contains "Jr" )

String( this not contains "foo" )The operator memberOf is used to check

whether a field is a member of a collection or elements; that

collection must be a variable.

The operator not memberOf is used to check

whether a field is not a member of a collection or elements; that

collection must be a variable.

Example 8.70. Literal Constraint with Collections

CheeseCounter( cheese not memberOf $matureCheeses )This operator is similar to matches, but it

checks whether a word has almost the same sound (using English

pronunciation) as the given value. This is based on the Soundex

algorithm (see

http://en.wikipedia.org/wiki/Soundex).

Example 8.71. Test with soundslike

// match cheese "fubar" or "foobar"

Cheese( name soundslike 'foobar' )This operator str is used to check whether

a field that is a String starts with or ends with

a certain value. It can also be used to check the length of the

String.

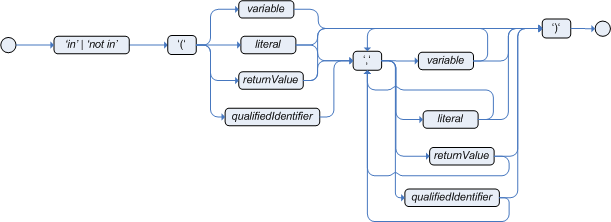

Message( routingValue str[startsWith] "R1" )Message( routingValue str[endsWith] "R2" )Message( routingValue str[length] 17 )The compound value restriction is used where there is more

than one possible value to match. Currently only the

in and not in evaluators

support this. The second operand of this operator must be a

comma-separated list of values, enclosed in parentheses. Values may

be given as variables, literals, return values or qualified

identifiers. Both evaluators are actually syntactic

sugar, internally rewritten as a list of multiple

restrictions using the operators != and

==.

Example 8.72. Compound Restriction using "in"

Person( $cheese : favouriteCheese )

Cheese( type in ( "stilton", "cheddar", $cheese ) )An inline eval constraint can use any valid dialect expression as long as it results to a primitive boolean. The expression must be constant over time. Any previously bound variable, from the current or previous pattern, can be used; autovivification is also used to auto-create field binding variables. When an identifier is found that is not a current variable, the builder looks to see if the identifier is a field on the current object type, if it is, the field binding is auto-created as a variable of the same name. This is called autovivification of field variables inside of inline eval's.

This example will find all male-female pairs where the male is 2

years older than the female; the variable age is

auto-created in the second pattern by the autovivification

process.

Example 8.73. Return Value operator

Person( girlAge : age, sex = "F" )

Person( eval( age == girlAge + 2 ), sex = 'M' ) // eval() is actually obsolete in this exampleNote